Developing and Testing a Model to Understand Relationships between e-Learning Outcomes and Human Factors

Sean B. Eom¹ and Nicholas J. Ashill²

¹ Department of Accounting, Southeast Missouri State University, 1 University Plaza, Cape Girardeau, U.S.A.

² Department of Marketing, American University of Sharjah, Sharjah, U.A.E.

Keywords: e-Learning Outcomes, Human Factors, Student Satisfaction, Interaction.

Abstract: This study applies partial least squares (PLS) to examine the effects of interactions and instructor feedback

and facilitation on the students' satisfaction and their perceived learning outcomes in the context of

university online courses. Independent variables included in the study are course structure, self-motivation,

learning style, and interaction. A total of 397 valid unduplicated responses from students who have

completed at least one online course at a university in the Midwest U.S. are used to examine the structural

model. Four of the five antecedent constructs hypothesized to directly affect student/instructor interaction

are significant. This research makes a critical contribution in e-learning empirical research by identifying

two critical human factors that make e-learning a superior mode of instruction.

1 INTRODUCTION

According to a comprehensive online and blended

learning literature review (Arbaugh et al., 2009), e-learning empirical researchers have accumulated

important findings in regard to potential predictors

of e-learning outcomes, control variables, and

criterion variables. This review identified the two most common research streams: first, a comparison of learning outcomes between face-to-face and e-learning course delivery modes; second, research

which examined potential predictors of e-learning

outcomes. Previous empirical studies in this area of

prediction of e-learning outcomes can be broadly

classified into (1) conceptual frameworks that

identify factors that affect e-learning outcomes and

learner satisfaction, (2) empirical studies that

examine a subset of factors on learning outcomes

(e.g., effects of e-learner characteristics such as

gender, age and e-learning experience), (3) empirical studies examining factors and their effects on e-learning outcomes, and (4) empirical studies

examining factors that make e-learning a superior

mode of instruction. Many research models that

identify the predictors of e-learning outcomes are

built on the conceptual frameworks of Piccoli et al. (2001) and Peltier et al. (2003). The former

identifies human and design factors as antecedents

of learning effectiveness. Human factors are

concerned with students and instructors, while

design factors characterize such variables as

technology, learner control, course content, and

interaction. The conceptual framework of online

education proposed by Peltier et al. (2003) consists of instructor support and mentoring, instructor-to-student interaction, student-to-student interaction,

course structure, course content, and information

delivery technology.

Empirical studies, however, report conflicting findings. Eom et al. (2006), for example, examined

the determinants of students' satisfaction and their

perceived learning outcomes in the context of

university online courses. Independent variables

included in this study were course structure,

instructor feedback, self-motivation, learning style,

interaction, and instructor facilitation as potential

determinants of online learning. The results

indicated that all of the antecedent variables

significantly affect students' satisfaction. Of the six

antecedent variables hypothesized to affect the

perceived learning outcomes, only instructor

feedback and learning style were significant.

Although this study represents an important

milestone in e-learning empirical research by

fundamentally shifting the focus of e-learning

empirical studies from simply identifying the

predictors of e-learning outcomes to identifying a

Eom, S. B. and Ashill, N. J.

Developing and Testing a Model to Understand Relationships between e-Learning Outcomes and Human Factors.

DOI: 10.5220/0004437803610370

In Proceedings of the 15th International Conference on Enterprise Information Systems (ICEIS-2013), pages 361-370

ISBN: 978-989-8565-60-0

Copyright © 2013 SCITEPRESS (Science and Technology Publications, Lda.)

subset of critical success factors that makes learning

outcomes surpass those provided in classroom-based

settings, some of the study’s findings run contrary to

other studies. For example, Eom et al. (2006) found

no support for a positive relationship between

interaction and perceived learning outcomes, a

finding that runs contrary to LaPointe and

Gunawardena (2004).

In the current study, we advance current research

by examining relationships between e-learning

outcomes and human factors, especially human

interactions, in university online education using e-learning systems. Specifically, our conceptual model examines the human interaction construct, which we place as a mediating variable between learning outcomes and three other constructs (course structure, motivation, and learning styles). Using the

extant literature, we begin by introducing and

discussing the research model illustrating factors

affecting e-learning systems outcomes and e-learner

satisfaction. We follow this with a description of the

cross-sectional survey that was used to collect data

and the results from structural equation modeling

(SEM) analysis using PLS-Graph. PLS-based SEM yields robust results even though it imposes no strict measurement, distributional, or sample size assumptions. In the final section, we summarize

and conclude with the implications of the results for

e-learning.

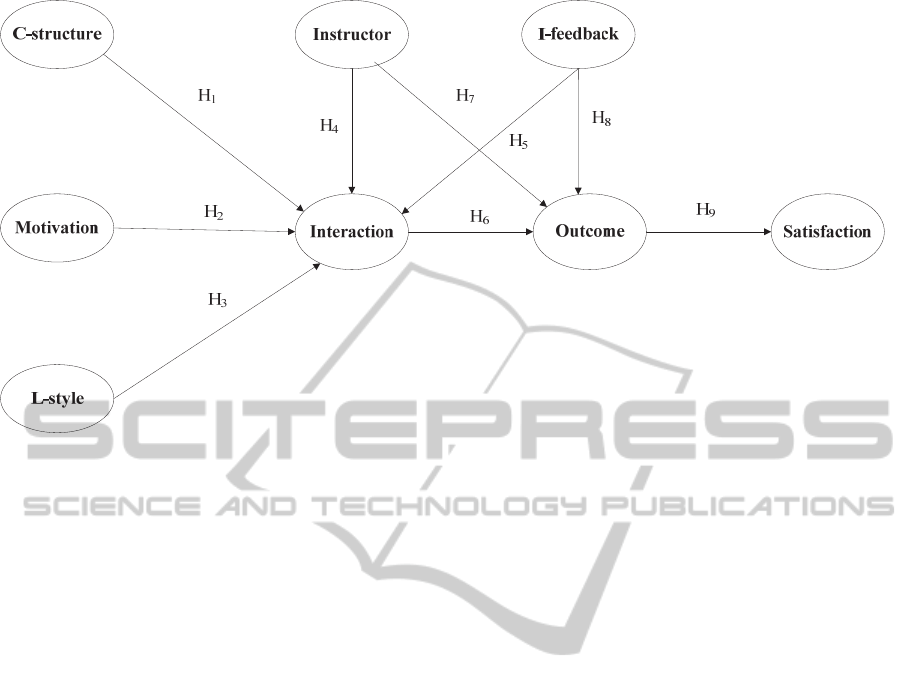

2 RESEARCH MODEL

Figure 1 summarizes the research questions we attempt to answer. There are three antecedents of interaction (course structure, students’ motivation, and students’ learning styles). The impact of these three constructs on learning outcomes is mediated by interaction.

2.1 Antecedents of Interaction

e-Learning systems aim to maximize learning

outcomes using learning management systems. Understanding widely accepted learning theories is a prerequisite to applying these learning management systems and technologies, because these theories define the different roles that the instructor and students play in the learning process and the roles of dynamic interactions among human subsystems (students and the instructor), technology, and content in the learning process. The most widespread learning

paradigms are the behaviorist paradigm of learning

(behaviorism), the constructive paradigm of learning

(constructivism) and the cognitive paradigm of

learning (an extension of constructivism). With

increasing adoption of e-learning, constructivism has

become the dominant learning theory.

Constructivism assumes that individuals learn better

when they control the pace of learning. Therefore,

the instructor supports learner-centered active

learning. Under the cooperative model of learning

(collaborativism), students learn as individuals and also verify, solidify, construct and improve a shared understanding of their mental models. It is necessary

for students to interact with other students and the

instructor in the form of active forum discussions,

private e-mails, teleconferencing and group project

completion. The interaction and active participation

enable students to construct and share the new

knowledge. In this learning process, student

involvement is critical to learning and the instructor

becomes a discussion leader. The socioculturalism

model necessitates empowering students with

freedom and responsibilities since learning is

individualistic (Leidner and Jarvenpaa, 1995).

Moreover, e-learning places greater emphasis on the

constructive paradigm of learning (constructivism)

and the cognitive paradigm of learning (an extension

of constructivism). Consequently, the role of

interaction among students and between students

and the instructor in the e-learning process has

become critical.

The design dimension in Piccoli et al. (2001)

includes a wide range of constructs that affect

effectiveness of e-learning systems such as

technology, learner control, learning model, course

contents and structure, and interaction. Among the

many frameworks/taxonomies of interaction

(Northrup, 2002), this research adopts Moore’s

(1989) communication framework which classified

engagement in learning through (a) interaction

between participants and learning materials, (b)

interaction between participants and tutors/experts,

and (c) interactions among participants. These three

forms of interaction in online courses are recognized

as important and critical constructs determining web-based course quality.

The community of inquiry model is another

useful framework that explains the roles of

interaction to achieve higher learning outcomes and

learner satisfaction. The community of inquiry

framework emphasizes the creation of an effective

online learning community that enhances and

supports learning and learner satisfaction (Akyol and

Garrison, 2011). The essence of the effective online

learning community is the creation of cognitive

presence, which is a condition/learning environment

Figure 1: Research model.

that facilitates higher-order thinking and deep and

meaningful learning (Garrison and Anderson, 2003).

Cognitive presence in the e-learning process enables e-learners to freely exchange ideas and information and connect ideas to construct new knowledge. In doing so, interactions with students and the instructor become a necessary ingredient in

the learning process. The next element of the

community of inquiry model is social presence.

According to Garrison (2009, p. 352),

social presence is defined as “the ability of

participants to identify with community (e.g., course

of study), communicate purposefully in a trusting

environment, and develop interpersonal relationships

by way of projecting their individual personalities.”

2.1.1 Course Structure

Course structure is seen as a crucial variable that

affects the success of distance education along with interaction. According to Moore (1991, p. 3), the

course structure “expresses the rigidity or flexibility

of the program's educational objectives, teaching

strategies, and evaluation methods” and the course

structure describes “the extent to which an education

program can accommodate or be responsive to each

learner's individual needs.”

Course structure has two structural elements: course objectives/expectations and course infrastructure. Course objectives/expectations are

specified in the course syllabus including expected

class participation in the form of online conferencing

systems and group project assignments. These

structural elements affect the interaction level. The

instructor’s efforts to generate interaction include making online forum activities part of the grading system. Students’ attitudes and behavior change significantly when the instructor assigns forum activities as a grading component. We theorize that course material that is organized into logical and understandable components will lead to high levels of interaction between the instructor and students and among students. Thus, we hypothesize:

H1: There will be a positive relationship between perceptions of course structure and student/instructor interaction.

2.1.2 Student Self-Motivation

One of the defining traits of successful students is their apparent ability to motivate themselves, even when they do not have a burning desire to complete a certain task. On the other hand,

less successful students tend to have difficulty in

calling up self-motivation skills, like goal setting,

verbal reinforcement, self-rewards, and punishment

control techniques (Dembo and Eaton, 2000). The

extant literature suggests that students with strong motivation will be more successful and tend to learn more in web-based courses than those with less motivation (Frankola, 2001; LaRose and Whitten, 2000). Students' motivation is a major factor that affects attrition and completion rates in web-based courses, and a lack of motivation is also linked to high dropout rates (Frankola, 2001; Galusha, 1997). It is conceivable that a high level of student motivation is positively related to a high level of

interaction with the instructor and students. Thus, we

hypothesize:

H2: There will be a positive relationship between student motivation and student/instructor interaction.

2.1.3 Students’ Learning Style

We assume that online learning systems may include

less sound or oral components than traditional face-to-face course delivery systems and that online learning systems have a greater proportion of read/write assignment components. Students with visual

learning styles and read/write learning styles may do

better in online courses than their counterparts in

face-to-face courses. There are some empirical

studies that investigated the direct relationships

between interaction and students’ perceived learning outcomes and satisfaction in university e-learning

(Eom et al., 2006). But there are few studies that

explore the interaction construct as a mediating

variable that connects three other attributes (learning

styles, motivation, and course structures).

It is conceivable that there is some association

between styles of learning and the level of

interaction. For example, Gardner’s theory of multiple intelligences categorizes eight different

learning styles (Gardner, 1983). Of these,

interpersonal learners learn better when they work

together, while intrapersonal learners do better in

individual and self-paced projects by working alone.

Research indicates that learning styles can be

incorporated as a key feature for group formation,

which in turn may affect the final results of the tasks

accomplished by them collaboratively (Alfonseca et

al., 2006). This implicitly assumes a positive

association between learning styles and the level of

interaction. Therefore, we hypothesize:

H3: There will be a positive relationship between visual and read/write learning styles and student/instructor interaction.

2.1.4 Instructor

Some of the widely accepted learning models are objectivism, constructivism, collaborativism, cognitive information processing, and socioculturalism (Leidner and Jarvenpaa, 1995). Distance learning can easily break a major assumption of objectivism, namely that the instructor houses all necessary knowledge. For this reason, distance learning systems can utilize many other learning models such as constructivism, collaborativism, and socioculturalism. Constructivism assumes that individuals learn better when they control the pace of learning. Therefore, the instructor supports learner-centered active learning. Under the model of collaborativism, student involvement is critical to learning and the instructor becomes a discussion leader. Socioculturalism models necessitate empowering students with freedom and responsibilities since learning is individualistic.

e-Learning environments demand a transition of

the roles of students and the instructor. The

instructor’s role is to become a facilitator who stimulates, guides and challenges students by empowering them with freedom and responsibility, rather than a lecturer who focuses on the delivery of instruction (Huynh, 2005). The importance of the level of encouragement can be found in the model proposed by Lam (2005). We added two questions to assess the roles of the instructor as facilitator and stimulator: “The instructor was actively involved in facilitating this course” and “The instructor stimulated students to intellectual effort beyond that required by face-to-face courses.”

Therefore, we hypothesize:

H4: There will be a positive relationship between instructor knowledge and facilitation and student/instructor interaction.

H7: There will be a positive relationship between instructor knowledge and facilitation and learning outcomes.

2.1.5 Instructor Feedback

Instructor feedback to the learner is defined as

information a learner receives about his or her

learning process and achievement outcomes (Butler

and Winne, 1995) and it is “one of the most

powerful component in the learning process” (Dick

and Carey, 1990, p. 165). It is intended to improve student performance by informing students how well they are doing and by directing students’ learning efforts. Instructor feedback in a Web-based system ranges from the simplest cognitive feedback (e.g., an exam or assignment with the student’s answer marked wrong) through diagnostic feedback (e.g., an exam or assignment with instructor comments on why the answers are correct or incorrect) to prescriptive feedback (instructor feedback suggesting how the correct responses can be constructed), delivered via replies to student e-mails, graded work with comments, online grade books, and synchronous and asynchronous commentary.

Instructor feedback to students can improve

learner affective responses, increase cognitive skills

and knowledge, and activate metacognition.

Metacognition refers to the awareness and control of

cognition through planning, monitoring, and

regulating cognitive activities (Pintrich et al., 1991).

Metacognitive feedback concerning learner progress

directs the learner’s attention to learning outcomes

(Ley, 1999). When metacognition is activated,

students may become self-regulated learners. They

can set specific learning outcomes and monitor the

effectiveness of their learning methods or strategies

(Chen, 2002; Zimmerman, 1989). Therefore, we

hypothesize:

H5: There will be a positive relationship between instructor feedback and student/instructor interaction.

H8: There will be a positive relationship between instructor feedback and learning outcomes.

2.2 Consequences of Interaction

Interaction between participants in online courses

has been recognized as the most important construct

of the dimensions determining Web-based course

quality. Many studies have shown that interaction is highly correlated with the learning effectiveness of Web-based courses, and most students who reported higher levels of interaction with content, instructor, and peers reported higher levels of satisfaction and higher levels of learning (Moore, 1989; Swan, 2001; Vaverek and Saunders, 1993).

Interaction with the Instructor: The learner-instructor interaction involves direct interaction

between instructor and learner, and may be initiated

by either. Interactions include answering questions

about both course content and organization,

providing personal examples of class material,

demonstrating a sense of humor about the course

material, and, importantly, inviting students to seek

feedback (Arbaugh, 2001; Saltzberg and Polyson, 1995). High levels of learner-instructor interaction are positively related to levels of satisfaction with the course and levels of learning (Arbaugh, 2000; Swan, 2001). Furthermore, Picciano (1998)

discovered that students perceive learning from

online courses to be related to the amount of

discussion actually taking place in them. When

students actively participate in an intellectual

exchange with fellow students and the instructor,

students verbalize what they are learning in a course

and articulate their current understanding (Chi and

VanLehn, 1991). Therefore, we hypothesize:

H6: There will be a positive relationship between interaction and learning outcomes.

The last hypothesis tests a positive association

between learning outcomes and students’

satisfaction. Depending on how the indicators of user satisfaction and learning outcomes are measured, these constructs can be reciprocal. In this study, learning outcomes are measured by the perceived level of learning and the perceived quality of the learning experience in online courses, whereas user satisfaction is measured by students’ willingness to take an online course and to recommend the course taken to other students in the future. Consequently, learning outcomes precede user satisfaction. Therefore, we hypothesize:

H9: There will be a positive relationship between learning outcomes and user satisfaction.

3 SURVEY INSTRUMENT

The survey instrument was designed after

conducting an extensive literature review and

adapting items from the commonly administered

IDEA (Individual Development & Educational

Assessment) student rating systems developed by

Kansas State University (see Appendix A). In an

effort to survey students using technology-enhanced

e-learning systems, we focused on students enrolled

in Web-based courses with no on-campus meetings.

A survey URL and instructions were sent to 1,854

student email addresses that were collected from

student data files associated with every online course

delivered through the online program of a university

in the Midwest of the United States. Three hundred and ninety-seven valid unduplicated responses were

collected from the survey.

4 RESEARCH METHODOLOGY

The hypotheses were tested using a quantitative

survey of satisfaction and learning outcome

perceptions of students who had taken at least one

online course at a large Midwestern university in the

United States. Relationships between variables were

tested using the structural equation modeling (SEM)

tool PLS-Graph version 3.0, build 1126.

4.1 Measurement Model Estimation

The test of the measurement model included an

estimation of the internal consistency and the

convergent and discriminant validity of the

instrument items.

Table 1: Convergent and discriminant validity of the model constructs.

| Construct | Item | Loading | t-statistic# |
|---|---|---|---|
| Course Structure (ic = 0.88, ave = 0.73) | Struc1 | 0.8346 | 14.8330 |
| | Struc2 | 0.8850 | 18.2017 |
| | Struc3 | 0.8324 | 11.7576 |
| Instructor Feedback (ic = 0.93, ave = 0.77) | Feed1 | 0.8722 | 24.2528 |
| | Feed2 | 0.8286 | 18.8518 |
| | Feed3 | 0.9045 | 30.9556 |
| | Feed4 | 0.9035 | 26.8712 |
| Self-Motivation (ic = 0.79, ave = 0.66) | Moti1 | 0.7156 | 7.1801 |
| | Moti2 | 0.8989 | 14.2685 |
| Learning Style (ic = 0.81, ave = 0.67) | Styl1 | 0.8681 | 7.8532 |
| | Styl2 | 0.7707 | 5.4517 |
| Interaction (ic = 0.76, ave = 0.62) | Intr1 | 0.9258 | 17.1161 |
| | Intr2 | 0.6063 | 6.9865 |
| Instructor Knowledge & Facilitation (ic = 0.89, ave = 0.73) | Inst1 | 0.8396 | 17.0887 |
| | Inst2 | 0.9026 | 26.9581 |
| | Inst3 | 0.8125 | 17.9503 |
| User Satisfaction (ic = 0.90, ave = 0.76) | Sati1 | 0.8676 | 28.3807 |
| | Sati2 | 0.8984 | 35.8619 |
| | Sati3 | 0.8398 | 30.6980 |
| Learning Outcomes (ic = 0.91, ave = 0.78) | Outc1 | 0.8678 | 22.5078 |
| | Outc2 | 0.8887 | 33.3322 |
| | Outc3 | 0.8930 | 35.1271 |

Note: ‘ic’ is the internal consistency measure; ‘ave’ is the average variance extracted. # All loadings significant at p < .05.

The reliability of a block of indicators measuring a construct was assessed with two measures: the composite reliability measure of internal consistency and the average variance extracted (AVE). All reliability measures were above the recommended level of 0.70 (Table 1), thus indicating adequate internal consistency (Fornell and Bookstein, 1982; Nunnally, 1978). The AVE scores were also above the minimum threshold of 0.5 (Chin, 1998b; Fornell and Larcker, 1981) and ranged from 0.62 to 0.78 (see Table 1). When AVE is greater than 0.50, the variance shared between a construct and its measures is greater than the error variance. This level was achieved for all of the model constructs.
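The two reliability measures can be recomputed from the loadings in Table 1. The sketch below is illustrative only: it assumes that the reported ‘ic’ is the usual composite reliability computed from standardized loadings (with error variance taken as one minus the squared loading) and that ‘ave’ is the mean squared loading. The function and variable names are our own and are not part of PLS-Graph. Under these assumptions the results should land close to the values reported in Table 1, up to rounding.

```python
from typing import Dict, List

# Standardized outer loadings copied from Table 1.
LOADINGS: Dict[str, List[float]] = {
    "Course Structure": [0.8346, 0.8850, 0.8324],
    "Instructor Feedback": [0.8722, 0.8286, 0.9045, 0.9035],
    "Self-Motivation": [0.7156, 0.8989],
    "Learning Style": [0.8681, 0.7707],
    "Interaction": [0.9258, 0.6063],
    "Instructor Knowledge & Facilitation": [0.8396, 0.9026, 0.8125],
    "User Satisfaction": [0.8676, 0.8984, 0.8398],
    "Learning Outcomes": [0.8678, 0.8887, 0.8930],
}


def composite_reliability(loadings: List[float]) -> float:
    """(sum of loadings)^2 / ((sum of loadings)^2 + sum of error variances).

    Assumes standardized indicators, so each error variance is 1 - loading^2.
    """
    squared_sum = sum(loadings) ** 2
    error_variance = sum(1.0 - l * l for l in loadings)
    return squared_sum / (squared_sum + error_variance)


def average_variance_extracted(loadings: List[float]) -> float:
    """Mean of the squared standardized loadings."""
    return sum(l * l for l in loadings) / len(loadings)


# Reliability summary per construct: (composite reliability, AVE).
RESULTS = {
    name: (composite_reliability(ls), average_variance_extracted(ls))
    for name, ls in LOADINGS.items()
}

if __name__ == "__main__":
    for name, (cr, ave) in RESULTS.items():
        print(f"{name}: ic = {cr:.2f}, ave = {ave:.2f}")
```

Every construct clears both thresholds discussed above (0.70 for internal consistency and 0.50 for AVE) when computed this way.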

Convergent validity is demonstrated when items

load highly (loading >0.50) on their associated

factors. Loadings of 0.5 are considered acceptable if

there are additional indicators in the block for

comparative purposes (Chin, 1998b). Ideally, however, they should be 0.7 or higher. Table 1

shows that with the exception of one item, all

loadings were above 0.7 for the items measuring

each of the eight constructs.

Discriminant validity was first assessed by

examining the cross-loadings of the constructs and

the measures. This analysis revealed that the

correlations of each construct with its measures were

higher than the correlations with any other measures.

Second, the square root of the average variance

extracted (AVE) for each construct was compared

with the correlation between the construct and other

constructs in the model (Chin, 1998b; Fornell and Larcker, 1981). Table 2 shows that the square root of each AVE is larger than any correlation among any pair of constructs, thus indicating discriminant

validity.

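Table 2: Correlation among construct scores (square root of AVE in the diagonal).

| | CS | IF | SM | LS | INT | IKF | US | LO |
|---|---|---|---|---|---|---|---|---|
| CS | .85 | | | | | | | |
| IF | .72 | .87 | | | | | | |
| SM | .26 | .23 | .81 | | | | | |
| LS | .29 | .21 | .25 | .82 | | | | |
| INT | .44 | .59 | .38 | .26 | .79 | | | |
| IKF | .68 | .80 | .25 | .26 | .55 | .85 | | |
| US | .74 | .69 | .36 | .41 | .53 | .71 | .87 | |
| LO | .56 | .49 | .35 | .44 | .45 | .55 | .78 | .88 |

Note: CS = Course Structure; IF = Instructor Feedback; SM = Self-Motivation; LS = Learning Style; INT = Interaction; IKF = Instructor Knowledge & Facilitation; US = User Satisfaction; LO = Learning Outcomes.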
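The comparison between the square root of each AVE and the inter-construct correlations can be checked mechanically. The following is a minimal sketch, assuming the values in Table 2 are entered as a lower-triangular matrix with the square root of AVE on the diagonal; the helper name `fornell_larcker_violations` is hypothetical and simply lists any pair whose correlation reaches or exceeds either construct's diagonal value.

```python
from typing import List, Tuple

CONSTRUCTS = ["CS", "IF", "SM", "LS", "INT", "IKF", "US", "LO"]

# Lower triangle of Table 2; the last entry of each row is sqrt(AVE).
LOWER_TRIANGLE: List[List[float]] = [
    [0.85],
    [0.72, 0.87],
    [0.26, 0.23, 0.81],
    [0.29, 0.21, 0.25, 0.82],
    [0.44, 0.59, 0.38, 0.26, 0.79],
    [0.68, 0.80, 0.25, 0.26, 0.55, 0.85],
    [0.74, 0.69, 0.36, 0.41, 0.53, 0.71, 0.87],
    [0.56, 0.49, 0.35, 0.44, 0.45, 0.55, 0.78, 0.88],
]


def fornell_larcker_violations(
    names: List[str], lower: List[List[float]]
) -> List[Tuple[str, str, float]]:
    """Return construct pairs whose correlation is not below both sqrt(AVE) values."""
    violations = []
    for i, row in enumerate(lower):
        for j in range(i):
            correlation = abs(row[j])
            smaller_diagonal = min(lower[i][i], lower[j][j])
            if correlation >= smaller_diagonal:
                violations.append((names[i], names[j], row[j]))
    return violations


VIOLATIONS = fornell_larcker_violations(CONSTRUCTS, LOWER_TRIANGLE)

if __name__ == "__main__":
    print("Violations:", VIOLATIONS)
```

An empty list means that every construct shares more variance with its own indicators than with any other construct, which is the condition described in the text.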

4.2 Structural Model Results

Consistent with the distribution free, predictive

approach of PLS (Wold, 1985), the structural model

was evaluated using the R² for the dependent

constructs, the Stone-Geisser Q-square test (Geisser, 1975; Stone, 1974) for predictive relevance, and

the size, t-statistics and significance level of the

structural path coefficients. The t-statistics were

estimated using the bootstrap resampling procedure

(100 resamples). The results of the structural model

are summarized in Table 3.
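The logic of a bootstrap t-statistic can be illustrated with a deliberately simplified sketch. It is not the PLS-Graph procedure: it uses synthetic data, a single predictor (for which the standardized path coefficient equals the Pearson correlation), and only the Python standard library, whereas PLS re-estimates the whole model in every resample. The sample size of 397 and the 100 resamples mirror the study; everything else, including the function names, is an assumption made for illustration.

```python
import random
import statistics
from typing import List, Tuple


def pearson_r(x: List[float], y: List[float]) -> float:
    """Pearson correlation; equals the standardized slope with one predictor."""
    mx, my = statistics.fmean(x), statistics.fmean(y)
    sxy = sum((a - mx) * (b - my) for a, b in zip(x, y))
    sxx = sum((a - mx) ** 2 for a in x)
    syy = sum((b - my) ** 2 for b in y)
    return sxy / (sxx * syy) ** 0.5


def bootstrap_t(
    x: List[float], y: List[float], n_resamples: int = 100, seed: int = 0
) -> Tuple[float, float]:
    """Return (estimate, t), where t = estimate / bootstrap standard error."""
    rng = random.Random(seed)
    n = len(x)
    estimate = pearson_r(x, y)
    resampled = []
    for _ in range(n_resamples):
        # Draw n cases with replacement and re-estimate the coefficient.
        idx = [rng.randrange(n) for _ in range(n)]
        resampled.append(pearson_r([x[i] for i in idx], [y[i] for i in idx]))
    standard_error = statistics.stdev(resampled)
    return estimate, estimate / standard_error


# Synthetic scores for illustration only (not the survey data).
_rng = random.Random(42)
x_scores = [_rng.gauss(0.0, 1.0) for _ in range(397)]
y_scores = [0.5 * a + _rng.gauss(0.0, 1.0) for a in x_scores]
beta, t_stat = bootstrap_t(x_scores, y_scores)

if __name__ == "__main__":
    print(f"beta = {beta:.3f}, bootstrap t = {t_stat:.2f}")
```

The t-statistic is the original estimate divided by the standard deviation of the resampled estimates, so a larger number of resamples gives a more stable standard error.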

Table 3: Structural (inner) model results.

| Constructs | Interaction | Learning Outcomes | User Satisfaction |
|---|---|---|---|
| Instructor Knowledge & Facilitation | .151** | .384**** | - |
| Instructor Feedback | .466**** | .077 ns | - |
| Course Structure | -.086 ns | - | - |
| Self-Motivation | .238**** | - | - |
| Learning Style | .088** | - | - |
| Student/Instructor Interaction | - | .189*** | - |
| User Satisfaction | - | - | - |
| Learning Outcomes | - | - | .526**** |

Note: columns are the dependent variables. P-values: **** 0.001, *** 0.01, ** 0.05, ns = not significant.

Effect on Interaction R² = 0.44, Learning Outcomes R² = 0.33, Effect on User Satisfaction R² = 0.61.

The results show that the structural model

explains 43.7 percent of the variance in the

student/instructor interaction construct, 33.4 percent

of the variance in the learning outcomes construct

and 61.2 percent of the variance in the user

satisfaction construct. The percentage of variance

explained for these primary dependent variables is

greater than 10 percent implying satisfactory and

substantive value and predictive power of the PLS

model (Falk and Miller, 1992).

As can be seen from the results, four of the five

antecedent constructs hypothesized to directly affect

student/instructor interaction are significant. The magnitudes of the path coefficients, however, indicate that instructor feedback (β = 0.47, t = 5.83) and self-motivation (β = 0.24, t = 4.90) are stronger predictors of interaction than instructor knowledge and facilitation (β = 0.15, t = 2.09) and learning style (β = 0.09, t = 1.93). Course structure has no significant relationship with student/instructor interaction (β = -0.09, t = 1.46). H1 is therefore rejected while support exists for H2-H5.

Two of the three antecedent constructs hypothesized to directly affect learning outcomes are significant: instructor knowledge/facilitation (β = 0.38, t = 5.37) and student/instructor interaction (β = 0.19, t = 3.02). H6 and H7 are therefore supported. Instructor feedback has no significant impact on learning outcomes (β = 0.08, t = 0.94). H8 is therefore rejected. Finally, the hypothesized direct relationship between learning outcomes and user satisfaction is significant (β = 0.78, t = 41.74). H9 is therefore supported.

Although PLS estimation does not utilize formal indices to assess overall goodness-of-fit (GoF) such as GFI, CFI, chi-square values, NNFI and RMSEA, model fit can be demonstrated by strong factor loadings, high R² values and substantial and statistically significant structural paths (Chin, 1998a; 1998b; Tenenhaus et al., 2005). Tenenhaus et al. (2005) have also developed an additional GoF measure for PLS, based on taking the square root of the product of the average variance extracted across all constructs with multiple indicators and the average R² value of the endogenous constructs. In the current study, the GoF measure is .577, which indicates very good fit (Cohen, 1988).
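As a rough check, the GoF measure can be recomputed from the figures reported in this paper. The sketch below assumes that the communality of each indicator is its squared loading from Table 1, that the average is taken over all indicators (so constructs with more indicators carry more weight), and that the R² values are the three percentages reported above (0.437, 0.334, 0.612). These choices and the function name `goodness_of_fit` are our assumptions; the result should come out close to the reported .577.

```python
import math
from typing import Dict, List

# Standardized loadings from Table 1, grouped by construct.
TABLE1_LOADINGS: Dict[str, List[float]] = {
    "Course Structure": [0.8346, 0.8850, 0.8324],
    "Instructor Feedback": [0.8722, 0.8286, 0.9045, 0.9035],
    "Self-Motivation": [0.7156, 0.8989],
    "Learning Style": [0.8681, 0.7707],
    "Interaction": [0.9258, 0.6063],
    "Instructor Knowledge & Facilitation": [0.8396, 0.9026, 0.8125],
    "User Satisfaction": [0.8676, 0.8984, 0.8398],
    "Learning Outcomes": [0.8678, 0.8887, 0.8930],
}

# R-squared of the endogenous constructs as reported in the text.
R_SQUARED: Dict[str, float] = {
    "Interaction": 0.437,
    "Learning Outcomes": 0.334,
    "User Satisfaction": 0.612,
}


def goodness_of_fit(
    loadings: Dict[str, List[float]], r_squared: Dict[str, float]
) -> float:
    """sqrt(mean indicator communality * mean R-squared).

    Communality is approximated by the squared standardized loading.
    """
    communalities = [l * l for block in loadings.values() for l in block]
    mean_communality = sum(communalities) / len(communalities)
    mean_r2 = sum(r_squared.values()) / len(r_squared)
    return math.sqrt(mean_communality * mean_r2)


GOF = goodness_of_fit(TABLE1_LOADINGS, R_SQUARED)

if __name__ == "__main__":
    print(f"GoF = {GOF:.3f}")
```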

In addition to examining R², the PLS model was also evaluated by looking at the Q-square for predictive relevance for the model constructs. Q-square is a measure of how well the observed values

are reproduced by the model and its parameter

estimates. Q-squares greater than 0 indicate that the

model has predictive relevance, whereas Q-squares

less than 0 suggest that the model lacks predictive

relevance. In the current study, Q-square values are

0.15 for student/instructor interaction, 0.09 for

learning outcomes and 0.42 for user satisfaction.
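For reference, the Q-square statistic obtained from a blindfolding procedure is commonly written as follows. The notation is ours and is given only as a sketch of the usual definition: $D$ is the omission distance, $E_D$ is the sum of squared prediction errors for the omitted data points, and $O_D$ is the sum of squared errors obtained when the mean is used as the prediction.

$$
Q^2 = 1 - \frac{\sum_{D} E_D}{\sum_{D} O_D}
$$

When the model predicts the omitted values better than the mean does, the ratio is below 1 and $Q^2$ is positive, which is the sense in which values greater than 0 indicate predictive relevance.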

5 CONCLUSIONS

This study, applying structural equation modeling,

examines the effects of interactions and instructor

feedback and facilitation on students' satisfaction

and their perceived learning outcomes in the context

of university online courses. Independent variables

included in the study are course structure, self-motivation, learning style, and interaction. A total of

397 valid unduplicated responses from students who

have completed at least one online course at a

university in the Midwest were used to examine the

structural model.

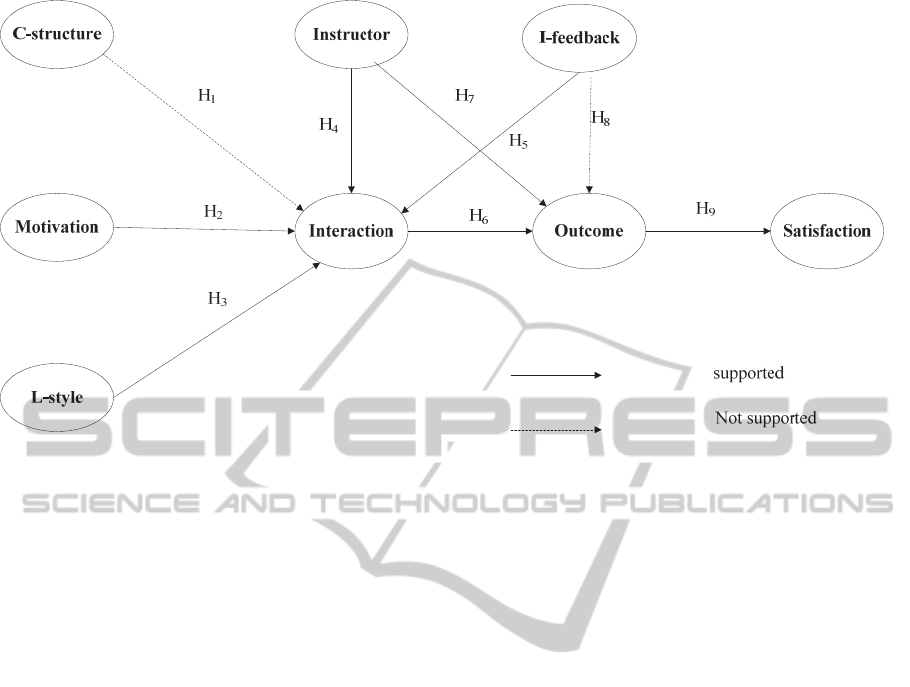

Figure 2: Structural model results.

The results indicate that four of the five

antecedent constructs hypothesized to directly affect

student/instructor interaction are significant. The magnitudes of the path coefficients, however, indicate

that instructor feedback and self-motivation are

stronger predictors of interaction relative to

instructor knowledge and facilitation and learning

style. Course structure has no significant relationship

with student/instructor interaction. Two of the three

antecedent constructs hypothesized to directly affect

learning outcomes are significant – instructor

knowledge/facilitation and student/instructor

interaction. Instructor feedback has no significant

impact on learning outcomes. Finally, the

hypothesized direct relationship between learning

outcomes and user satisfaction is significant.

One of the crucial research questions we

attempted to answer was the relationship between

interaction and perceived learning outcomes.

Contrary to previous research (LaPointe and Gunawardena, 2004), the study of Eom et al. (2006) found no support for a positive relationship between interaction and perceived learning outcomes. However, the new research model (Figure 1) in the current study presents interaction as the key mediating variable along with instructor feedback and facilitation. Consequently, interactions with the instructor and among students are a strong predictor of student learning outcomes, owing to the combination of the direct effects of interaction on students’ learning outcomes and the indirect effects of course structure, motivation, and learning styles on students’ learning outcomes.

Our research attempted to include a mediating

variable (interaction) to connect course structure and

learning outcomes. A possible explanation for the

statistically insignificant relationship between online

course structure and perceived learning outcomes is

that the indicators of course structure may not have

included expected class participation in the form of

online conferencing systems.

Our results indicated that instructor feedback and

self-motivation are stronger predictors of interaction

relative to instructor knowledge and facilitation and

learning style. Therefore, self-motivation is

indirectly affecting students' learning outcomes via

the interaction construct.
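
In path-analytic terms, an indirect effect of this kind is conventionally computed as the product of the two path coefficients involved. The notation below is introduced here for illustration only (the symbols are not used elsewhere in the paper): SM denotes self-motivation, INT interaction, and LO learning outcomes. No numerical values are substituted, since the coefficients are reported only in Figure 2.

```latex
\[
  \text{Indirect effect}_{\mathrm{SM} \rightarrow \mathrm{LO}}
  \;=\;
  \beta_{\mathrm{SM} \rightarrow \mathrm{INT}}
  \times
  \beta_{\mathrm{INT} \rightarrow \mathrm{LO}}
\]
```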

This research, along with the study of Eom et al.

(2006), has made a critical contribution in

e-learning empirical research by identifying two

critical human factors that make e-learning a

superior mode of instruction. In our view, this is a

significant shift of direction in e-learning empirical

research. Our research provides strong empirical

evidence that online education is not a universal

innovation applicable to all types of instructional

situations. Online education can be a superior mode

of instruction if it is targeted to learners with specific

learning styles (visual and read/write learning styles)

(Eom et al., 2006) and student personality

characteristics (Schniederjans and Kim, 2005) and

with timely, helpful instructor feedback of various

types. Proper management of human factors can

change the dynamics of the e-learning process to

produce e-learning outcomes that surpass those provided

in classroom-based settings. Technology in e-learning

is just an instructional tool. We conclude

that the instructor's facilitating role and feedback are the

most critical factors in e-learning: they change the

e-learning process and the learner-instructor

relationship positively, making e-learning

a superior mode of instruction.

REFERENCES

Akyol, Z. and Garrison, D. R. (2011) In Student

Satisfaction and Learning Outcomes in e-Learning:

An introduction to empirical research (Eds, Eom, S.

B. and Arbaugh, J. B.) Information Science Reference

(an imprint of IGI Global), Hershey, PA, pp. 23-35.

Alexander, M. W., Perrault, H., Zhao, J. J. and Waldman,

L. (2009) In Journal of Educators Online, Vol. 6.

Alfonseca, E., Carro, R. M., Martín, E., Ortigosa, A. and

Paredes, P. (2006) User Modeling and User-Adapted

Interaction, 16, 377-401.

Arbaugh, J. B. (2000) Business Communication Quarterly,

63, 9-18.

Arbaugh, J. B. (2001) Business Communication Quarterly,

64, 42-54.

Arbaugh, J. B., Godfrey, M. R., Johnson, M., Pollack, B.

L., Niendorf, B. and Wresch, W. (2009) Internet and

Higher Education, 12, 71-87.

Bonk, C. J. (2009) The World is Open: How Web

Technology is Revolutionizing Education Jossey-Bass,

San Francisco, CA.

Butler, D. L. and Winne, P. H. (1995) Review of

Educational Research, 65, 245-281.

Chen, C. S. (2002) Information Technology, Learning, and

Performance Journal, 20, 11-25.

Chi, M. T. and VanLehn, K. A. (1991) The Journal of

Learning Sciences, 1, 69-105.

Chin, W. W. (1998a) MIS Quarterly, 22, VII-XVI.

Chin, W. W. (1998b) In Modern Methods for Business

Research (Ed, Marcoulides, G. A.) Lawrence Erlbaum

Associates, Mahwah, NJ, pp. 295-336.

Cohen, J. (1988) Statistical Power Analysis for the

Behavioral Sciences Lawrence Erlbaum Associates,

publishers, Hillsdale, NJ.

Dembo, M. and Eaton, M. (2000) The Elementary School

Journal, 100, 473-490.

Dick, W. and Carey, L. (1990) The Systematic Design of

Instruction, Harper Collins Publishers, New York,

NY.

Dunn, R., Beaudry, J. and Klavas, A. (1989) Educational

Leadership, 46, 50-58.

Eom, S. B., Ashill, N. and Wen, H. J. (2006) Decision

Sciences Journal of Innovative Education, 4, 215-236.

Falk, R. F. and Miller, N. B. (1992) A primer for soft

modeling, The University of Akron Press, Akron, OH.

Fornell, C. R. and Bookstein, F. L. (1982) In A second

Generation of multivariate analysis (Ed, Fornell, C.)

Praeger, New York, pp. 289-394.

Fornell, C. R. and Larcker, D. (1981) Journal of

Marketing Research, 18, 39-50.

Frankola, K. (2001) Workforce, 80, 53-60.

Galusha, J. M. (1997) Interpersonal Computing and

Technology: An Electronic Journal for the 21st

Century, 5, 6-14.

Gardner, H. (1983) Frames of Mind: The Theory of

Multiple Intelligences, Basic Books, New York.

Garrison, D. R. (2009) In Encyclopedia of distance and

online learning (Ed, Howard, C.) IGI Global,

Hershey, PA, pp. 352-355.

Garrison, D. R. and Anderson, T. (2003) E-learning in the

21st century: A framework for research and practice,

Routledge/Falmer, London, UK.

Geisser, S. (1975) Journal of the American Statistical

Association, 70, 320-328.

Hayes, S. K. (2007) MERLOT Journal of Online Teaching

and Learning, 3, 460-465.

Haytko, D. L. (2001) Marketing Education Review, 11,

27-39.

Huynh, M. Q. (2005) Journal of Electronic Commerce in

Organizations, 3, 33-45.

Kellogg, D. L. and Smith, M. A. (2009) Decision Sciences

Journal of Innovative Education, 7, 433-454.

Lam, W. (2005) Journal of Electronic Commerce in

Organizations, 3, 18-41.

LaPointe, D. K. and Gunawardena, C. N. (2004) Distance

Education, 25, 83-106.

LaRose, R. and Whitten, P. (2000) Communication

Education, 49, 320-338.

Leidner, D. E. and Jarvenpaa, S. L. (1995) MIS Quarterly,

19, 265-291.

Ley, K. (1999) Campus-Wide Information Systems, 16,

63-70.

Liu, X., Magjuka, R. J., Bonk, C. J. and Lee, S. (2007)

Quarterly Review of Distance Education, 8, 9-24.

Marriott, N., Marriott, P. and Selwyn, N. (2004)

Accounting Education, 13, 117-130.

Means, B., Toyama, Y., Murphy, R., Bakia, M. and Jones,

K. (2009) U.S. Department of Education, Washington,

DC.

Moore, M. G. (1989) The American Journal of Distance

Education, 3, 1-6.

Moore, M. G. (1991) The American Journal of Distance

Education, 5, 1-6.

Morgan, G. and Adams, J. (2009) Journal of Interactive

Learning Research, 20, 129-155.

Northrup, P. T. (2002) The Quarterly Review of Distance

Education, 3, 219-226.

Nunnally, J. C. (1978) Psychometric Theory, McGraw-

Hill, New York.

Peltier, J. W., Drago, W. and Schibrowsky, J. A. (2003)

Journal of Marketing Education, 25, 260-276.

Picciano, A. (1998) Journal of Asynchronous Learning

Networks, 12, 1-14.

Piccoli, G., Ahmad, R. and Ives, B. (2001) MIS Quarterly,

25, 401-426.

Pintrich, P. R., Smith, D. A., Garcia, T. and McKeachie,

W. J. (1991) A manual for the use of the Motivated

Strategies for Learning Questionnaire (MSLQ),

National Center for Research to Improve

Postsecondary Teaching and Learning, Ann Arbor:

University of Michigan.

Saltzberg, S. and Polyson, S. (1995) Syllabus, 9, 26-28.

Sarker, S. and Nicholson, J. (2005) Informing Science, 8,

55-73.

Schniederjans, M. J. and Kim, E. B. (2005) Decision

Sciences Journal of Innovative Education, 3, 205-221.

Sitzmann, T., Kraiger, K., Stewart, D. and Wisher, R.

(2006) Personnel Psychology, 59, 623-664.

Stone, M. (1974) Journal of The Royal Statistical Society,

36, 111-147.

Swan, K. (2001) Distance Education, 22, 306-331.

Tanner, J. R., Noser, T. C. and Totaro, M. W. (2009)

Journal of Information Systems Education, 20, 29-40.

Tenenhaus, M., Vinzi, V. E., Chatelin, Y.-M. and Lauro,

C. (2005) Computational Statistics and Data Analysis,

48, 159-205.

Vaverek, K. A. and Saunders, C. (1993) Journal of

Educational Technology Systems, 22, 23-39.

Wilkes, R. B., Simon, J. C. and Brooks, L. D. (2006)

Journal of Information Systems Education, 17, 131-

140.

Wold, H. (1985) In Measuring The Unmeasurable (Eds,

Nijkamp, P., Leitner, H. and Wrigley, N.) Martinus

Nijhoff, Dordrecht, pp. 221-252.

Zimmerman, B. J. (1989) In Self regulated learning and

academic achievement: Theory, research, and practice

(Eds, Zimmerman, B. J. and Schunk, D. H.) Springer-

Verlag, New York, pp. 1-25.

APPENDIX

Instructor

Inst1 = The instructor was very knowledgeable

about the course

Inst2 = The instructor was actively involved in

facilitating this course

Inst3 = The instructor stimulated students to

intellectual effort beyond that required by face-to-

face courses.

Course Structure

Struc1 = The overall usability of the course Web site

was good.

Struc2 = The course objectives and procedures were

clearly communicated.

Struc3 = The course material was organized into

logical and understandable components.

Feedback

Feed1 = The instructor was responsive to student

concerns.

Feed2 = The instructor provided timely feedback

on assignments, exams, or projects.

Feed3 = The instructor provided helpful timely

feedback on assignments, exams, or projects.

Feed4 = I felt as if the instructor cared about my

individual learning in this course.

Self-Motivation

Moti1 = I am goal directed, if I set my sights on a

result, I usually can achieve it.

Moti2 = I put forth the same effort in on-line courses

as I would in a face-to-face course.

Learning Style

Styl1 = I prefer to express my ideas and thoughts in

writing, as opposed to oral expression.

Styl2 = I understand directions better when I see a

map than when I receive oral directions.

Interaction

Intr1 = I frequently interacted with the instructor in

this on-line course.

Intr2 = I frequently interacted with other students in

this on-line course.

OUTPUTS

User Satisfaction

Sati1 = The academic quality was on par with face-

to-face courses I’ve taken.

Sati2 = I would recommend this course to other

students.

Sati3 = I would take an on-line course at Southeast

again in the future.

Learning Outcomes

Outc1 = I feel that I learned as much from this

course as I might have from a face-to-face version of

the course.

Outc2 = I feel that I learn more in on-line courses

than in face-to-face courses.

Outc3 = The quality of the learning experience in

on-line courses is better than in face-to-face courses.
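
For readers who wish to work with the instrument programmatically, the item-to-construct mapping above can be written as a lookup table. This is a sketch under stated assumptions: the dictionary name `MEASUREMENT_MODEL`, the helper `composite_scores`, and the use of an unweighted item mean are illustrative choices made here. PLS estimates its own indicator weights, so the plain mean is only a rough stand-in, and the sample response is invented purely to show the call.

```python
from statistics import mean

# Construct -> indicator codes, copied from the appendix listing.
MEASUREMENT_MODEL = {
    "Instructor": ["Inst1", "Inst2", "Inst3"],
    "Course Structure": ["Struc1", "Struc2", "Struc3"],
    "Feedback": ["Feed1", "Feed2", "Feed3", "Feed4"],
    "Self-Motivation": ["Moti1", "Moti2"],
    "Learning Style": ["Styl1", "Styl2"],
    "Interaction": ["Intr1", "Intr2"],
    "User Satisfaction": ["Sati1", "Sati2", "Sati3"],
    "Learning Outcomes": ["Outc1", "Outc2", "Outc3"],
}


def composite_scores(response):
    """Average the answered items of each construct for one respondent.

    `response` maps indicator codes to numeric answers. Constructs with no
    answered items are omitted. The unweighted mean is an assumption made
    for illustration; it is not the PLS weighting used in the study.
    """
    scores = {}
    for construct, items in MEASUREMENT_MODEL.items():
        answered = [response[code] for code in items if code in response]
        if answered:
            scores[construct] = mean(answered)
    return scores


if __name__ == "__main__":
    # Hypothetical answers; the response scale is not specified here.
    example = {"Intr1": 4, "Intr2": 2, "Moti1": 5, "Moti2": 5}
    print(composite_scores(example))
```

Keeping the mapping in one dictionary makes it easy to check that every survey column belongs to exactly one construct before any model is estimated.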

ICEIS 2013 - 15th International Conference on Enterprise Information Systems