Interactive Narration Requires Interaction and Emotion

A. Pauchet¹, F. Rioult², E. Chanoni³, Z. Ales¹ and O. Şerban¹

¹ INSA Rouen, LITIS, Rouen, France

² Université de Caen, Greyc, Caen, France

³ Université de Rouen, Psy-NCA, Rouen, France

Keywords:

Dialogue Modelling, Knowledge Extraction, Narrative Embodied Conversational Agent.

Abstract:

This paper shows how interaction is essential for storytelling with a child. A corpus of narrative dialogues

between parents and their children was coded with a mentalist grid. The results of two modelling methods

were analysed by an expert in parent-child dialogue analysis. The extraction of dialogue patterns reveals

regularities explaining the character’s emotion. Results showed that the most efficient models contain at least

one request for attention and/or emotion.

1 INTRODUCTION

Designing a dialogue model is a difficult and often multidisciplinary task. It involves many components: multi-modal input processing, natural language understanding and generation, dialogue management, emotion modelling, etc. In particular, multi-modal and affective dialogue management remains inefficient in Embodied

Conversational Agents (ECA) (Cassell et al., 2000),

even though this aspect is essential for interaction

(Swartout et al., 2006). With the emergence of participative digital storytelling systems, situations in which a child interacts with a humanoid agent are becoming more common. The dialogue model embedded in an ECA dedicated to interactive storytelling should be designed according to the child’s socio-cognitive and language skills.

We aim at designing a method for modelling the

dialogue between parents and children and tools to

ease the extraction of regularities from interactive di-

alogues. We therefore propose hints to guide an inter-

active narrative session as well as dialogue patterns

extracted from the corpora as a model of dialogue for

narrative ECAs.

2 METHOD AND MATERIAL

The method proposed to model the dialogue is described in (Ales et al., 2012). It consists of four steps: 1) a corpus of dialogues is collected and digitized; 2) a transcription and coding step produces raw data with various levels of detail (speaking slots, utterances, onomatopoeia, pauses, ...) depending on the phenomena and characteristics which the model must exhibit; 3) knowledge and regularities are extracted from the utterances coded during the previous step; this knowledge consists of the regularities of the dialogues and constitutes the model; 4) finally, the model is exploited for interactive storytelling.

Corpus of Narrative Dialogues

Modelling storytelling dialogues requires knowledge extraction from a corpus of non-mediated parent-child storytelling dialogues. In this study, 30 dialogues between parents and their children (aged 3, 4 and 5) were recorded during emotional storytelling situations. These recordings were transcribed and coded with a mentalist grid (Chanoni, 2009) to capture information about the mental states (beliefs, emotions, ...) conveyed by the various utterances.

Matrix Representation of Dialogues

As outlined by Bunt, dialogue management involves

multilevel aspects (Bunt, 2011). To design a dia-

logue model that supports multidimensionality, a ma-

trix representation is chosen for annotations. Each

utterance is characterised by an annotation vector,

whose components match the different coding dimensions. A dialogue is represented by a matrix: one row per utterance, one column per coding dimension.

To illustrate this two-dimensional representation,

Table 1 presents an example of encoded parent-child

dialogue, extracted from the collected corpus. Each

utterance is characterised by a line number, a speaker

(P: parent, C: child), a transcription and its encoding annotations.

Pauchet A., Rioult F., Chanoni E., Ales Z. and Şerban O. Interactive Narration Requires Interaction and Emotion. DOI: 10.5220/0004259605270530. In Proceedings of the 5th International Conference on Agents and Artificial Intelligence (ICAART-2013), pages 527-530. ISBN: 978-989-8565-39-6. Copyright © 2013 SCITEPRESS (Science and Technology Publications, Lda.)

Table 1: 2D annotations of a narrative dialogue between a parent and his/her child.

| Line | Speaker | Utterance | Annotations |
|------|---------|-----------|-------------|
| 31 | P | Who could have stolen the crown? | Q - F - - |
| 32 | C | The crown, it’s in! | A - F - - |
| 33 | P | Do you believe it? | Q H K - - |
| 34 | C | Yes | A - F - - |
| 35 | P | But Babar doesn’t know that it’s in | A P N O J |
| 36 | P | So he says that the crown is a bomb | A P N C J |

The annotation grid corresponds to the follow-

ing coding scheme: Column 1: an (A)ffirmation, a

(Q)uery, a request for paying attention to the story

(D), or a demand for general attention (G). Column 2:

reference of the utterance. It can refer to the character

(P), the interlocutor (H) or the speaker (R). Column

3: an (E)motion, a (V)olition, an observable or a non-

observable cognition (B or N), an epistemic statement

(K), an assumption (Y) or a (S)urprise. The surprise is

distinguished from other emotions because of its link

with the incidental belief. Columns 4 and 5: explanations with cause / (C)onsequence, (O)pposition or empathy (M), which can be applied either to explain the story (J), or to specify a situation with a personal context (F).
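As an illustration of the matrix representation, the following minimal sketch encodes the excerpt of Table 1 as a list of annotation vectors. The names `TABLE_1`, `to_matrix` and `column` are hypothetical helpers introduced here, and the dash is assumed to mean that no code was assigned in a column; this is not the authors' implementation.

```python
# Rows of Table 1: (line number, speaker, utterance, annotations).
# The layout of this structure is an assumption made for illustration.
TABLE_1 = [
    (31, "P", "Who could have stolen the crown?", "Q - F - -"),
    (32, "C", "The crown, it's in!", "A - F - -"),
    (33, "P", "Do you believe it?", "Q H K - -"),
    (34, "C", "Yes", "A - F - -"),
    (35, "P", "But Babar doesn't know that it's in", "A P N O J"),
    (36, "P", "So he says that the crown is a bomb", "A P N C J"),
]


def to_matrix(rows):
    """Return one annotation vector per utterance ('-' means no code)."""
    return [annotation.split() for (_, _, _, annotation) in rows]


def column(matrix, j):
    """Return coding dimension j (0-based) for every utterance."""
    return [row[j] for row in matrix]


matrix = to_matrix(TABLE_1)
print(len(matrix), "utterances x", len(matrix[0]), "coding dimensions")
print("Column 1:", column(matrix, 0))
```

Each row of `matrix` is the annotation vector of one utterance, and `column(matrix, 0)` gives the first coding dimension (affirmation, query or request for attention) across the whole excerpt.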

3 KNOWLEDGE EXTRACTION

Dialogue Pattern Extraction

With our matrix representation, a dialogue pattern is

defined as a set of annotations which occurs in several

dialogues. The method designed to extract significant

dialogue patterns consists in a regularity extraction

step based on matrix alignment using dynamic pro-

gramming and a clustering step using machine learn-

ing heuristics to group and select the recurrent dia-

logue patterns. The clustering process is applied on

a similarity graph computed during the matrix align-

ment.

The method for extracting two-dimensional patterns is a generalisation of the local string edit distance. The edit distance between two strings $S_1$ and $S_2$ corresponds to the minimal cost of the three elementary edit operations (insertion, deletion and substitution of characters) for converting $S_1$ to $S_2$. Two-dimensional pattern extraction corresponds to matrix alignment. A local alignment of two matrices $M_1$ and $M_2$, of size $m_1 \times n_1$ and $m_2 \times n_2$ respectively, consists in finding the portions of $M_1$ and $M_2$ which are the most similar. To this end, a four-dimensional matrix $T$ of size $(m_1 + 1) \times (n_1 + 1) \times (m_2 + 1) \times (n_2 + 1)$ is computed, such that $T[i][j][k][l]$ is equal to the local edit distance between $M_1[0..i-1][0..j-1]$ and $M_2[0..k-1][0..l-1]$ for all $i \in \llbracket 1, m_1 - 1 \rrbracket$, $j \in \llbracket 1, n_1 - 1 \rrbracket$, $k \in \llbracket 1, m_2 - 1 \rrbracket$ and $l \in \llbracket 1, n_2 - 1 \rrbracket$. In our heuristic, the calculation of $T$ is obtained by the minimisation of a recurrence formula. Once $T$ is computed, the best local alignment is found from the position of the maximal value in $T$, through a trace-back algorithm to infer the characters which are part of the alignment. Figure 1, commented in Section 4, presents an example of alignment extracted from the corpus. Details about the two-dimensional pattern extraction algorithm can be found in (Lecroq et al., 2012).
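The one-dimensional base case mentioned above, the edit distance between two sequences, can be sketched with the classical dynamic programming recurrence. This is only the textbook string version with unit costs assumed by default; it is not the four-dimensional matrix alignment of (Lecroq et al., 2012), and the function name and cost parameters are illustrative.

```python
def edit_distance(s1, s2, insert_cost=1, delete_cost=1, substitute_cost=1):
    """Minimal cost of insertions, deletions and substitutions turning s1 into s2."""
    m, n = len(s1), len(s2)
    # d[i][j] = edit distance between s1[:i] and s2[:j]
    d = [[0] * (n + 1) for _ in range(m + 1)]
    for i in range(1, m + 1):
        d[i][0] = i * delete_cost
    for j in range(1, n + 1):
        d[0][j] = j * insert_cost
    for i in range(1, m + 1):
        for j in range(1, n + 1):
            same = s1[i - 1] == s2[j - 1]
            d[i][j] = min(
                d[i - 1][j] + delete_cost,       # delete s1[i-1]
                d[i][j - 1] + insert_cost,       # insert s2[j-1]
                d[i - 1][j - 1] + (0 if same else substitute_cost),
            )
    return d[m][n]


# Works on any sequences, e.g. strings or lists of annotation vectors.
print(edit_distance("kitten", "sitting"))
rows_a = [("Q", "-", "F"), ("A", "-", "F")]
rows_b = [("Q", "H", "K"), ("A", "-", "F")]
print(edit_distance(rows_a, rows_b))
```

Because the function only compares elements for equality, whole annotation rows can be treated as single symbols; the two-dimensional method goes further by also aligning along the coding dimensions.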

The matrix alignment algorithm extracts the patterns in pairs. To determine the importance of each pattern, we group them using various standard clustering heuristics. The idea is that large clusters of patterns represent behaviours which are commonly adopted by humans, whereas small clusters tend to contain marginal patterns. A matrix of similarities between patterns is computed through a global edit distance applied to all pairs of selected patterns. This similarity matrix is used as input for the clustering heuristics.
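The pipeline described above (pairwise distances, then clustering) can be sketched as follows. The normalisation of the distance into a similarity and the threshold-based grouping by connected components are assumptions chosen for brevity; they stand in for the unspecified "standard clustering heuristics" and are not the authors' method.

```python
from itertools import combinations


def edit_distance(a, b):
    """Global edit distance with unit costs, computed row by row."""
    prev = list(range(len(b) + 1))
    for i, x in enumerate(a, 1):
        cur = [i]
        for j, y in enumerate(b, 1):
            cur.append(min(prev[j] + 1, cur[j - 1] + 1, prev[j - 1] + (x != y)))
        prev = cur
    return prev[-1]


def similarity_matrix(patterns):
    """Similarity in [0, 1]: 1 minus the distance divided by the longer length."""
    n = len(patterns)
    sim = [[1.0] * n for _ in range(n)]
    for i, j in combinations(range(n), 2):
        longest = max(len(patterns[i]), len(patterns[j])) or 1
        sim[i][j] = sim[j][i] = 1.0 - edit_distance(patterns[i], patterns[j]) / longest
    return sim


def threshold_clusters(sim, threshold):
    """Group indices linked by a similarity >= threshold (connected components)."""
    parent = list(range(len(sim)))

    def find(x):
        while parent[x] != x:
            parent[x] = parent[parent[x]]  # path halving
            x = parent[x]
        return x

    for i, j in combinations(range(len(sim)), 2):
        if sim[i][j] >= threshold:
            parent[find(i)] = find(j)
    groups = {}
    for i in range(len(sim)):
        groups.setdefault(find(i), []).append(i)
    return sorted(groups.values(), key=len, reverse=True)  # largest cluster first


patterns = [["QF", "AF"], ["QF", "AF", "AF"], ["APNOJ", "APNCJ"]]
clusters = threshold_clusters(similarity_matrix(patterns), threshold=0.5)
print(clusters)
```

Sorting clusters by size mirrors the idea that large clusters correspond to commonly adopted behaviours and small ones to marginal patterns.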

The method was tested on the corpus of narrative dialogues. During the extraction phase, 1740 dialogue patterns were collected.

Predicting the Interaction of the Child

As our goal is to build a dialogue model dedicated to narrative ECAs that stimulates child interaction, we have to model how the child’s interaction arises, focusing on event prediction. In other words, we look for sequences of dialogue events leading to the child’s interaction. We split the data into turns, each turn being a sequence of the parent’s assertions or questions followed by the child’s interaction. The problem therefore consists in predicting the end of each turn.

The matrices that encode dialogues are considered

as sequences of features, each sequence ending with

the child’s interaction. For instance, the sequences

corresponding to Table 1 are: < (QF) >, < (QHK) >, < (APNOJ)(APNCJ) >.
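A minimal sketch of this splitting step, applied to the rows of Table 1, is given below. It assumes that each parent utterance contributes its annotation codes with the dashes removed, and that a child utterance closes the current sequence; `parent_sequences` is a hypothetical helper, not the authors' code.

```python
def parent_sequences(rows):
    """Split a coded dialogue into sequences of parent utterances.

    rows: list of (speaker, annotations) pairs, speaker being "P" or "C".
    A sequence is closed as soon as the child speaks.
    """
    sequences, current = [], []
    for speaker, annotations in rows:
        if speaker == "P":
            # Keep only the codes actually assigned, e.g. "Q - F - -" -> "QF"
            current.append(annotations.replace("-", "").replace(" ", ""))
        elif current:
            sequences.append(current)
            current = []
    if current:
        # Trailing parent utterances, not yet followed by the child in the excerpt
        sequences.append(current)
    return sequences


table_1 = [
    ("P", "Q - F - -"),
    ("C", "A - F - -"),
    ("P", "Q H K - -"),
    ("C", "A - F - -"),
    ("P", "A P N O J"),
    ("P", "A P N C J"),
]
print(parent_sequences(table_1))
```

The last sequence is kept even though the excerpt stops before the child's next interaction, which matches the way the excerpt is listed in the text.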

The mining algorithm extracts episodes by recursive projections, in a greedy manner and without candidate enumeration. The combinatorial explosion is limited by two anti-monotone constraints: the support of the currently computed episode (the number of sequences it appears in) and the average distance (in utterances) to the end of the sequences supporting it.

As the mining process is directed from the end of

the sequences to their beginning, not all the computed

episodes are relevant for the prediction of the end.

For instance, if each sequence begins and ends with

a (Q)uestion, the above algorithm will give < (Q) >

as an end predictor, while it is also a good predictor

for the beginning. To avoid these bad cases, the aver-

age length of each computed episode has to be taken

into account. If it is too small, the episode is not kept.

This process ensures that the mined regularities are

relevant for the prediction of the child’s interaction.
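The two measures used to filter episodes, support and average distance to the end of the supporting sequences, can be illustrated with the sketch below. It assumes that an episode is an ordered tuple of single-letter codes, matched as a subsequence of utterances as close to the end as possible; these definitions and the helper names are assumptions, and the recursive projection mining itself is not reproduced.

```python
def distance_to_end(sequence, episode):
    """Utterances remaining after the latest occurrence of a non-empty episode.

    sequence: list of code strings, one per utterance (e.g. "APNOJ").
    episode:  tuple of single-letter codes that must occur in this order.
    Returns None when the sequence does not contain the episode.
    """
    pos = len(sequence)
    end_distance = None
    for code in reversed(episode):  # match from the end of the sequence
        pos -= 1
        while pos >= 0 and code not in sequence[pos]:
            pos -= 1
        if pos < 0:
            return None
        if end_distance is None:  # position of the episode's last event
            end_distance = len(sequence) - 1 - pos
    return end_distance


def support_and_distance(sequences, episode):
    """Return (number of supporting sequences, average distance to their end)."""
    found = (distance_to_end(s, episode) for s in sequences)
    distances = [d for d in found if d is not None]
    support = len(distances)
    average = sum(distances) / support if support else None
    return support, average


sequences = [["QF"], ["QHK"], ["APNOJ", "APNCJ"], ["APNOJ", "QF", "AF"]]
print(support_and_distance(sequences, ("Q",)))
print(support_and_distance(sequences, ("J", "Q")))
```

An episode would then be kept only if its support is high enough and its average distance to the end is small enough; the actual thresholds are not given in the text.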

4 ANALYSIS OF THE MODELS

Patterns of Dialogue

Figure 1 presents an example of one pattern align-

ment appearing in two dialogues. This pattern shows

that parents firstly talked about the cause or the con-

sequence of the character’s behaviour (P, C, J), with-

out reference to any mental state. After assertions or

descriptive questions, parents insisted on the justifi-

cation of the character’s behaviour (line 6), directly

with relation to the emotional state of the character

(line 7). Finally, the parent checked whether the child understood, by asking questions or by requesting his/her attention (line 8).

Figure 1: Example of dialogue pattern alignment.

This pattern demonstrates that naming an emotion is not enough to explain it. The pattern describes the link between the character’s behaviour and the mental state, the latter explaining the former.

Prediction of Interaction

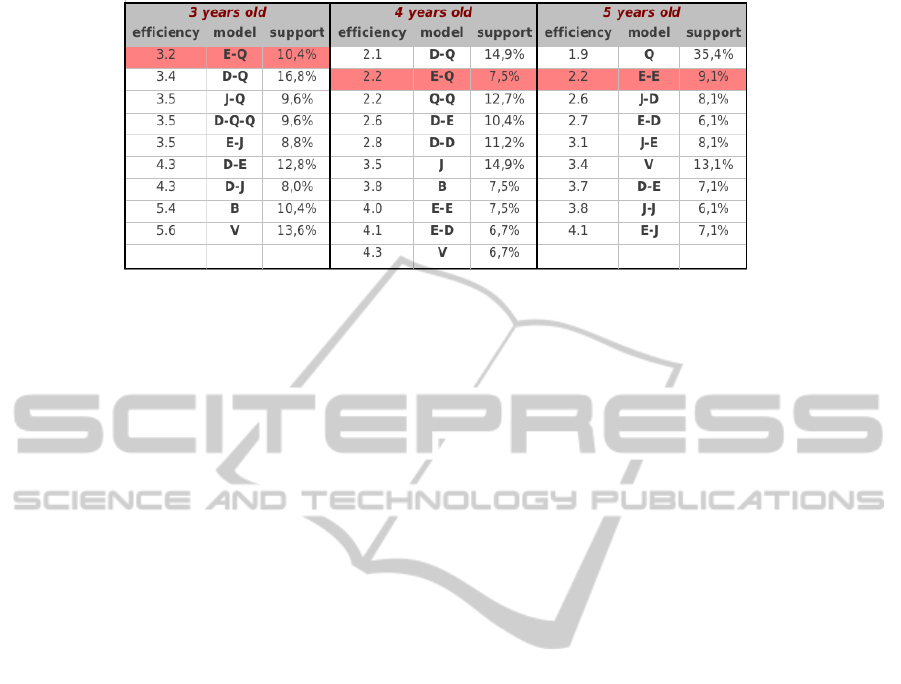

Table 2 sums up the models for the child’s interactions. For each age, the models are characterised by their efficiency (the average number of sentences between the model and the child’s interaction: the more efficient a sequence is, the fewer sentences occur before the child’s interaction) and their support (the percentage of times the model appears).

We highlight the following important facts: 1) Regardless of age, sequences with justifications were frequently associated with various indexes (emotion, request for attention or question). The child’s interaction came 3.1 to 4.3 sentences after the model. 2) The delay before the child’s interaction decreased with age, from 3.2 to 1.9 sentences. 3) The number of sequences with emotion is roughly equivalent for all ages. Nevertheless, the older the child is, the more varied the sequences of emotion are. Complex sequences (emotion and justification: J-E or E-J) appear only with the oldest children. 4) Apart from requests for attention, the most efficient models (in red in Table 2) contained emotion (E-Q or E-E).

5 DIALOGUE MODELLING FOR NARRATIVE ECAS

ECAs are autonomous and anthropomorphic animated characters with multi-modal communication skills (Cassell et al., 2000). Greta (Pelachaud, 2009) and the European project SEMAINE (Schroder, 2010) provide good examples of current capabilities of ECAs. Several approaches exist for the dialogue systems and models that could be integrated into ECAs. The finite-state approach (e.g. (McTear, 2004)) represents the structure of the dialogue as a finite-state automaton where each utterance leads to a new state. The frame-based approach represents the dialogue as a process of filling in a form containing slots (e.g. (Aust et al., 1995)). The plan-based approach (Allen and Perrault, 1980) combines plan recognition with the Speech Act theory (Searle, 1969). The logic-based approach represents the dialogue and its context in some logical formalism (e.g. (Hulstijn, 2000)). Finally, the machine learning approach proposes techniques such as reinforcement learning (Frampton and Lemon, 2009) to model the dialogue with Markov Decision Processes.

Most of the existing ECAs only integrate basic dialogue management processes, such as a keyword spotter within a finite-state or a frame-based approach (e.g. the SEMAINE project (Schroder, 2010)). They use regular structures that can only represent linear interaction patterns, whereas dialogue management involves multi-dimensional levels (Bunt, 2011). The model we propose combines planning management for the task resolution (prediction of the child’s interaction) and a more reactive management when dealing with dialogical conventions (dialogue patterns), together with multidimensionality management through the matrix coding of dialogues.


Figure 2: Efficiency and quantity of all sequences for each age.

6 CONCLUSIONS

We have presented a methodology and tools designed

to improve dialogue models that could be integrated

in narrative ECAs. The proposed methodology consists in extracting dialogue patterns, clustering them to encode dialogical conventions, and using an event prediction approach to plan the interactions of the listener. A

matrix representation of interactions is used to encode

the multidimensional aspects of dialogues. The algo-

rithms were applied to a corpus of child-parent inter-

actions during narration. Finally, we have shown why

interactive narration requires prominent interactions

and emotion.

REFERENCES

Ales, Z., Duplessis, G. D., Şerban, O., and Pauchet, A. (2012). A methodology to design human-like embodied conversational agents based on dialogue analysis. In Proc. of HAIDM@AAMAS, pages 34–49, Valencia, Spain.

Allen, J. and Perrault, C. (1980). Analyzing intention in

utterances. AI magazine, 15(3):143–178.

Aust, H., Oerder, M., Seide, F., and Steinbiss, V. (1995).

The philips automatic train timetable information sys-

tem. Speech Communication, 17(3-4):249–262.

Bunt, H. (2011). Multifunctionality in dialogue. Computer

Speech and Language, 25(2):222–245.

Cassell, J., Bickmore, T., Campbell, L., Vilhjálmsson, H., and Yan, H. (2000). Embodied conversational agents. chapter Human conversation as a system framework: designing embodied conversational agents, pages 29–63. MIT Press.

Chanoni, E. (2009). Comment les mères racontent une histoire de fausses croyances à leur enfant de 3 à 5 ans ? Number 2, pages 181–189.

Frampton, M. and Lemon, O. (2009). Recent research

advances in reinforcement learning in spoken di-

alogue systems. Knowledge Engineering Review,

24(04):375–408.

Hulstijn, J. (2000). Dialogue games are recipes for joint

action. In Proc. of Gotalog’00.

Lecroq, T., Pauchet, A., and Solano, E. C. E. G. A. (2012).

Pattern discovery in annotated dialogues using dy-

namic programming. In IJIIDS, volume 6, pages 603–

618.

McTear, M. (2004). Spoken dialogue technology: toward

the conversational user interface. Springer-Verlag

New York Inc.

Pelachaud, C. (2009). Modelling multimodal expression of

emotion in a virtual agent. Philosophical Trans. of the

Royal Society B: Biological Sciences, 364(1535).

Schroder, M. (2010). The SEMAINE API: towards

a standards-based framework for building emotion-

oriented systems. Advances in HCI, 2010:2–2.

Searle, J. (1969). Speech Acts: An Essay in the Philosophy

of Language. Cambridge University.

Swartout, W. R., Gratch, J., Jr., R. W. H., Hovy, E. H.,

Marsella, S., Rickel, J., and Traum, D. R. (2006). To-

ward virtual humans. AI Magazine, 27(2):96–108.
