HYBRID APPROACH FOR IMPROVED PARTICLE SWARM OPTIMIZATION USING ADAPTIVE PLAN SYSTEM WITH GENETIC ALGORITHM

Pham Ngoc Hieu and Hiroshi Hasegawa

Department of Systems Engineering and Science, Shibaura Institute of Technology, Saitama, Japan

Keywords:

Particle swarm optimization (PSO), Genetic algorithms (GAs), Adaptive system, Multi-peak problems.

Abstract:

To reduce the large calculation cost and to improve convergence to the optimal solution for multi-peak optimization problems with multiple dimensions, we propose a new method based on the Adaptive Plan system with Genetic Algorithm (APGA). This is an approach for Improved Particle Swarm Optimization (PSO) using APGA: a hybrid strategy that introduces APGA into the PSO operator (H-PSOGA) to improve convergence towards the optimal solution. H-PSOGA is applied to several benchmark functions with 20 dimensions to evaluate its performance.

1 INTRODUCTION

Product design is becoming more and more complex owing to the various requirements and claims of customers. As a consequence, design problems tend to be multi-peak problems with many dimensions. The Genetic Algorithm (GA) (Goldberg, 1989) is a well-known emergent computing method that has been applied to various multi-peak optimization problems, and its validity has been reported by many researchers. However, it requires a huge computational cost to converge stably to an optimal solution. To reduce this cost and improve stability, a strategy that combines global and local search methods becomes necessary, and various such methods have been proposed. For instance, Memetic Algorithms (MAs) are a class of stochastic global search heuristics in which approaches based on Evolutionary Algorithms (EAs) are combined with local search techniques to improve the quality of the solutions created by evolution. MAs have proven very successful in searching multi-peak functions with multiple dimensions (Smith et al., 2005). These methodologies must select a suitable local search method from among various candidates to combine with the global search method during the optimization process. Furthermore, because genetic operators are employed as the global search method in these algorithms, design variable vectors renewed by the local search are encoded into the genes many times during the GA process. This can break the improved chromosomes through gene manipulation by the GA operators, even if a proper survival strategy is chosen.

To solve these problems and maintain stable convergence to an optimal solution for multi-peak optimization problems with multiple dimensions, Hasegawa et al. proposed a new evolutionary algorithm (EA) called the Adaptive Plan system with Genetic Algorithm (APGA) (Hasegawa, 2007).

Recently, meta-heuristics have received a great deal of attention. One of them is Particle Swarm Optimization (PSO) (Kennedy and Eberhart, 2001). PSO relies on simple iterative calculations, so it is easy to implement, and it is therefore applicable to a wide range of optimization problems. However, it can be difficult for PSO to find the global optimal solution of complex objective functions that have many local optima. The main problem of PSO is that it tends to converge prematurely to a stable point that is not necessarily optimal. To resolve this problem, various improved algorithms have been proposed, and they have proven successful in solving a variety of optimization problems.

In this paper, we propose a hybrid strategy for Improved PSO using APGA (H-PSOGA) to improve convergence to the optimal solution.

This paper is organized as follows. The concept of PSO is described in Section 2, and the concept of APGA in Section 3. Section 4 explains the algorithm of the proposed strategy (H-PSOGA), and Section 5 discusses convergence to the optimal solution of multi-peak benchmark functions. Finally, Section 6 presents brief conclusions.

Ngoc Hieu P. and Hasegawa H.

HYBRID APPROACH FOR IMPROVED PARTICLE SWARM OPTIMIZATION USING ADAPTIVE PLAN SYSTEM WITH GENETIC ALGORITHM.

DOI: 10.5220/0003626202670272

In Proceedings of the International Conference on Evolutionary Computation Theory and Applications (ECTA-2011), pages 267-272

ISBN: 978-989-8425-83-6

Copyright © 2011 SCITEPRESS (Science and Technology Publications, Lda.)

2 PARTICLE SWARM OPTIMIZATION

2.1 PSO Algorithms

Particle Swarm Optimization (PSO) is a population-based stochastic optimization technique inspired by the social behavior of bird flocking and fish schooling (Kennedy and Eberhart, 2001).

PSO is a multipoint search algorithm that is effective for solving optimization problems. It can be said to be highly versatile, because it uses only the values of the objective function and can search regardless of whether the objective function is continuous or differentiable (Shi and Eberhart, 1998).

We are concerned here with the gbest model, which is known as the conventional PSO. In the gbest model, each particle in the swarm holds its current position and velocity in the search space. It also holds the best solution it has found so far, pbest, and the best solution found so far by the whole swarm, gbest. Each particle moves toward the global optimal solution by updating its velocity from its current position, pbest and gbest according to the following equations:

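$$v_{ij}^{k+1} = w\,v_{ij}^{k} + c_1\,\mathrm{rand}_1()_{ij}\left(pbest_{ij}^{k} - x_{ij}^{k}\right) + c_2\,\mathrm{rand}_2()_{ij}\left(gbest_{j}^{k} - x_{ij}^{k}\right) \qquad (1)$$

$$x_{ij}^{k+1} = x_{ij}^{k} + v_{ij}^{k+1} \qquad (2)$$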

where v and x are the velocity and position, respectively; i is the particle number and j indicates the j-th component of these vectors; $pbest_i$ is the best position that the i-th particle has ever found; gbest is the best position that the swarm has ever found; $\mathrm{rand}_1$ and $\mathrm{rand}_2$ are two uniformly distributed random values independently generated within [0, 1] for the j-th dimension; w is the inertia weight; $c_1$ and $c_2$ are the acceleration coefficients; and k is the iteration number.

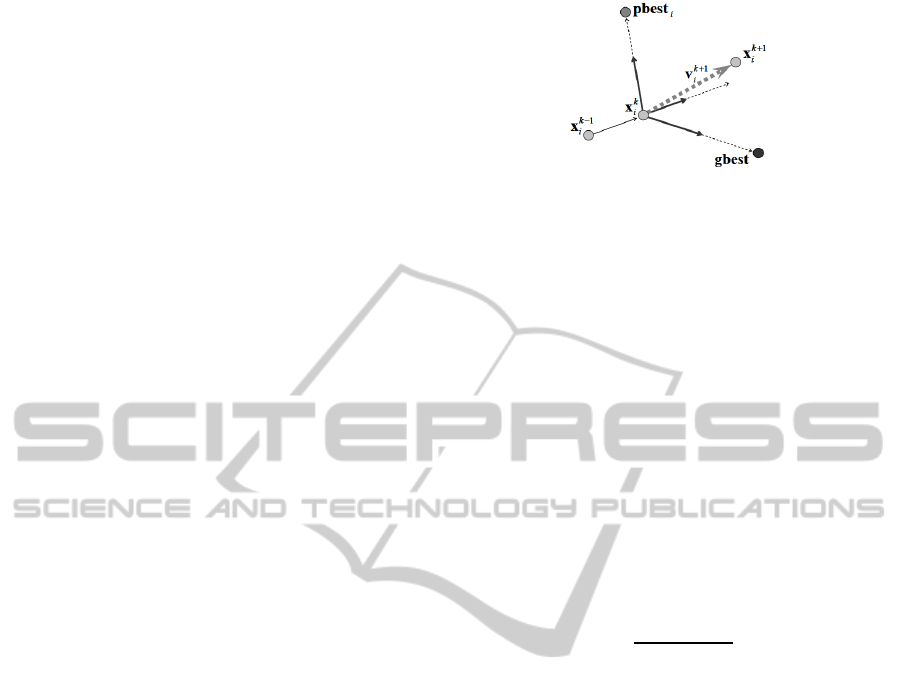

The position of each particle is updated with (2) using the velocity updated in (1), as shown in Figure 1. After a number of iterations, the final gbest is taken as the global optimal solution found by PSO.

Figure 1: Image of PSO Algorithm.
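The update loop of eqs. (1) and (2) can be sketched in Python as below. This is a minimal illustration under stated assumptions, not the authors' implementation: it assumes minimization, draws $\mathrm{rand}_1$ and $\mathrm{rand}_2$ with NumPy's `Generator.random` (which samples [0, 1) rather than [0, 1]), uses the LDIW schedule and $c_1 = c_2 = 2.0$ reported in Section 5, and clips positions to the search bounds, which the text does not specify. The function name `pso_gbest` is hypothetical.

```python
import numpy as np

def pso_gbest(f, n, lb, ub, swarm=20, iters=300,
              w_max=0.9, w_min=0.4, c1=2.0, c2=2.0, seed=0):
    """Minimize f with a gbest-model PSO following eqs. (1) and (2)."""
    rng = np.random.default_rng(seed)
    x = rng.uniform(lb, ub, size=(swarm, n))    # positions x_ij
    v = np.zeros((swarm, n))                     # velocities v_ij
    pbest = x.copy()                             # best position of each particle
    pbest_f = np.array([f(p) for p in x])
    gbest = pbest[np.argmin(pbest_f)].copy()     # best position of the swarm
    for k in range(iters):
        w = w_max - (w_max - w_min) * k / iters  # LDIW inertia weight, eq. (3)
        r1 = rng.random((swarm, n))              # rand_1()_ij, uniform in [0, 1)
        r2 = rng.random((swarm, n))              # rand_2()_ij, uniform in [0, 1)
        # Eq. (1): velocity update from current position, pbest and gbest
        v = w * v + c1 * r1 * (pbest - x) + c2 * r2 * (gbest - x)
        # Eq. (2): position update; bound clipping is an added assumption
        x = np.clip(x + v, lb, ub)
        fx = np.array([f(p) for p in x])
        better = fx < pbest_f                    # particles that improved their pbest
        pbest[better] = x[better]
        pbest_f[better] = fx[better]
        gbest = pbest[np.argmin(pbest_f)].copy()
    return gbest, float(pbest_f.min())
```

For example, `pso_gbest(lambda z: float(np.sum(z**2)), n=2, lb=-5.0, ub=5.0)` minimizes a two-dimensional sphere function and returns the final gbest and its objective value.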

2.2 Improved PSO

Given its simple concept and effectiveness, PSO has become a popular optimizer and has been widely applied to practical problem solving. Meanwhile, much research on performance improvements has been reported, including parameter studies, combination with auxiliary operations, and topological structures.

Linearly Decreasing Inertia Weight (LDIW) is a technique for basic PSO introduced by Shi and Eberhart (1998), in which the inertia weight w in (1) decreases linearly with the iteration count. In this method, w is given by:

$$w = w_{max} - \frac{w_{max} - w_{min}}{iteration_{max}} \cdot iteration \qquad (3)$$

where the maximum and minimum weights $w_{max}$ and $w_{min}$ are empirically set to 0.9 and 0.4, respectively, and $iteration_{max}$ is the maximum number of iterations.
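Eq. (3) is a one-line schedule. The sketch below (the function name `ldiw` is hypothetical) evaluates it with the default weights 0.9 and 0.4. With a terminal generation of 5000, for example, w falls from 0.9 at generation 0 to 0.65 at generation 2500 and to 0.4 at generation 5000.

```python
def ldiw(iteration, iteration_max, w_max=0.9, w_min=0.4):
    """Linearly decreasing inertia weight, eq. (3)."""
    return w_max - (w_max - w_min) / iteration_max * iteration

# Inertia weight at the start, middle and end of a 5000-generation run
schedule = [ldiw(k, 5000) for k in (0, 2500, 5000)]
```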

In the PSO algorithm, the concepts of diversification and intensification are quite important, because they determine the characteristics of the swarm and its search performance. Using (3), the search behavior of the particles shifts from diversification to intensification as the inertia weight decreases linearly during the search.

In addition to the inertia weight, the acceleration coefficients $c_1$ and $c_2$ are also important parameters in PSO; both are essential to its success. Kennedy and Eberhart (2001) suggested a fixed value of 2.0, and this configuration has been adopted by many other researchers.

Another active research trend is hybrid PSO, which combines PSO with other evolutionary paradigms. Many such techniques have been hybridized with PSO to enhance performance, to prevent the swarm from crowding too closely, and to locate as many optimal solutions as possible. For a review of hybrid approaches to PSO, the reader is referred to, e.g., Zhan et al. (2009).

ECTA 2011 - International Conference on Evolutionary Computation Theory and Applications


3 ADAPTIVE SYSTEM WITH GENETIC ALGORITHM

3.1 The Optimization Problem

The optimization problem is formulated in this section. The design variables, objective function and constraint condition are defined as follows:

$$\text{Design variable:}\quad X = [x_1, \ldots, x_n] \qquad (4)$$

$$\text{Objective function:}\quad -f(X) \rightarrow \mathrm{Max} \qquad (5)$$

$$\text{Constraint condition:}\quad X^{LB} \le X \le X^{UB} \qquad (6)$$

where $X^{LB} = [x_1^{LB}, \ldots, x_n^{LB}]$, $X^{UB} = [x_1^{UB}, \ldots, x_n^{UB}]$ and n denote the lower boundary condition vector, the upper boundary condition vector and the number of design variables (DVs), respectively. The number of significant digits of each DV is specified, and the DVs are rounded to that number of places during the optimization process.
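The handling of eqs. (5) and (6) and of the digit rounding might look like the sketch below. It assumes that the constraint (6) is enforced by clipping (the text does not say how) and that "number of digits" means decimal places, as in Table 2; the function names `fitness` and `enforce_dv` are hypothetical.

```python
import numpy as np

def fitness(f, x):
    """Eq. (5): maximizing -f(X) is equivalent to minimizing f(X)."""
    return -f(x)

def enforce_dv(x, x_lb, x_ub, digits):
    """Keep DVs inside [X_LB, X_UB] (eq. 6), then round to `digits` decimal places."""
    x = np.clip(np.asarray(x, dtype=float), x_lb, x_ub)  # clipping is an assumption
    return np.round(x, digits)                           # decimal-place rounding
```

For instance, `enforce_dv([1.234, -7.0, 3.99], -5.0, 5.0, 2)` clips the second DV to the lower bound and rounds the first to two decimal places.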

3.2 APGA

The APGA concept was introduced as a new EA strat-

egy for multi-peak optimization problems. Its con-

cept differs in handling DVs from general EAs based

on GAs. EAs generally encode DVs into the genes

of a chromosome, and handle them through GA op-

erators. However, APGA completely separates DVs

of global search and local search methods. It en-

codes Control variable vectors (CVs) of AP into its

genes on Adaptive system (AS). Moreover, this sep-

aration strategy for DVs and chromosomes can solve

Memetic Algorithm (MA) problem of breaking chro-

mosomes (Smith et al., 2005). The conceptual pro-

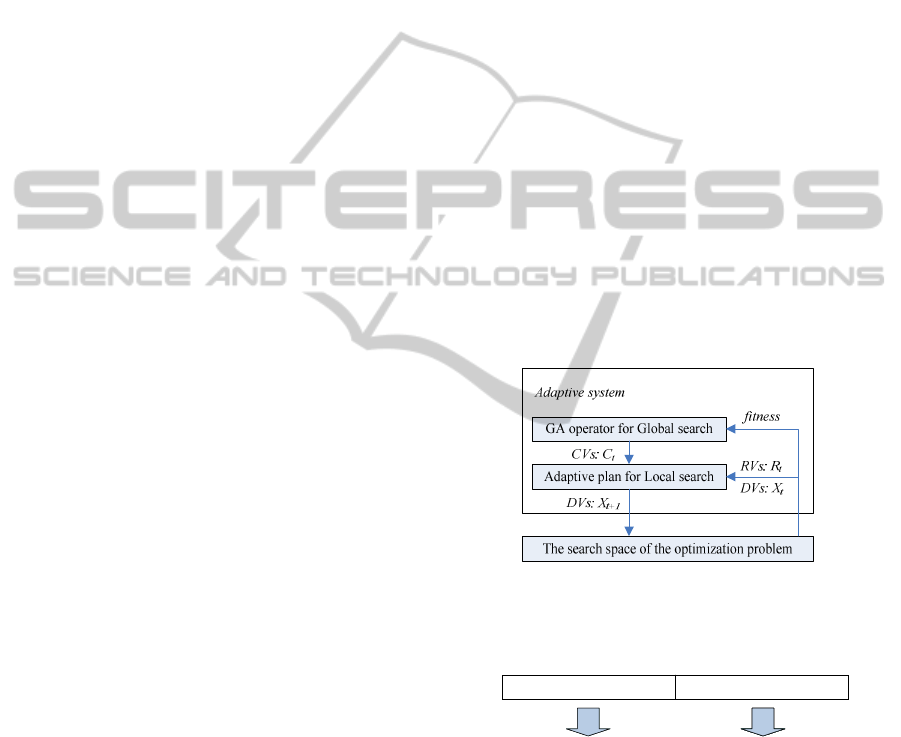

cess of APGA is shown in Figure 2. The control

variable vectors (CVs) steer the behavior of adaptive

plan (AP) for a global search, and are renewed via ge-

netic operations by estimating fitness value. For a lo-

cal search, AP generates next values of DVs by using

CVs, response value vectors (RVs) and current values

of DVs according to the formula:

$$X^{t+1} = X^{t} + NR^{t} \cdot AP(C^{t}, R^{t}) \qquad (7)$$

where NR, AP(), X, C, R and t denote the neighborhood ratio, the AP function, the DVs, the CVs, the RVs and the generation, respectively. For a verification of the APGA search process, refer to (Sousuke Tooyama, 2009; Pham Ngoc Hieu, 2010).

3.3 Adaptive Plan

The AP must realize a local search process by applying heuristic rules. In this paper, the plan adopts as its heuristic rule a DV generation formula based on sensitivity analysis, which is effective for convex function problems, because a multi-peak problem can be regarded as a combination of convex functions. The plan uses the following equations:

$$AP(C^{t}, R^{t}) = -Scale \cdot SP \cdot \mathrm{sign}(\nabla R^{t}) \qquad (8)$$

$$SP = 2C^{t} - 1 \qquad (9)$$

where Scale and ∇R denote the scale factor and the sensitivity of the RVs, respectively. The step size SP is defined by the CVs to control the global behavior and prevent the search from falling into a local optimum. $C = [c_{i,1}, \ldots, c_{i,p}]$ ($0.0 \le c_{i,j} \le 1.0$) is used in (9) so that the search direction can change to either improve or worsen the objective function, and C is encoded into a chromosome as 10-bit strings, as shown in Figure 3. Here i, j and p are the individual number, the design variable number and the number of design variables, respectively.
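Eqs. (7)-(9) combine into a single local-search step, sketched below for one individual. The sketch assumes the sensitivity ∇R is already available as an array (the text does not state how it is computed), and the names `ap_sensitivity` and `apga_local_step` are hypothetical. A CV near 1 gives SP near +1 and moves each DV against the sign of its sensitivity (downhill for minimization), a CV near 0 moves it uphill, and a CV of exactly 0.5 leaves it unchanged.

```python
import numpy as np

def ap_sensitivity(c, grad_r, scale):
    """Eqs. (8)-(9): AP(C, R) = -Scale * SP * sign(grad R), with SP = 2C - 1."""
    sp = 2.0 * np.asarray(c, dtype=float) - 1.0  # step size SP in [-1, 1]
    return -scale * sp * np.sign(grad_r)

def apga_local_step(x, c, grad_r, nr, scale):
    """Eq. (7): X^{t+1} = X^t + NR^t * AP(C^t, R^t)."""
    return np.asarray(x, dtype=float) + nr * ap_sensitivity(c, grad_r, scale)
```

For the sphere function the sensitivity is 2x, so `apga_local_step([1.0, 1.0], [1.0, 0.0], [2.0, 2.0], nr=0.1, scale=1.0)` moves the first DV downhill to 0.9 and the second uphill to 1.1.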

Figure 2: Conceptual process of APGA.

[Figure 3: For individual i, the step size $c_{i,1}$ of $x_1$ is encoded as 0001010000, giving $c_{i,1} = 80/1023 = 0.07820$; the step size $c_{i,2}$ of $x_2$ is encoded as 0000010100, giving $c_{i,2} = 20/1023 = 0.01955$.]

Figure 3: Encoding into genes of a chromosome.
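The decoding shown in Figure 3 divides the integer value of each 10-bit gene by $2^{10} - 1 = 1023$, so every CV lies in [0, 1]. A minimal sketch, assuming the chromosome is stored as a plain string of "0"/"1" characters with one 10-bit gene per design variable (the function names are hypothetical):

```python
def decode_cv(bits: str) -> float:
    """Decode one 10-bit gene into a CV c_ij in [0, 1] (integer value / 1023)."""
    if len(bits) != 10 or set(bits) - {"0", "1"}:
        raise ValueError("expected a 10-character string of 0s and 1s")
    return int(bits, 2) / (2**10 - 1)

def decode_chromosome(chromosome: str) -> list[float]:
    """Split a chromosome into consecutive 10-bit genes, one per design variable."""
    return [decode_cv(chromosome[i:i + 10]) for i in range(0, len(chromosome), 10)]
```

Decoding `"0001010000" + "0000010100"` yields approximately [0.07820, 0.01955], the two values in Figure 3.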

3.4 GA Operators

3.4.1 Selection

Selection is performed using a tournament strategy to maintain the diversity of individuals and avoid premature convergence. A tournament size of 2 is used.
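Tournament selection of size 2 can be sketched as follows. The sketch assumes that higher fitness is better and that contenders are drawn without replacement; the text does not specify either detail, and `tournament_select` is a hypothetical name.

```python
import random

def tournament_select(population, fitness, rng: random.Random, size=2):
    """Return the fittest of `size` randomly drawn individuals."""
    contenders = rng.sample(range(len(population)), size)  # distinct indices
    winner = max(contenders, key=lambda i: fitness[i])     # higher fitness wins
    return population[winner]
```

Because the worst individual can only win against itself, a size-2 tournament drawn without replacement never selects it, while still giving mediocre individuals a chance. This is the diversity-preserving property the selection step relies on.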


3.4.2 Elite Strategy

An elite strategy, in which the best individual survives into the next generation, is adopted in every generation.

Because the DVs are updated by the AP rather than by the GA operators, the best individual (the elite individual) is assumed to generate two AP behaviors. The strategy therefore replicates the best individual into two elite individuals and keeps both in the next generation.
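The elite replication can be sketched as below. The sketch assumes higher fitness is better and that the remaining slots of the next generation are filled with offspring from the GA operators; the function name `next_generation_with_elites` is hypothetical. Deep copies keep the two elites independent, so each can follow its own AP behavior.

```python
import copy

def next_generation_with_elites(population, fitness, offspring):
    """Two copies of the best individual, followed by offspring to fill the population."""
    best = max(range(len(population)), key=lambda i: fitness[i])  # higher fitness is better
    elites = [copy.deepcopy(population[best]) for _ in range(2)]  # two independent elites
    return elites + list(offspring[: len(population) - 2])
```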

3.4.3 Crossover and Mutation

To pick up the best values of each CV, single-point crossover is applied to the string of each CV. Over the whole chromosome string, this can be regarded as a form of uniform crossover.
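Per-CV single-point crossover, as described above, chooses an independent cut point inside every 10-bit gene. A minimal sketch, assuming string chromosomes and a cut strictly inside each gene (the function name is hypothetical; the crossover ratio of 0.8 given in Section 5 would decide whether a pair is crossed at all):

```python
import random

def per_cv_crossover(parent_a: str, parent_b: str, rng: random.Random, gene_len=10):
    """Single-point crossover applied independently to each CV's bit string."""
    child_a, child_b = [], []
    for start in range(0, len(parent_a), gene_len):
        ga = parent_a[start:start + gene_len]
        gb = parent_b[start:start + gene_len]
        cut = rng.randint(1, gene_len - 1)   # cut point inside this gene only
        child_a.append(ga[:cut] + gb[cut:])  # head of A, tail of B
        child_b.append(gb[:cut] + ga[cut:])  # head of B, tail of A
    return "".join(child_a), "".join(child_b)
```

Since each gene gets its own cut point, the crossover points are scattered across the whole chromosome, which is why the operation resembles a uniform crossover at the chromosome level.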

Mutation is applied to each string at the mutation ratio in every generation to keep the strings diverse.
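One common reading of "mutation ratio" is a per-bit flip probability. The sketch below assumes that reading (the text does not say whether the ratio applies per bit or per string) and uses the value 0.01 from Section 5 as its default; `mutate` is a hypothetical name.

```python
import random

def mutate(bits: str, rng: random.Random, ratio=0.01):
    """Flip each bit independently with probability `ratio`."""
    return "".join(
        ("1" if b == "0" else "0") if rng.random() < ratio else b
        for b in bits
    )
```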

3.4.4 Recombination

Under the following conditions, the genetic information in the chromosomes of the individuals is recombined using a uniform random function:

• One fitness value accounts for 80% of the fitness values of all individuals.

• One chromosome accounts for 80% of the population.
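The two trigger conditions can be checked with simple frequency counts. This sketch assumes that "accounts for 80%" means that at least 80% of the individuals share the same fitness value or the same chromosome, and that either condition alone triggers recombination; fitness values are compared for exact equality. The name `needs_recombination` is hypothetical.

```python
from collections import Counter

def needs_recombination(fitness, chromosomes, threshold=0.8):
    """True if one fitness value or one chromosome is shared by >= 80% of individuals."""
    n = len(chromosomes)
    top_fitness_share = Counter(fitness).most_common(1)[0][1] / n
    top_chromosome_share = Counter(chromosomes).most_common(1)[0][1] / n
    return top_fitness_share >= threshold or top_chromosome_share >= threshold
```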

If this manipulation were applied to general GAs, an improved chromosome into which DVs have been encoded would be broken down. In APGA, however, the genetic information consists only of the CVs, which determine the AP behavior. Therefore, to prevent the search from falling into a local optimum, and to escape once it has converged to one, a new AP behavior is provided by recombining the genes of the CVs in the chromosome, and the optimal search process restarts its exploration with this new behavior. This strategy is expected to behave like the re-annealing of simulated annealing (SA).

4 HYBRID PSO AND APGA

Many optimization techniques called meta-heuristics, including PSO, are designed on a similar concept. In PSO, when a particle discovers a good solution, the other particles gather around it. Therefore, they often cannot escape from a local optimal solution, and consequently PSO cannot achieve a global search. APGA, on the other hand, which combines the global search ability of a GA with an Adaptive Plan that has excellent local search ability, is superior to other EAs (MAs). With a view to global search, we propose a new PSO-based algorithm that takes hints from APGA, named H-PSOGA. The communication between PSO and APGA is described below.

4.1 The Concept of Proposed Method

4.1.1 Parallel Method (Type 1)

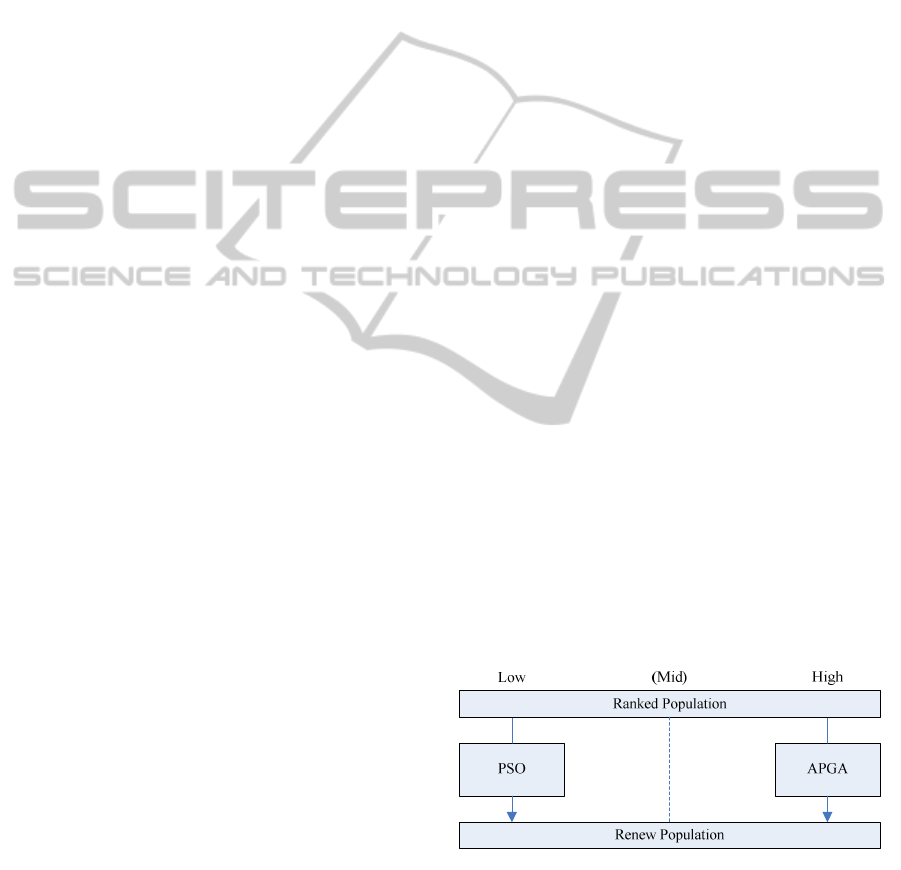

H-PSOGA Type 1 estimates the fitness of all individuals, sorts them, and ranks them by the sorting results. In this method, PSO and APGA are run in parallel: one half of the individuals are assigned to the PSO operator, and the other half are handled by the APGA process. This type keeps both PSO and APGA iterating for the entire run, so it consists chiefly of a genetic algorithm (GA) combined with PSO. The sequential steps of the algorithm are given below, and the operations are illustrated briefly in Figure 4.

Step 1: Estimate the fitness values of all individuals, then sort and rank them by the results.

Step 2: Evolve the good individuals (high fitness values) with APGA to produce offspring.

Step 3: Evolve the low individuals (low fitness values) with PSO and update their positions with the velocity.

Step 4: Combine them into the new population, calculate their fitness, and choose the best position as gbest.

Step 5: Repeat Steps 2-4 until a termination condition, such as finding a sufficiently good solution or completing a maximum number of generations, is satisfied.

The best-scoring individual in the population is taken as the global optimal solution.

Figure 4: H-PSOGA Type 1.
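One Type 1 generation (Steps 1-4) can be sketched as a dispatcher that ranks the population and routes each half to a different operator. This is a structural sketch only: it assumes higher fitness is better, passes the APGA and PSO updates in as callables (`apga_update` and `pso_update` are hypothetical stand-ins for the operators of Sections 3 and 2), and gives the extra individual to the PSO half when the population size is odd.

```python
import numpy as np

def type1_generation(x, f, apga_update, pso_update):
    """One H-PSOGA Type 1 generation (Steps 1-4); f returns fitness, higher is better."""
    fitness = np.array([f(row) for row in x])
    order = np.argsort(fitness)[::-1]             # Step 1: rank, best first
    half = len(order) // 2
    good, low = order[:half], order[half:]
    new_x = np.empty_like(x)
    new_x[good] = apga_update(x[good])            # Step 2: high-fitness half -> APGA
    new_x[low] = pso_update(x[low])               # Step 3: low-fitness half -> PSO
    new_fitness = np.array([f(row) for row in new_x])
    gbest = new_x[np.argmax(new_fitness)].copy()  # Step 4: combine, pick gbest
    return new_x, gbest
```

Step 5 would wrap this function in a loop that stops at the terminal generation or when a sufficiently good solution is found.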

4.1.2 Serial Method (Type 2)

H-PSOGA Type 2 aims to take the search direction from the particle swarm and feed it into APGA. This method introduces a DV generation formula that uses the velocity update of the PSO operator instead of sensitivity analysis. The AP generates the next values of the DVs by using the CVs, the velocity v and the current values of the DVs according to the following equations:

$$x^{t+1} = x^{t} + NR^{t} \cdot AP(C^{t}, v^{t+1}) \qquad (10)$$

$$AP(C^{t}, v^{t+1}) = -Scale \cdot SP \cdot v_{ij}^{t+1} \qquad (11)$$

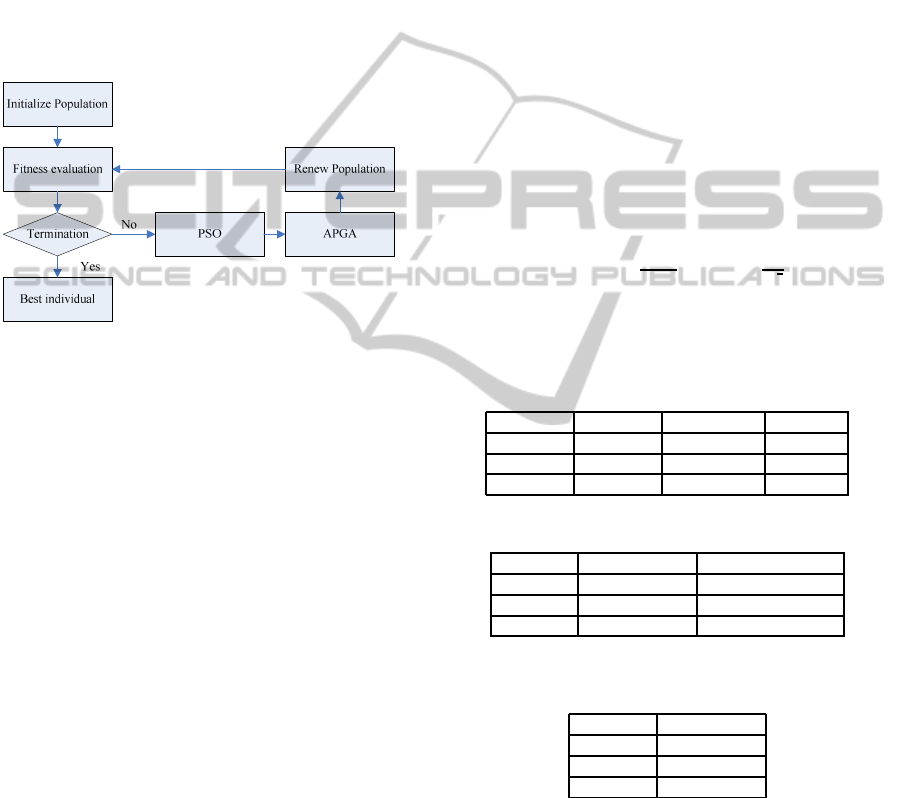

The flowchart of Type 2 is shown in Figure 5. In this approach, PSO and APGA run separately: the iteration is carried out by the PSO operator, and the velocity updated by (1) is given to the AP as an initial parameter for the APGA process.

Figure 5: H-PSOGA Type 2.
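The Type 2 DV update of eqs. (10)-(11) replaces the sensitivity sign of eq. (8) with the PSO velocity itself. The sketch below assumes SP is still computed from the CVs by eq. (9), since (11) uses SP without redefining it, and that $v^{t+1}$ has already been obtained from eq. (1); `type2_dv_update` is a hypothetical name.

```python
import numpy as np

def type2_dv_update(x, c, v_next, nr, scale):
    """Eqs. (10)-(11): x^{t+1} = x^t + NR^t * (-Scale * SP * v^{t+1}), SP = 2C - 1."""
    sp = 2.0 * np.asarray(c, dtype=float) - 1.0        # eq. (9), assumed to apply here
    ap = -scale * sp * np.asarray(v_next, dtype=float) # eq. (11)
    return np.asarray(x, dtype=float) + nr * ap        # eq. (10)
```

Unlike eq. (8), the step length here scales with the magnitude of the velocity, not only with its sign.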

4.2 The Roles of Type 1 and Type 2

As described above, the individuals of Type 1 search intensively around gbest in parallel with APGA, which combines global and local search, and the whole swarm is stabilized. The individuals of Type 2, on the other hand, search globally over the solution space using the direction obtained from the PSO operator and fed into APGA, and the whole swarm is destabilized.

Type 1 can therefore be said to achieve intensification and Type 2 diversification, both with the aim of reducing the calculation cost and improving convergence to the optimal solution.

5 NUMERICAL EXPERIMENTS

Numerical experiments are performed first to compare the methods. To show whether particles can escape from a local optimal solution and find the global optimal solution, we compare the robustness of the optimization process with that of other methodologies. The experiments consist of 20 trials for every function, and the initial random seed is varied in every trial. In each experiment, the GA parameters used for the benchmark functions are set as follows: the selection ratio, crossover ratio and mutation ratio are 1.0, 0.8 and 0.01, respectively. The population size is 50 individuals, and the terminal generation is the 5000th generation. The sensitivity plan parameters in (8) and (11) for normalizing the functions are listed in Table 3. The inertia weight w follows the LDIW method with $w_{max}$ and $w_{min}$ set to 0.9 and 0.4, respectively, and the acceleration coefficients $c_1$ and $c_2$ are fixed at 2.0.
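The experimental settings above, together with the tournament size from Section 3.4.1 and the scale factors from Table 3, can be collected into one configuration object. The class and field names below are hypothetical; only the values come from the text.

```python
from dataclasses import dataclass

@dataclass(frozen=True)
class ExperimentSettings:
    """Settings stated in the text (field names are illustrative)."""
    trials: int = 20
    dimensions: int = 20
    population_size: int = 50
    terminal_generation: int = 5000
    selection_ratio: float = 1.0
    crossover_ratio: float = 0.8
    mutation_ratio: float = 0.01
    tournament_size: int = 2
    w_max: float = 0.9
    w_min: float = 0.4
    c1: float = 2.0
    c2: float = 2.0

# Scale factors for normalizing the benchmark functions (Table 3)
SCALE_FACTORS = {"RA": 10.0, "GR": 100.0, "RO": 4.0}
```

A frozen dataclass makes accidental changes to the settings between trials raise an error.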

5.1 Benchmark Functions

For H-PSOGA, we estimate the stability of convergence to the optimal solution using three benchmark functions with 20 dimensions: Rastrigin (RA), Griewank (GR) and Rosenbrock (RO). These functions are given as follows:

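$$RA = 10n + \sum_{i=1}^{n} \left\{ x_i^2 - 10\cos(2\pi x_i) \right\} \qquad (12)$$

$$GR = 1 + \sum_{i=1}^{n} \frac{x_i^2}{4000} - \prod_{i=1}^{n} \cos\left(\frac{x_i}{\sqrt{i}}\right) \qquad (13)$$

$$RO = \sum_{i=1}^{n-1} \left[ 100\left(x_{i+1} + 1 - (x_i + 1)^2\right)^2 + x_i^2 \right] \qquad (14)$$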
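The three benchmark functions translate directly into NumPy, as sketched below; the function names are illustrative. The Rosenbrock sum stops at $n-1$ because each term uses $x_{i+1}$. Each function evaluates to zero at X = 0, matching the statement that all functions are minimized to zero there.

```python
import numpy as np

def rastrigin(x):
    """Eq. (12)."""
    x = np.asarray(x, dtype=float)
    return 10.0 * x.size + float(np.sum(x**2 - 10.0 * np.cos(2.0 * np.pi * x)))

def griewank(x):
    """Eq. (13)."""
    x = np.asarray(x, dtype=float)
    i = np.arange(1, x.size + 1)  # 1-based index under the square root
    return 1.0 + float(np.sum(x**2) / 4000.0) - float(np.prod(np.cos(x / np.sqrt(i))))

def rosenbrock(x):
    """Eq. (14), shifted so that the minimum lies at X = 0."""
    x = np.asarray(x, dtype=float)
    return float(np.sum(100.0 * (x[1:] + 1.0 - (x[:-1] + 1.0) ** 2) ** 2 + x[:-1] ** 2))
```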

Table 1: Characteristics of the benchmark functions.

| Function | Epistasis | Multi-peak | Steep |
|---|---|---|---|
| RA | No | Yes | Average |
| GR | Yes | Yes | Small |
| RO | Yes | No | Big |

Table 2: Design range, digits of DVs.

| Function | Design range | Number of digits |
|---|---|---|
| RA | No | 2 |
| GR | Yes | 1 |
| RO | Yes | 3 |

Table 3: Scale factor for normalizing the benchmark functions.

| Function | Scale factor |
|---|---|
| RA | 10.0 |
| GR | 100.0 |
| RO | 4.0 |

Table 1 shows their characteristics; the terms epistasis, multi-peak and steep denote the dependence among the DVs, the presence of multiple peaks and the level of steepness, respectively. All functions are minimized to zero when the optimal DVs X = 0 are obtained. Moreover, it is difficult to find their optimal solutions by applying only one optimization strategy, because each function has a different complicated characteristic. Table 2 summarizes their design ranges and the digits of the DVs. The search process is terminated when the search point attains an optimal solution or the current generation reaches the terminal generation.

5.2 Experiment Results

The experimental results are shown in Table 4, where "-" indicates that the success rate of reaching the optimal solution was below 100%. With H-PSOGA Type 1, the solutions of all benchmark functions with 20 dimensions reached their global optima. H-PSOGA Type 2, however, could not reach the optimal solution of the RO function, owing to the communication between the PSO operator and APGA. As a result, we confirm that Type 1 converged faster than Type 2.

H-PSOGA Type 1 converged in a manner similar to the APGA process, with high fitness values. The APGA process reached the global optimum with high probability, while the PSO operator converged faster on the RO function but could not reach the optimum of the RA function.

Based on the results in Table 4, we adopted H-PSOGA Type 1 as the H-PSOGA model.

In summary, these results confirm the validity of the hybrid strategy: it can reduce the computation cost and improve the stability of convergence to the optimal solution.

Table 4: Number of generations with 20 dimensions.

| Function | Basic PSO | H-PSOGA Type 1 | H-PSOGA Type 2 |
|---|---|---|---|
| RA | - | 202 | 405 |
| GR | 2878 | 344 | 538 |
| RO | 2220 | 1353 | - |

5.3 Comparison

H-PSOGA was compared with basic PSO. The basic PSO algorithm converged to the optimal solution of the GR and RO functions but not of the RA function, and the number of iterations it required was still large. The results of basic PSO are shown in Table 4.

The H-PSOGA model (Type 1) outperformed basic PSO on all benchmark functions and converged to the global optimal solution with high probability. Therefore, introducing APGA is a desirable way to improve PSO.

In particular, it was confirmed that the proposed methods reduced the computational cost on the benchmark functions and improved convergence to the optimal solution significantly.
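The size of the reduction can be read off Table 4 by comparing generation counts with basic PSO. The sketch below treats the number of generations as a proxy for computational cost (an assumption, since the table reports generations rather than run time) and maps "-" entries to `None`; the names `TABLE4` and `reduction_vs_basic_pso` are hypothetical.

```python
# Generations from Table 4; None marks "-" (success rate below 100%).
TABLE4 = {
    "RA": {"Basic PSO": None, "Type 1": 202, "Type 2": 405},
    "GR": {"Basic PSO": 2878, "Type 1": 344, "Type 2": 538},
    "RO": {"Basic PSO": 2220, "Type 1": 1353, "Type 2": None},
}

def reduction_vs_basic_pso(func, variant):
    """Fractional reduction in generations relative to basic PSO, or None if undefined."""
    base, new = TABLE4[func]["Basic PSO"], TABLE4[func][variant]
    if base is None or new is None:
        return None
    return 1.0 - new / base
```

For Type 1 this gives a reduction of about 88% on GR (344 versus 2878 generations) and about 39% on RO (1353 versus 2220). No ratio is defined for RA, where basic PSO did not reach the optimum in every trial.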

Overall, H-PSOGA attained robustness, high solution quality, low calculation cost and efficient performance on the benchmark problems tested.

6 CONCLUSIONS

In this paper, to overcome the weakness of PSO that particles cannot escape from a local optimal solution, and to achieve a global search over the solution space of multi-peak optimization problems with multiple dimensions, we proposed a new hybrid approach, H-PSOGA. We then verified the effectiveness of H-PSOGA through numerical experiments on three benchmark functions. The main findings are as follows:

• The search ability of H-PSOGA on multi-dimensional optimization problems is very effective compared with that of basic PSO or GA.

• Both types of H-PSOGA reached the optimal solution, but each has strengths and weaknesses. The key issue is how PSO and APGA should communicate; this is left for future work.

Finally, we plan to compare the sensitivity plan of the AP with plans that apply other optimization methods within the AP, by optimizing several kinds of benchmark functions.

REFERENCES

Goldberg, D. E. (1989). Genetic Algorithms in Search, Optimization and Machine Learning. Addison-Wesley.

Hasegawa, H. (2007). Adaptive plan system with genetic algorithm based on synthesis of local and global search method for multi-peak optimization problems. In 6th EUROSIM Congress on Modelling and Simulation.

Kennedy, J. and Eberhart, R. (2001). Swarm Intelligence. Morgan Kaufmann Publishers.

Pham Ngoc Hieu, H. H. (2010). Hybrid neighborhood control method of adaptive plan system with genetic algorithm. In 7th EUROSIM Congress on Modelling and Simulation.

Shi, Y. and Eberhart, R. C. (1998). A modified particle swarm optimizer. In IEEE World Congr. Comput. Intell.

Smith, J. E., Hart, W. E., and Krasnogor, N. (2005). Recent Advances in Memetic Algorithms. Springer.

Sousuke Tooyama, H. H. (2009). Adaptive plan system with genetic algorithm using the variable neighborhood range control. In IEEE Congress on Evolutionary Computation (CEC 2009).
