ROBOT SKILL SYNTHESIS THROUGH HUMAN VISUO-MOTOR LEARNING

Humanoid Robot Statically-stable Reaching and In-place Stepping

Jan Babič, Blaž Hajdinjak

Jožef Stefan Institute, Ljubljana, Slovenia

Erhan Oztop

ATR Computational Neuroscience Laboratories, JST-ICORP Computational Brain Project

NICT Biological ICT Group, Kyoto, Japan

Keywords:

Humanoid robot, Skill synthesis, Visuo-motor learning, Radial basis functions.

Abstract:

To achieve desirable motion of humanoid robots, we propose a framework for robot skill synthesis that is based on the human capacity for visuo-motor learning. The basic idea is to consider the humanoid robot as a tool that is intuitively controlled by a human demonstrator. Once the demonstrator can control the humanoid robot effortlessly, the desired behavior of the humanoid robot is obtained through practice. The demonstrator’s successful executions of the desired motion are then used to design motion controllers that operate autonomously. In this paper we describe the idea by presenting two robot skills obtained with the proposed framework.

1 INTRODUCTION

If robots could imitate human motion demonstrated to them, acquiring complex robot motions and skills would become very straightforward. One can capture the desired motion of a human subject and map this motion onto the kinematic structure of the robot. However, because the dynamical properties of the humanoid robot differ from those of the human demonstrator, whether this approach keeps the humanoid robot stable depends on the ad-hoc mapping implemented by the researcher (Schaal, 1999). Here we propose a very different approach, in which we use the human demonstrator’s real-time actions to control the humanoid robot and subsequently build an appropriate mapping between the human and the humanoid robot. This effectively creates a closed-loop system in which the human subject actively controls the humanoid robot’s motion in real time, with the requirement that the robot stays stable. The human subject can easily satisfy this requirement thanks to the human brain’s ability to learn to control novel tools (Oztop et al., 2006; Goldenberg and Hagmann, 1998). The robot controlled by the demonstrator can be considered a tool, such as a car or a snowboard being used for the first time. This setup requires the humanoid robot’s state to be transferred to the human as feedback information.

The proposed closed-loop approach exploits the human capability of learning to use novel tools in order to obtain a motor controller for complex motor tasks. The construction of the motor controller has two phases. In the first phase, a human demonstrator performs the desired task on the humanoid robot via an intuitive interface. In the second phase, the obtained motions are learned with machine learning methods to yield an independent motor controller. The two phases are shown in Figure 1.

Figure 1: (A) Human demonstrator controls the robot in closed loop and produces the desired trajectories for the target task. (B) These signals are used to synthesize a controller for the robot to perform this task autonomously.


Babič J., Hajdinjak B. and Oztop E. (2010). ROBOT SKILL SYNTHESIS THROUGH HUMAN VISUO-MOTOR LEARNING - Humanoid Robot Statically-stable Reaching and In-place Stepping. In Proceedings of the 7th International Conference on Informatics in Control, Automation and Robotics (ICINCO 2010), pages 212-215. DOI: 10.5220/0002937502120215

Copyright © SciTePress

In the following sections, we present two example skills obtained with the described framework.

2 STATICALLY STABLE REACHING

The proposed approach can be considered a closed-loop approach in which the human demonstrator is actively included in the main control loop, as shown in Figure 2. The motion of the human demonstrator was acquired by a contact-less motion capture system, and the demonstrator’s joint angles were fed forward to the humanoid robot in real time. In effect, the human acted as an adaptive component of the control system. During such control, a partial state of the robot needs to be fed back to the human subject. For the statically balanced reaching skill, the feedback we used was a rendering of the position of the robot’s centre of mass superimposed on the robot’s support polygon, presented to the demonstrator on a graphical display. During the experiment the demonstrator did not see the humanoid robot.

Figure 2: Closed-loop control of the humanoid robot. Motion of the human is transferred to the robot while the robot’s stability is presented to the human by visual feedback.

The demonstrator’s task was to keep the centre of mass of the humanoid robot within the support polygon while performing reaching movements as directed by the experimenter. After a short practice session, the demonstrator was able to move his body and limbs while keeping the robot’s centre of mass within the support polygon. Hence the robot was statically stable whether the demonstrator-generated motions were imitated by the robot in real time or played back on the robot later. The robot used in the study was the Fujitsu HOAP-II small humanoid robot.

The motion of the humanoid robot was constrained to two dimensions; only the vertical axis and the axis normal to the trunk were considered.
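Because the motion is restricted to the vertical axis and the axis normal to the trunk (the sagittal plane), the support polygon of the two feet reduces to an interval along the horizontal axis, and the static stability condition becomes a one-dimensional comparison of the centre-of-mass coordinate against that interval. The Python sketch below illustrates this under stated assumptions: the link masses, the foot length, and the names `Link`, `centre_of_mass_x` and `is_statically_stable` are hypothetical; only the placement of the toe tips at the origin follows the coordinate frame described for Figure 3.

```python
from dataclasses import dataclass


@dataclass
class Link:
    """One rigid link of a hypothetical planar model of the robot."""
    mass: float   # kg (illustrative value)
    com_x: float  # horizontal coordinate of the link's centre of mass, m


def centre_of_mass_x(links):
    """Horizontal coordinate of the whole-body centre of mass (mass-weighted average)."""
    total_mass = sum(link.mass for link in links)
    return sum(link.mass * link.com_x for link in links) / total_mass


def is_statically_stable(links, foot_length):
    """In the sagittal plane the support polygon is the interval [-foot_length, 0]:
    toe tips at the origin, heels behind them (assumed foot geometry)."""
    x = centre_of_mass_x(links)
    return -foot_length <= x <= 0.0


# Usage with made-up numbers: a heavy trunk behind the toes and a light arm reaching forward.
posture = [Link(mass=3.0, com_x=-0.05), Link(mass=1.0, com_x=0.10)]
print(centre_of_mass_x(posture))           # roughly -0.0125
print(is_statically_stable(posture, 0.1))  # True
```

The vertical coordinate of each link does not enter the check, which is why the sketch stores only the horizontal one.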

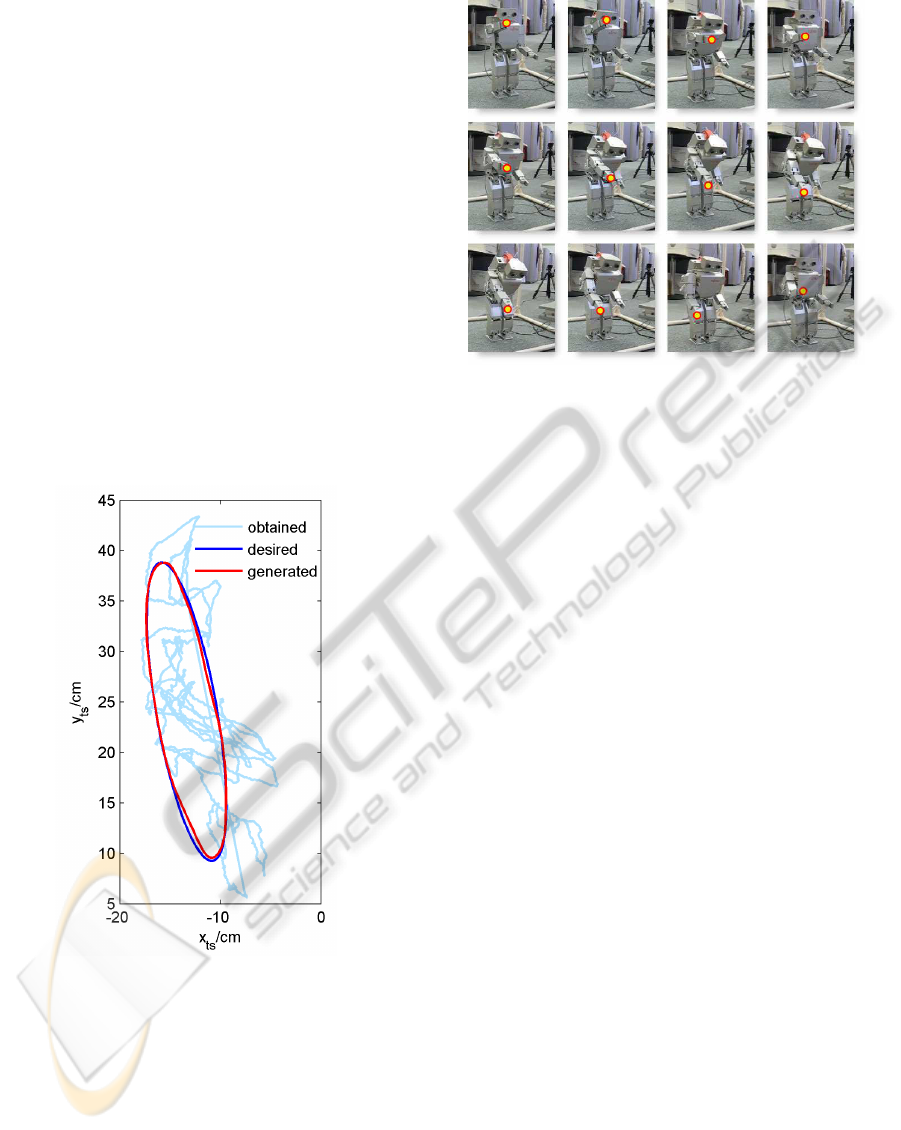

The light wiggly curve in Figure 3 shows the robot end-effector position data generated by the demonstrator. Imagine viewing the humanoid robot from its left side, standing with the tips of its feet at the origin of the coordinate frame and reaching outwards with its right hand gliding over the curve. The long straight segment of the curve connects the beginning and the end of the reaching motion.

For each data point of the obtained end-effector trajectory, the robot joint angles were recorded. Let the rows of the matrix X be the end-effector positions taken from the obtained trajectory, and let the rows of Q be the corresponding robot joint angles. We then seek a non-linear relation of the form

$$Q = \Gamma(X)\,W \tag{1}$$

By performing a non-linear data fit and solving for W, we can then make predictions with

$$q_{\text{pred}} = \Gamma(x_{\text{des}})\,W \tag{2}$$

where $q_{\text{pred}}$ is the vector of predicted joint angles and $x_{\text{des}}$ is the vector of the desired end-effector position. Using this prediction, we can then ask the humanoid robot to reach for a desired position without falling over.

For non-linear data fitting, the recorded positions X are mapped into an N-dimensional space using the Gaussian basis functions

$$\phi_i(x) = \exp\!\left(-\frac{\lVert x - \mu_i \rVert^2}{\sigma^2}\right) \tag{3}$$

where $\mu_i$ and $\sigma^2$ are open parameters to be determined. Each row of X is converted into an N-dimensional vector, forming the data matrix

$$Z = \Gamma(X) = \begin{bmatrix} \phi_1(x_1) & \phi_2(x_1) & \cdots & \phi_N(x_1) \\ \phi_1(x_2) & \phi_2(x_2) & \cdots & \phi_N(x_2) \\ \vdots & \vdots & \ddots & \vdots \\ \phi_1(x_m) & \phi_2(x_m) & \cdots & \phi_N(x_m) \end{bmatrix} \tag{4}$$
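The construction of Z in Eqs. (3) and (4) can be sketched in plain Python as follows. This is an illustrative sketch, not the authors’ code: the function names are hypothetical, and the centres $\mu_i$ are passed in explicitly because the text only states that they are implicitly determined by N.

```python
import math


def gaussian_rbf(x, mu, sigma2):
    """phi_i(x) = exp(-||x - mu_i||^2 / sigma^2), Eq. (3)."""
    sq_dist = sum((xk - mk) ** 2 for xk, mk in zip(x, mu))
    return math.exp(-sq_dist / sigma2)


def design_matrix(X, centres, sigma2):
    """Z = Gamma(X), Eq. (4): row j is [phi_1(x_j), ..., phi_N(x_j)] for the j-th recorded position."""
    return [[gaussian_rbf(x, mu, sigma2) for mu in centres] for x in X]


# Usage with illustrative 2-D end-effector positions (m) and N = 2 hand-picked centres.
X = [(0.0, 0.0), (0.1, 0.05)]
centres = [(0.0, 0.0), (0.1, 0.0)]
Z = design_matrix(X, centres, sigma2=0.01)
print(len(Z), len(Z[0]))  # 2 2
```

Each recorded position contributes one row of length N, so Z has as many rows as X and as many columns as there are basis functions.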

Assuming a linear relation between the rows of Z and Q, we can solve Eq. (1) for W in the least-squares sense:

$$W = Z^{+} Q \tag{5}$$

where $Z^{+}$ denotes the (Moore-Penrose) pseudo-inverse of Z. The residual error is given by

$$\operatorname{tr}\left[(ZW - Q)(ZW - Q)^{T}\right] \tag{6}$$
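A minimal sketch of Eqs. (5) and (6) follows, assuming Z has full column rank, in which case $Z^{+} = (Z^{T}Z)^{-1}Z^{T}$ and W can be obtained from the normal equations. The helper names are hypothetical. For ill-conditioned Gaussian design matrices (for example, with wide basis functions), an SVD-based pseudo-inverse such as NumPy’s `numpy.linalg.pinv` would be the more robust choice; the plain-Python solver below only keeps the example dependency-free.

```python
def transpose(A):
    return [list(col) for col in zip(*A)]


def matmul(A, B):
    """Plain-Python matrix product of lists of rows."""
    Bt = transpose(B)
    return [[sum(a * b for a, b in zip(row, col)) for col in Bt] for row in A]


def solve(A, B, tol=1e-12):
    """Solve A W = B (A square) by Gauss-Jordan elimination with partial pivoting."""
    n = len(A)
    M = [list(A[i]) + list(B[i]) for i in range(n)]  # augmented matrix [A | B]
    for c in range(n):
        p = max(range(c, n), key=lambda r: abs(M[r][c]))
        if abs(M[p][c]) < tol:
            raise ValueError("Z^T Z is singular: Z does not have full column rank")
        M[c], M[p] = M[p], M[c]
        pivot = M[c][c]
        M[c] = [v / pivot for v in M[c]]
        for r in range(n):
            if r != c:
                factor = M[r][c]
                M[r] = [vr - factor * vc for vr, vc in zip(M[r], M[c])]
    return [row[n:] for row in M]


def fit_weights(Z, Q):
    """W = Z^+ Q, Eq. (5), via the normal equations (Z^T Z) W = Z^T Q (full column rank assumed)."""
    Zt = transpose(Z)
    return solve(matmul(Zt, Z), matmul(Zt, Q))


def residual(Z, W, Q):
    """tr[(ZW - Q)(ZW - Q)^T], Eq. (6), i.e. the sum of squared entries of ZW - Q."""
    ZW = matmul(Z, W)
    return sum((a - b) ** 2 for row_zw, row_q in zip(ZW, Q) for a, b in zip(row_zw, row_q))


# Usage: three samples, two basis functions, one joint; this toy Z admits an exact fit.
Z = [[1.0, 0.0], [0.0, 1.0], [1.0, 1.0]]
Q = [[1.0], [2.0], [3.0]]
W = fit_weights(Z, Q)
print(W)                  # [[1.0], [2.0]]
print(residual(Z, W, Q))  # 0.0
```

The trace in Eq. (6) equals the squared Frobenius norm of $ZW - Q$, which is why the sketch simply sums squared entries instead of forming the product matrix.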

In effect, this establishes a non-linear data fit: given a desired end-effector position $x_{\text{des}}$, the joint angles that would achieve this position are given by

$$q_{\text{pred}} = \begin{bmatrix} \phi_1(x_{\text{des}}) & \phi_2(x_{\text{des}}) & \cdots & \phi_N(x_{\text{des}}) \end{bmatrix} W \tag{7}$$
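Once W is known, Eq. (7) is a single row-vector/matrix product per desired position. The sketch below, with a hypothetical function name and placeholder numbers rather than values from the experiment, applies it point by point to a desired Cartesian trajectory, which is how such a trajectory would be converted into a joint trajectory for playback on the robot.

```python
import math


def predict_joint_angles(x_des, centres, sigma2, W):
    """q_pred = [phi_1(x_des) ... phi_N(x_des)] W, Eq. (7).

    centres: the N basis-function centres mu_i used during fitting
    W:       N x (number of joints) weight matrix from Eq. (5), as a list of rows
    """
    phi = [math.exp(-sum((xk - mk) ** 2 for xk, mk in zip(x_des, mu)) / sigma2)
           for mu in centres]
    n_joints = len(W[0])
    return [sum(phi[i] * W[i][j] for i in range(len(centres))) for j in range(n_joints)]


# Usage: map every point of a desired Cartesian trajectory to a joint-angle vector.
# The centres, sigma2 and W below are placeholders, not values from the experiment.
centres = [(0.0, 0.0), (1.0, 0.0)]
W = [[0.5, -0.2], [0.1, 0.3]]
desired = [(0.0, 0.0), (0.5, 0.0), (1.0, 0.0)]
joint_trajectory = [predict_joint_angles(x, centres, 0.1, W) for x in desired]
```

The same $\mu_i$ and $\sigma^2$ used to build Z must be reused here; otherwise the feature vector no longer matches the rows of W.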


The open parameters are N, the number of basis functions, which implicitly determines the centres $\mu_i$, and the variance $\sigma^2$. They were determined using cross-validation. We prepared a desired Cartesian trajectory that was not part of the recorded data set and converted it into a joint trajectory using the current values of $(N, \sigma^2)$. The joint trajectory was simulated on a kinematic model of the humanoid robot, producing an end-effector trajectory. The deviation of the resulting trajectory from the desired trajectory was used as the criterion for choosing the values of the open parameters.
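The parameter selection described above amounts to a grid search scored on a held-out trajectory. The sketch below assumes a hypothetical `fit_predictor(N, sigma2)` that fits Eqs. (4) and (5) for the given parameters and returns a function mapping a desired position to predicted joint angles, and a kinematic model `fk` mapping joint angles to an end-effector position; both stand in for the authors’ own code and robot model. The text does not state which deviation measure was used, so the root-mean-square distance here is an assumption.

```python
import math
from itertools import product


def trajectory_deviation(desired, reproduced):
    """Root-mean-square Euclidean distance between corresponding trajectory points (assumed metric)."""
    sq = [sum((d - r) ** 2 for d, r in zip(p, s)) for p, s in zip(desired, reproduced)]
    return math.sqrt(sum(sq) / len(sq))


def select_open_parameters(N_values, sigma2_values, fit_predictor, desired, fk):
    """Return (N, sigma2, deviation) for the candidate that best reproduces the held-out trajectory.

    For each candidate (N, sigma2): convert the desired Cartesian trajectory into a joint
    trajectory with the fitted predictor, simulate it on the kinematic model fk, and score
    the resulting end-effector trajectory against the desired one.
    """
    best = None
    for N, sigma2 in product(N_values, sigma2_values):
        predict = fit_predictor(N, sigma2)              # x_des -> q_pred
        joint_trajectory = [predict(x) for x in desired]
        reproduced = [fk(q) for q in joint_trajectory]  # kinematic simulation
        err = trajectory_deviation(desired, reproduced)
        if best is None or err < best[2]:
            best = (N, sigma2, err)
    return best


# Toy usage: an identity "kinematic model" and a fake predictor whose bias shrinks as N grows,
# used only to exercise the selection loop.
def toy_fit_predictor(N, sigma2):
    return lambda x: (x[0] + 1.0 / N, x[1])


held_out = [(0.1, 0.2), (0.3, 0.4)]
best = select_open_parameters([5, 10, 20], [0.01, 0.1], toy_fit_predictor, held_out, lambda q: q)
print(best[:2])  # (20, 0.01)
```

Scoring in Cartesian space through `fk`, rather than comparing joint angles directly, matches the procedure in the text: what matters is how closely the played-back motion follows the desired end-effector path.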

Figure 3 shows the desired end-effector trajectory and the end-effector trajectory obtained by playing back the predicted joint angle trajectories on the humanoid robot. The light wiggly curve in Figure 3 represents the end-effector trajectory that was generated by the human demonstrator in the first phase and subsequently used to determine the mapping W from the end-effector position to the joint angles.

Figure 3: The end-effector trajectory generated by the demonstrator (light wiggly curve), the desired end-effector trajectory used as input for the joint angle prediction, and the end-effector trajectory obtained by playing back the predicted joint angle trajectories on the humanoid robot.

The reaching skill we obtained for the humanoid robot was statically stable, which means that the ground projection of the robot’s centre of mass remained inside the robot’s support polygon. A sequence of video frames showing the statically stable autonomous trajectory tracking obtained with our method is shown in Figure 4.

Figure 4: Video frames representing the statically stable reaching motion of the humanoid robot obtained with the proposed approach.

3 IN-PLACE STEPPING

In this section, we present our preliminary work on statically stable in-place stepping of the humanoid robot. In-place stepping requires even stricter balance control than the reaching experiment described in the previous section. For the humanoid robot to lift one of its feet during statically stable in-place stepping, the robot’s centre of mass must be shifted over the opposite leg before the foot is lifted. Because the robot’s centre of mass is relatively high and its feet are relatively small, it is crucial that the position of the robot’s centre of mass can be controlled precisely. For humans, maintaining postural stability is a very intuitive task. If a person’s posture is perturbed, he or she can easily, and without any conscious effort, move the body to counteract the perturbation and stay balanced. The main principle of our approach is to use this natural human capability to maintain the postural stability of humanoid robots. To do so, we designed and manufactured an inclining parallel platform on which the human demonstrator stands during the closed-loop motion transfer (Figure 5).
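The requirement that the centre of mass be shifted over the stance leg before a foot is lifted can be expressed as a point-in-polygon test on the ground plane: with one foot in the air, the support polygon shrinks to the footprint of the stance foot. The following is a sketch under assumptions: the rectangular footprint, its dimensions, and all function names are hypothetical, and the footprint is treated as a convex polygon with counter-clockwise vertices.

```python
def inside_convex_polygon(point, polygon):
    """True if point lies inside or on the boundary of a convex polygon whose (x, y)
    vertices are listed counter-clockwise in the ground plane."""
    px, py = point
    n = len(polygon)
    for i in range(n):
        x1, y1 = polygon[i]
        x2, y2 = polygon[(i + 1) % n]
        # For a counter-clockwise polygon the interior is to the left of every edge,
        # so a negative cross product means the point is outside.
        cross = (x2 - x1) * (py - y1) - (y2 - y1) * (px - x1)
        if cross < 0:
            return False
    return True


def foot_rectangle(cx, cy, length, width):
    """Hypothetical rectangular footprint centred at (cx, cy), vertices counter-clockwise."""
    hl, hw = length / 2, width / 2
    return [(cx - hl, cy - hw), (cx + hl, cy - hw), (cx + hl, cy + hw), (cx - hl, cy + hw)]


def may_lift_swing_foot(com_ground_xy, stance_foot_polygon):
    """Lifting the other foot keeps static stability only if the ground projection
    of the centre of mass lies within the stance foot alone."""
    return inside_convex_polygon(com_ground_xy, stance_foot_polygon)


# Usage: an illustrative 0.10 m x 0.06 m left footprint centred 0.04 m left of the midline.
left_foot = foot_rectangle(0.0, 0.04, length=0.10, width=0.06)
print(may_lift_swing_foot((0.0, 0.0), left_foot))   # False: CoM still between the feet
print(may_lift_swing_foot((0.0, 0.04), left_foot))  # True: CoM shifted over the left foot
```

The small footprint is what makes this test demanding in practice: the admissible region for the centre of mass is only a few centimetres wide.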

Figure 5: Inclining parallel platform that can rotate around all three axes. The platform has a diameter of 0.7 m and can carry an adult human.

Instead of using visual feedback about the robot’s stability as in the reaching experiment, the state of the humanoid robot’s postural stability is fed back to the human demonstrator through the inclining parallel platform. When the humanoid robot is statically stable, the platform stays horizontal. Conversely, when the centre of mass of the robot leaves its support polygon and the robot therefore becomes statically unstable, the platform moves in a way that puts the human demonstrator standing on it in an unstable state that is directly comparable to the instability of the humanoid robot. The human demonstrator is forced to correct his or her balance by moving the body. Consequently, as the motion of the human demonstrator is fed forward to the humanoid robot in real time, the humanoid robot returns to a stable posture together with the demonstrator. With some practice, human demonstrators easily learned how to perform in-place stepping on the humanoid robot. The obtained trajectories can afterwards be used to control the in-place stepping of the humanoid robot autonomously. In future work we plan to extend this approach and use it to acquire walking for humanoid robots. Figure 6 shows the human demonstrator and the Fujitsu HOAP-3 humanoid robot during the in-place stepping experiment.
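The text does not give the law that maps the robot’s stability state to the platform motion. Purely as an illustration of the described behaviour (horizontal while the robot is statically stable, inclined once the centre of mass leaves the support polygon), one could command a tilt per horizontal axis that is zero inside an axis-aligned support rectangle and grows with the distance by which the centre of mass lies outside it. The proportional gain, the saturation limit, the rectangular support region, and the function names below are all hypothetical.

```python
def axis_tilt(com, support_min, support_max, gain, max_tilt):
    """Hypothetical tilt command (rad) for one platform axis.

    Zero while the CoM coordinate lies within [support_min, support_max] (platform horizontal);
    otherwise proportional to how far the CoM lies outside, signed toward the side it left,
    and clipped to [-max_tilt, max_tilt].
    """
    if com > support_max:
        excess = com - support_max
    elif com < support_min:
        excess = com - support_min  # negative: CoM left on the lower side
    else:
        return 0.0
    return max(-max_tilt, min(max_tilt, gain * excess))


def platform_command(com_xy, support_rect, gain=2.0, max_tilt=0.2):
    """Tilt commands for the x and y directions; support_rect = ((xmin, xmax), (ymin, ymax))."""
    (xmin, xmax), (ymin, ymax) = support_rect
    return (axis_tilt(com_xy[0], xmin, xmax, gain, max_tilt),
            axis_tilt(com_xy[1], ymin, ymax, gain, max_tilt))


# Usage with made-up numbers: support rectangle of both feet, CoM drifting forward past the toes.
support = ((-0.05, 0.05), (-0.07, 0.07))
print(platform_command((0.0, 0.0), support))   # (0.0, 0.0): robot stable, platform horizontal
print(platform_command((0.08, 0.0), support))  # x tilt of about 0.06 rad, y tilt 0.0
```

The dead zone inside the support region reproduces the property stated in the text that the platform stays level while the robot is statically stable; the saturation merely keeps the illustrative command bounded.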

Figure 6: The human demonstrator and the Fujitsu HOAP-3 humanoid robot during the in-place stepping experiment. The video frame on the left shows the human demonstrator performing in-place stepping on the inclining parallel platform. The frame on the right shows the humanoid robot in the single-foot posture.

4 CONCLUSIONS

One goal of imitation from demonstration is to remove the burden of robot programming from experts by letting non-experts teach robots. The most basic method to transfer a motion from a demonstrator to a robot would be to copy the motor commands of the demonstrator directly to the robot (Atkeson et al., 2000) and to adapt these commands to the robot with some form of local controller. Our approach differs in that the correct motor commands for the robot are produced by the human demonstrator. The price one has to pay for this convenience is the need for training to control the robot to achieve the desired action. In essence, instead of relying on expert robot programming, our method relies on the human visuo-motor learning ability to produce the appropriate motor commands for the robot, which can be played back later or used to obtain controllers through machine learning methods, as in our reaching example.

The main result of our study is the establishment of methods to synthesize robot motion using human visuo-motor learning. To demonstrate the effectiveness of the proposed approach, statically stable reaching and in-place stepping were implemented on a humanoid robot using the introduced paradigm.

ACKNOWLEDGEMENTS

The research work reported here was made possible by the Japan Society for the Promotion of Science and the Slovenian Ministry of Higher Education, Science and Technology.

REFERENCES

Atkeson, C., Hale, J., Pollick, F., Riley, M., Kotosaka, S., Schaal, S., Shibata, S., Tevatia, T., Ude, A., Vijayakumar, S., and Kawato, M. (2000). Using humanoid robots to study human behavior. IEEE Intelligent Systems, 15:45–56.

Goldenberg, G. and Hagmann, S. (1998). Tool use and mechanical problem solving in apraxia. Neuropsychology, 36:581–589.

Oztop, E., Lin, L.-H., Kawato, M., and Cheng, G. (2006). Dexterous skills transfer by extending human body schema to a robotic hand. In IEEE-RAS International Conference on Humanoid Robotics.

Schaal, S. (1999). Is imitation learning the route to humanoid robots? Trends Cogn Sci, 3:233–242.
