HIERARCHICAL CONDITIONAL RANDOM FIELD FOR

MULTI-CLASS IMAGE CLASSIFICATION

Michael Ying Yang, Wolfgang Förstner

Department of Photogrammetry, Bonn University, Bonn, Germany

Martin Drauschke

Institute for Applied Computer Science, Bundeswehr University Munich, Munich, Germany

Keywords:

Multi-class image classification, Hierarchical conditional random field, Image segmentation, Region adjacency graph, Region hierarchy graph.

Abstract:

Multi-class image classification has made significant advances in recent years through the combination of

local and global features. This paper proposes a novel approach called hierarchical conditional random field (HCRF) that explicitly models the region adjacency graph and the region hierarchy graph structure of an image. This allows us to set up a joint and hierarchical model of local and global discriminative methods that augments the conditional random field to a multi-layer model. The region hierarchy graph is based on a multi-scale watershed segmentation.

1 INTRODUCTION

In recent years, an increasingly popular way to solve various image labeling problems like object segmentation, stereo and single view reconstruction is to formulate them using image regions obtained from unsupervised segmentation algorithms. These methods are inspired by the observation that pixels constituting a particular region often have the same label. For instance, they may belong to the same object or may have the same surface orientation. This approach has the benefit that higher order features based on all the pixels constituting the region can be computed and used for classification. Further, it is also much faster, as inference now only needs to be performed over a small number of regions rather than over all the pixels in the image.

Classification of image regions in meaningful cat-

egories is a challenging task due to the ambiguities

inherent to visual data. On the other hand, image data

exhibit strong contextual dependencies in the form of

spatial interactions among components. It has been

shown that modeling these interactions is crucial to

achieve good classification accuracy (cf. Section 2).

Conditional random fields (CRFs) have been pro-

posed as a principled approach to modeling the in-

teractions between labels in such problems using the

tools of graphical models (Lafferty et al., 2001). A

conditional random field is a model that assigns a

joint probability distribution over labels conditioned

on the input, where the distribution respects the in-

dependence relations encoded in a graph. In general,

the labels are not assumed to be independent, nor are

the observations conditionally independent given the

labels, as assumed in generative models such as hid-

den Markov models. The CRF framework has already

been used to obtain promising results in a number

of domains where there are interactions between la-

bels, including tagging, parsing and information ex-

traction in natural language processing (McCallum

et al., 2003) and the modeling of spatial dependencies

in image interpretation (Kumar and Hebert, 2003).

One problem with the methods using low-level

features in image classification is that it is often diffi-

cult to generalize these methods to diverse image data

beyond the training set. More importantly, they lack

semantic image interpretation that is valuable in deter-

mining the class labeling. Contents such as the pres-

ence of people, sky, grass, etc., may be used as cues

for improving the classification performance obtained

by low-level features alone.

This paper presents a proposal of a CRF that simultaneously models the region adjacency graph and the region hierarchy graph structure. This allows us to set up a joint and hierarchical model of local and global discriminative methods that augments the CRF to a multi-layer model.

Ying Yang M., Förstner W. and Drauschke M. (2010). HIERARCHICAL CONDITIONAL RANDOM FIELD FOR MULTI-CLASS IMAGE CLASSIFICATION. In Proceedings of the International Conference on Computer Vision Theory and Applications, pages 464-469. DOI: 10.5220/0002877404640469. Copyright © SciTePress

The contributions of this paper are the following. First, we extend the classical one-layer CRF to a multi-layer CRF while restricting the model to second-order cliques. Second, this work shows how to integrate local and global information in a single model. The paper is organized as follows: Section 2 introduces related work. Section 3 gives the basic theory of CRFs. Section 4 presents the pairwise CRF model, which incorporates novel hierarchical pairwise potentials. Section 5 summarizes the paper.

2 RELATED WORK

There are many recent works on multi-class image

classification that address the combination of global

and local features (He et al., 2004; Yang et al., 2007;

Reynolds and Murphy, 2007; Gould et al., 2008; Toy-

oda and Hasegawa, 2008; Plath et al., 2009; Schnitzs-

pan et al., 2009). They showed promising results and

specifically improved performance compared to mak-

ing use of only one type of features - either local or

global.

(He et al., 2004) proposed a multi-layer CRF to account for global consistency and thereby showed improved performance. The authors introduce a global scene potential to assert consistency of local regions, which lets them benefit from integrating the context of a given scene. However, their model works with global priors set in advance and only uses learned local classifiers. Rather than relying on priors alone, in our work all parameters of the layers are trained jointly. (Yang et al.,

2007) proposed a model that combines appearance

over large contiguous regions with spatial informa-

tion and a global shape prior. The shape prior pro-

vides local context for certain types of objects (e.g.,

cars and airplanes), but not for regions representing

general objects (e.g., animal, building, sky and grass).

In contrast to this, we explicitly model hierarchical

graph structure of an image, capturing long range de-

pendencies. (Gould et al., 2008) proposed a method

for capturing global information from inter-class spa-

tial relationships and encoding it as a local feature.

(Toyoda and Hasegawa, 2008) proposed a general framework that explicitly models local and global information in a conditional random field. Their method resolves local ambiguities from a global perspective using global image information, which enables locally and globally consistent image recognition. However, their model needs to be trained on the whole training data simultaneously to obtain the global potentials, which results in high computational time.

Besides the above approaches, there are further popular methods that solve the multi-class classification problem using higher order conditional random fields (Kohli et al., 2007; Kohli et al., 2009; Ladicky et al., 2009). (Kohli et al., 2007) introduced a class of higher order clique potentials called the $P^n$ Potts model. Higher order clique potentials have the capability to model complex interactions of random variables, which enables them to better capture the rich statistics of natural scenes. The higher order potential functions proposed in (Kohli et al., 2009) take the form of the Robust $P^n$ model, which is more general than the $P^n$ Potts model. (Ladicky et al., 2009) generalized the Robust $P^n$ model to a $P^n$ based hierarchical CRF model. Inference in these models can be performed efficiently using graph cut based move making algorithms. However, the work on solving higher order potentials using move making algorithms has targeted particular classes of potential functions. Developing efficient large move making algorithms for exact and approximate minimization of general higher order energy functions is a difficult problem. Parameter learning for higher order CRFs is also a challenging problem.

Recent work by (Plath et al., 2009) couples local and global evidence in two ways: by constructing a tree-structured CRF on image regions at multiple scales, which largely follows the approach of (Reynolds and Murphy, 2007), and by using global image classification information. Thereby, (Plath et al., 2009) neglect direct local neighborhood dependencies, which our model learns jointly with long range dependencies. Most similar to ours is the work of (Schnitzspan et al., 2008), who explicitly attempt to combine the power of global feature-based approaches with the flexibility of local feature-based methods in one consistent framework. Briefly, (Schnitzspan et al., 2008) extend the classical one-layer CRF to a multi-layer CRF by restricting the pairwise potentials to a 4-neighborhood model and introducing higher-order potentials between different layers. There are several important differences with respect to our work. First, rather than the 4-neighborhood graph model of (Schnitzspan et al., 2008), we build a region adjacency graph based on a watershed image partition, which leads to an irregular graph structure. Second, we apply an irregular pyramid to represent different layers, while (Schnitzspan et al., 2008) use a regular pyramid structure. Finally, our model only exploits up to second-order cliques, which makes learning and inference much easier, whereas (Schnitzspan et al., 2008) introduce higher-order potentials to represent interactions between different layers.


3 PRELIMINARIES

We start by providing the basic notation used in the paper. Let the image $X$ be given. It is described by a set of regions with indices $i$ collected in the set $\mathcal{R} = \{i\}$. The regions are possibly overlapping and do not necessarily cover the whole image. Multi-class image classification is the task of assigning a class label $l_i \in \mathcal{C}$ with $\mathcal{C} = \{1, \dots, C\}$ to each region $i$.

Let $G = (\mathcal{R}, E)$ be the graph over regions, where $E$ is the set of (undirected) edges between adjacent regions. Note that, unlike standard CRF-based classification approaches that rely directly on pixels, e.g., (Shotton et al., 2006), this graph does not conform to a regular grid pattern, and, in general, each image will induce a different graph structure.

The conditional distribution of a classification for a given image has the general form

$$P(L \mid X) = \frac{1}{Z} \exp\left( \sum_{i \in \mathcal{R}} f_i(l_i \mid X) + \sum_{(i,j) \in \mathcal{N}} f_{ij}(l_i, l_j \mid X) \right) \tag{1}$$

where $L = \{l_i\}_{i \in \mathcal{R}}$ represents the labeling of all regions, $\mathcal{N}$ is the set of neighboring regions, and $Z$ is the partition function for normalization. The unary potential $f_i$ represents relationships between labels and local image features. The pairwise potential $f_{ij}$ represents relationships between labels of neighboring regions.

The unary potential $f_i$ measures the support of the image $X$ for label $l_i$ of region $i$. Various local image features are useful to characterize the regions. For example, the CRF in (Shotton et al., 2006) uses shape-texture, color, and location features. The pairwise potential $f_{ij}$ represents compatibility between neighboring labels given the image $X$. For example, if neighboring regions have similar image features, $f_{ij}$ favors the same class label for them. Conversely, if the regions have dissimilar features, they might be assigned different class labels. Thus, the pairwise potential $f_{ij}$ supports data-dependent smoothing.
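To make Eq. (1) concrete, the following minimal sketch evaluates the exponent for a labeling and normalizes by brute-force enumeration. It is only an illustration for tiny graphs: the function names, the toy potentials and the chain graph are assumptions made here and are not taken from the paper.

```python
import itertools
import math


def log_score(labels, unary, pairwise, edges):
    """Exponent of Eq. (1): sum of unary and pairwise potentials."""
    total = sum(unary[i][labels[i]] for i in range(len(labels)))
    total += sum(pairwise(labels[i], labels[j]) for i, j in edges)
    return total


def posterior(unary, pairwise, edges, num_classes):
    """Return {labeling: P(L | X)} by enumerating all labelings."""
    scores = {
        labeling: log_score(labeling, unary, pairwise, edges)
        for labeling in itertools.product(range(num_classes), repeat=len(unary))
    }
    # Z is the sum of the exponentiated scores over all labelings.
    log_z = math.log(sum(math.exp(s) for s in scores.values()))
    return {labeling: math.exp(s - log_z) for labeling, s in scores.items()}


def potts(label_i, label_j):
    """Hypothetical pairwise potential: penalize differing labels."""
    return 0.0 if label_i == label_j else -0.8


# Hypothetical toy problem: three regions in a chain, two classes.
unary = [[0.0, -1.0], [-0.2, -0.7], [-2.0, 0.0]]
edges = [(0, 1), (1, 2)]
probs = posterior(unary, potts, edges, num_classes=2)
best = max(probs, key=probs.get)
```

Enumeration grows exponentially with the number of regions, so this only serves to check the definition on small examples.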

4 HCRF: HIERARCHICAL CONDITIONAL RANDOM FIELD

While global detectors have been shown to achieve impressive results in image classification for unoccluded image scenes, part-based approaches tend to be more successful in dealing with partial occlusion.

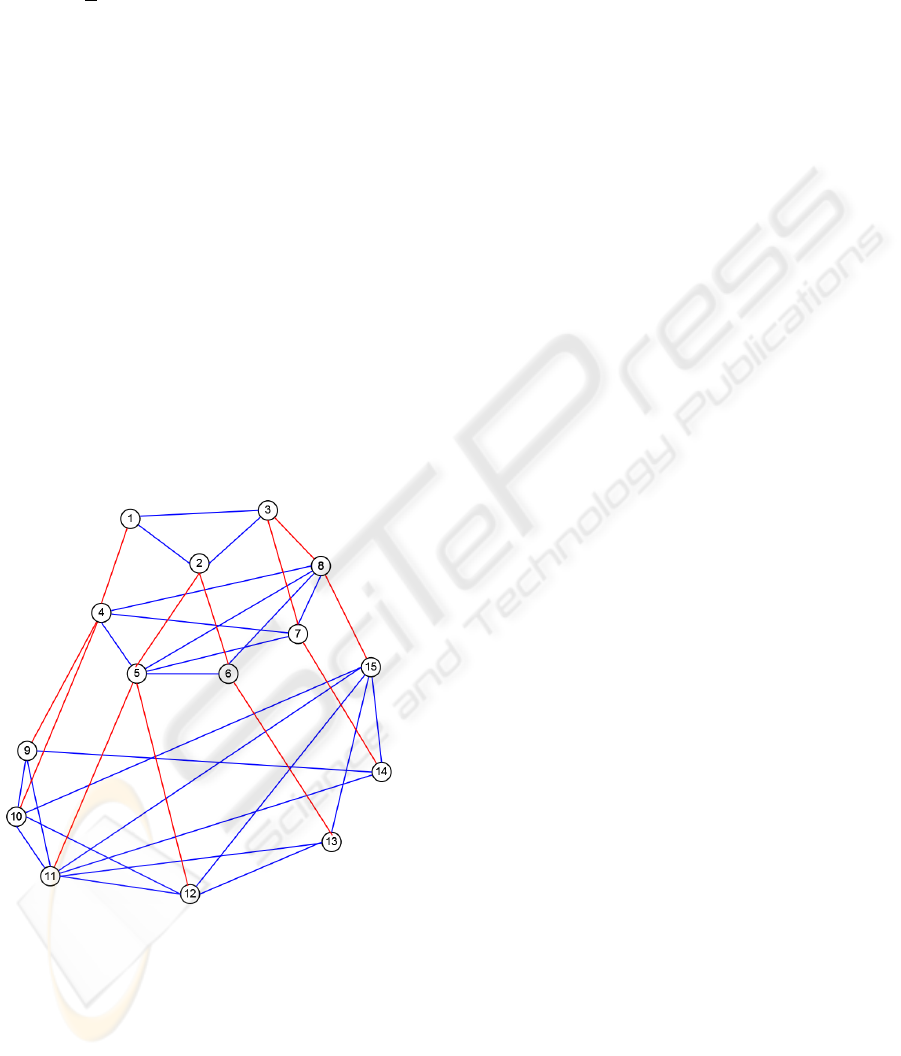

Figure 1: Simulated segmentations at three scales

(left), with corresponding region hierarchical graph (right)

(Reynolds and Murphy, 2007). Scale 1 is at the bottom,

scale 3 at the top. Same color and number indicate same

region in each scale.

Since adjacent regions in images are not independent of each other, a CRF models these dependencies directly by introducing pairwise potentials. However, a standard CRF works on a very local level, and long range dependencies are not addressed explicitly in simple CRF models. Therefore, our approach sets up a joint and hierarchical model of local and global information which explicitly models the region adjacency graph (RAG) and the region hierarchy graph (RHG), which is derived from a multi-scale image segmentation.

4.1 Proposed Model

A standard CRF acts on a local level and represents a single view on the data, typically represented with unary and pairwise potentials. In order to overcome those local restrictions, we analyze the image at multiple scales $s \in \{1, \dots, S\}$ with associated scale-specific unary potentials $f^s_i$ and pairwise potentials $f^s_{ij}$, to enhance the model by evidence aggregation from the local to the global level. Furthermore, we integrate pairwise potentials $g^s_{ik}$ to take the hierarchical structure of the regions into account, i.e. if $i \in \mathcal{R}^s$ then $k \in \mathcal{R}^{s+1}$. In Figure 1, we present a segmented image at three scales and the corresponding connectivity between the regions of successive scales. We see that regions that are too small to be classified accurately can inherit the labels of their parents. For example, regions 11 and 12 may be too small to classify reliably in isolation, but when they inherit a message from their parent region 5, they may be correctly classified as 'cow'.

The proposed method explicitly models region adjacency information within each scale or layer with $f^s_{ij}$ and region hierarchy information between the scales with $g^s_{ik}$, using global image features as well as local ones for observations in the model.

VISAPP 2010 - International Conference on Computer Vision Theory and Applications


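It has a distribution of the form

$$P(L \mid X) = \frac{1}{Z} \exp\left( \sum_{s=1}^{S} \sum_{i \in \mathcal{R}^s} f^s_i(l_i \mid X) + \sum_{s=1}^{S} \sum_{(i,j) \in \mathcal{N}^s} f^s_{ij}(l_i, l_j \mid X) + \sum_{s=1}^{S-1} \sum_{(i,k) \in \mathcal{H}^s} g^s_{ik}(l_i, l_k \mid X) \right) \tag{2}$$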

where $\mathcal{R}^s$ is the index set of the regions at scale $s$, $\mathcal{N}^s$ is the set of neighboring regions at scale $s$, and $\mathcal{H}^s$ is the set of parent-child relations between regions in neighboring scales $s$ and $s + 1$. Note that we use the same symbol $Z$ for the partition function as in the standard CRF, although its value is different. We denote this model as Hierarchical Conditional Random Field (HCRF).
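As an illustration of how the three sums in Eq. (2) combine, the sketch below evaluates the exponent for one labeling of a two-scale toy hierarchy. The data layout (dictionaries keyed by region id and by scale), the region names and the numeric potentials are assumptions chosen for this example only.

```python
def hcrf_log_score(labels, unary, neighbor_edges, hier_edges, f_pair, g_pair):
    """Exponent of Eq. (2) for one labeling.

    labels:         dict region id -> class label (regions of all scales)
    unary:          dict region id -> list of per-class unary potentials
    neighbor_edges: dict scale s -> list of (i, j) pairs in N^s
    hier_edges:     dict scale s -> list of (i, k) pairs in H^s,
                    with i at scale s and k at scale s + 1
    """
    score = sum(unary[i][labels[i]] for i in labels)
    for s, edges in neighbor_edges.items():
        score += sum(f_pair(s, labels[i], labels[j]) for i, j in edges)
    for s, edges in hier_edges.items():
        score += sum(g_pair(s, labels[i], labels[k]) for i, k in edges)
    return score


# Hypothetical toy hierarchy: regions "a" and "b" at scale 1 share parent "p".
unary = {"a": [0.0, -1.0], "b": [-1.0, 0.0], "p": [0.0, -0.5]}
neighbor_edges = {1: [("a", "b")], 2: []}
hier_edges = {1: [("a", "p"), ("b", "p")]}


def f_pair(s, label_i, label_j):
    """Hypothetical within-scale potential."""
    return 0.0 if label_i == label_j else -1.0


def g_pair(s, label_i, label_k):
    """Hypothetical across-scale potential."""
    return 0.0 if label_i == label_k else -2.0


mixed = hcrf_log_score({"a": 0, "b": 1, "p": 0}, unary,
                       neighbor_edges, hier_edges, f_pair, g_pair)
uniform = hcrf_log_score({"a": 0, "b": 0, "p": 0}, unary,
                         neighbor_edges, hier_edges, f_pair, g_pair)
```

In this toy setting the labeling that agrees with the parent scores higher, even though region "b" on its own prefers class 1.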

The proposed full graphical model is illustrated in

Figure 2. Note that this model only exploits up to

second-order cliques, which makes learning and in-

ference much easier. This model combines different

views on the data by scale-specific potentials and the

hierarchical structure accounting for longer range de-

pendencies.

Figure 2: Illustration of the HCRF model architecture. The numbers of the nodes correspond to the regions in Figure 1. The blue edges between the nodes represent the neighborhoods at one scale, the red edges represent the hierarchical relation between regions.

4.1.1 Unary Potentials

The local unary potentials $f^s_i$ independently predict the label $l_i$ based on the image $X$:

$$f^s_i(l_i \mid X) = \log P^s(l_i \mid X). \tag{3}$$

The label distribution $P^s(l_i \mid X)$ is calculated by using a classifier. We employ the multiple logistic regression model,

$$P^s(l_i = c \mid u^s_{ic}) = \frac{\exp(u^s_{ic})}{\sum_{c'} \exp(u^s_{ic'})}, \tag{4}$$

where $u^s_{ic} = w^{s\top}_c h^s_i$, $w^s_c = [w^s_0, w^s_1, \dots, w^s_M]$ are the $M + 1$ unknown parameters per class, and the feature vector $h^s_i = [1, h^s_{i1}, \dots, h^s_{im}, \dots, h^s_{iM}]^\top$ contains $M$ features for each region $i$ derived from the image $X$. The weights $w^s = \{w^s_c\}_{c=1,\dots,C}$ are the model parameters.
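Eqs. (3) and (4) can be sketched in a few lines. The function below is a hypothetical helper, not the authors' implementation: it prepends the constant 1 to the region features, forms the linear scores $u^s_{ic}$ and returns the log of the normalized class probabilities. Subtracting the maximum score before exponentiating is a standard numerical safeguard added here.

```python
import math


def unary_potentials(weights, features):
    """Return f_i^s(l_i = c | X) = log P^s(l_i = c | X) for every class c.

    weights:  list of C weight vectors w_c^s, each of length M + 1
    features: the M region features [h_i1, ..., h_iM]
    """
    h = [1.0] + list(features)  # h_i^s = [1, h_i1, ..., h_iM]
    u = [sum(w * x for w, x in zip(w_c, h)) for w_c in weights]
    u_max = max(u)  # shift scores for numerical stability
    log_norm = u_max + math.log(sum(math.exp(v - u_max) for v in u))
    return [v - log_norm for v in u]


# Hypothetical example: two classes, one feature.
log_p = unary_potentials([[0.0, 1.0], [0.0, -1.0]], [2.0])
# With all-zero weights every class is equally likely.
log_uniform = unary_potentials([[0.0, 0.0]] * 3, [5.0])
```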

4.1.2 Pairwise Potentials

The local pairwise potentials $f^s_{ij}$ describe category compatibility between neighboring labels $l_i$ and $l_j$ given the image $X$, which take the form of a contrast sensitive Potts model:

$$f^s_{ij}(l_i, l_j \mid X) = v^{s\top} \mu^s_{ij} \, \delta(l_i \neq l_j) \tag{5}$$

where the feature function $\mu^s_{ij}$ relates to the pair of regions $(i, j)$, and the weights $v^s$ again are the model parameters.

The hierarchical pairwise potentials $g^s_{ik}$ also describe category compatibility between hierarchically neighboring labels $l_i$ and $l_k$ given the image $X$, which take the form of a contrast sensitive Potts model:

$$g^s_{ik}(l_i, l_k \mid X) = r^{s\top} \eta^s_{ik} \, \delta(l_i \neq l_k) \tag{6}$$

where the feature function $\eta^s_{ik}$ relates to the hierarchical pairs of regions $(i, k)$, and the vector $r^s$ contains the model parameters. We denote the unknown HCRF model parameters by $\theta = \{w^s, v^s, r^s\}_{s=1,\dots,S}$.
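Eqs. (5) and (6) share the same functional form, so one small helper can evaluate both. The sketch below is illustrative only; the weight and feature values are made up. Because the potentials are added in the exponent of Eq. (2), a negative value of the inner product lowers the probability of labelings in which the two regions disagree.

```python
def potts_potential(weights, feature_vec, label_a, label_b):
    """Contrast sensitive Potts term: weights^T feature_vec * delta(a != b)."""
    if label_a == label_b:
        return 0.0  # the indicator delta(l_a != l_b) is zero
    return sum(w * x for w, x in zip(weights, feature_vec))


# Hypothetical weights v^s and feature vector mu_ij^s.
v = [-1.0, -2.0]
mu_ij = [1.0, 0.5]
same = potts_potential(v, mu_ij, 2, 2)
different = potts_potential(v, mu_ij, 0, 1)
```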

4.2 Generating Multi-scale Segmentations

We now explain how we realized the multi-scale im-

age segmentation and how we generate the region

adjacency graphs (RAG) and region hierarchy graph

(RHG).

We determine the image segmentation from the

watershed boundaries on the image’s gradient magni-

tude. Our approach uses the Gaussian scale-space for

obtaining regions at several scales. The segmentation

procedure has been described in detail by (Drauschke

et al., 2006). For each scale s, we convolve each

image channel with a Gaussian filter and combine

the channels when computing the gradient magnitude.

Since the watershed algorithm is inclined to produce

over-segmentation, we suppress many gradient min-

ima by resetting the gradient value at positions where


the gradient is below the median of the gradient magnitude. This removes those minima, most of which are caused by noise. As a result of the watershed algorithm, we obtain a complete partitioning of the image for each scale $s$, where every image pixel belongs to exactly one region. Additionally, we determine the scale-specific RAGs on each image partition.
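Given a complete partition stored as a label map, a RAG can be read off by comparing each pixel with its right and lower neighbor. The following sketch assumes 4-connectivity and a list-of-lists label map; both are illustrative choices, since the text does not specify how adjacency is determined.

```python
def region_adjacency_graph(label_map):
    """Return undirected edges (i, j), i < j, between regions that share
    a border under 4-connectivity (an assumption of this sketch)."""
    edges = set()
    rows, cols = len(label_map), len(label_map[0])
    for r in range(rows):
        for c in range(cols):
            a = label_map[r][c]
            # Checking the right and lower neighbor visits every pixel pair once.
            for dr, dc in ((0, 1), (1, 0)):
                rr, cc = r + dr, c + dc
                if rr < rows and cc < cols:
                    b = label_map[rr][cc]
                    if a != b:
                        edges.add((min(a, b), max(a, b)))
    return edges


# Hypothetical partitions with three regions each.
rag = region_adjacency_graph([[1, 1, 2],
                              [1, 3, 2],
                              [3, 3, 2]])
strip = region_adjacency_graph([[1, 2, 3]])
```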

The development of the regions over several scales is used to model the RHG. (Drauschke, 2009) defined an RHG with directed edges between regions of successive scales (starting at the lower scale). Furthermore, the relation is defined over the maximal overlap of the regions. This definition of the region hierarchy leads to a simple RHG. If the edges were undirected, the RHG would consist only of trees.
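The maximal-overlap rule can be sketched as follows: every region at the lower scale is linked to the region at the next scale with which it shares the most pixels. The label maps, region ids and the tie-breaking rule (the first candidate encountered wins) are assumptions of this example, not details given in the text.

```python
from collections import Counter


def region_hierarchy_edges(fine_labels, coarse_labels):
    """Link each region of the lower scale to the region of the next scale
    with maximal pixel overlap; returns sorted (child, parent) pairs."""
    overlap = Counter()
    for fine_row, coarse_row in zip(fine_labels, coarse_labels):
        for i, k in zip(fine_row, coarse_row):
            overlap[(i, k)] += 1
    parent = {}
    for (i, k), count in overlap.items():
        # Keep the candidate parent with the largest overlap seen so far.
        if i not in parent or count > overlap[(i, parent[i])]:
            parent[i] = k
    return sorted(parent.items())


# Hypothetical label maps of the same image at two successive scales.
fine = [[1, 1, 2, 2],
        [3, 3, 2, 2]]
coarse = [[5, 5, 5, 6],
          [5, 5, 6, 6]]
rhg = region_hierarchy_edges(fine, coarse)
```

Since every child receives exactly one parent, the resulting edges form trees when read as undirected, in line with the remark above.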

4.3 Parameter Learning and Inference

For parameter estimation we adopt the piecewise training approach of (Sutton and McCallum, 2005), which assumes the parameters of the unary potentials to be conditionally independent of the pairwise potentials' parameters and thus allows the unary and the pairwise parameters to be learned separately. Note that this no longer guarantees finding the optimal parameter setting for $\theta$. In fact, the parameters are optimized to maximize a lower bound of the full CRF likelihood function by splitting the model into disjoint node pairs and integrating statistics over all of these pairs. Prior to learning the pairwise potential models, we train the parameters $\{w^s\}_{s=1,\dots,S}$ for the unary potentials. Then, the pairwise potentials' parameter sets $\{v^s\}_{s=1,\dots,S}$ and $\{r^s\}_{s=1,\dots,S}$ are learned jointly in a maximum likelihood setting with stochastic meta descent (Vishwanathan et al., 2006). We also assume a Gaussian prior on the linear weights to avoid overfitting (Vishwanathan et al., 2006).

We use max-product propagation inference (Pearl,

1988) to estimate the max-marginal over the labels for

each region, and assign each region the label which

maximizes the joint assignment to the image.
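The following sketch shows max-product message passing in the log domain on a tree, where it yields the exact maximizing labeling. It is a simplified stand-in: the full HCRF graph also contains neighborhood edges and therefore loops, for which max-product propagation is in general only approximate. The region numbers echo Figure 1, but the potentials are invented for illustration.

```python
def max_product_tree(unary, children, root, pair_score):
    """Exact MAP labeling on a tree via max-product in the log domain.

    unary:      dict node -> list of per-class log-potentials
    children:   dict node -> list of child nodes
    pair_score: function (label_parent, label_child) -> log-potential
    """
    num_classes = len(unary[root])
    backpointer = {}  # child -> best child label for each parent label

    def upward(node):
        belief = list(unary[node])
        for child in children.get(node, []):
            child_belief = upward(child)
            best_for_parent = []
            for lp in range(num_classes):
                scores = [child_belief[lc] + pair_score(lp, lc)
                          for lc in range(num_classes)]
                lc_best = max(range(num_classes), key=scores.__getitem__)
                best_for_parent.append(lc_best)
                belief[lp] += scores[lc_best]  # message from child to parent
            backpointer[child] = best_for_parent
        return belief

    root_belief = upward(root)
    labels = {root: max(range(num_classes), key=root_belief.__getitem__)}
    stack = [root]
    while stack:  # downward pass: read off the maximizing child labels
        node = stack.pop()
        for child in children.get(node, []):
            labels[child] = backpointer[child][labels[node]]
            stack.append(child)
    return labels


# Hypothetical scores: the small regions 11 and 12 weakly prefer class 0,
# their parent region 5 strongly prefers class 1.
unary = {5: [-3.0, 0.0], 11: [-0.1, -0.3], 12: [-0.2, -0.4]}
children = {5: [11, 12]}
coupled = max_product_tree(unary, children, 5,
                           lambda lp, lc: 0.0 if lp == lc else -1.0)
independent = max_product_tree(unary, children, 5, lambda lp, lc: 0.0)
```

With the hierarchical coupling switched on, the two small regions adopt the label of their parent, which mirrors the label inheritance described for regions 11 and 12 in Section 4.1.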

4.4 Feature Functions

To complete the details of our method, we now de-

scribe how the feature functions are constructed from

low-level descriptors. They link the potentials to the

actual image evidence and account for local neighbor-

hood and long range dependencies.

The unary feature function $h^s_i$ is a function of a predefined description vector for each region $i$ at scale $s$.

Local pairwise potentials are responsible for modeling local dependencies by supporting or inhibiting label propagation to the neighboring regions. Therefore, we define the local pairwise function $\mu^s_{ij}$ as

$$\mu^s_{ij} = \left[ 1, \{ |h^s_{im} - h^s_{jm}| \} \right]^\top \tag{7}$$

Here, we extend the feature differences by an offset (the leading 1), which makes it possible to eliminate small isolated regions.

Hierarchical pairwise potentials act as a link across scales, facilitating propagation of information in our model. Therefore, we define the hierarchical pairwise function $\eta^s_{ik}$ as

$$\eta^s_{ik} = \left[ 1, \{ |h^s_{im} - h^{s+1}_{km}| \} \right]^\top \tag{8}$$

where region $i$ is at scale $s$ and region $k$ is at scale $s + 1$.
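Eqs. (7) and (8) differ only in which two regions are compared, so a single helper can build both feature vectors. In this sketch the inputs are assumed to be the $M$ region features without the constant entry; the numeric values are invented.

```python
def pairwise_features(h_a, h_b):
    """Return [1, |h_a1 - h_b1|, ..., |h_aM - h_bM|] as in Eqs. (7) and (8).

    h_a, h_b: the M features of the two regions, without the constant 1.
    """
    return [1.0] + [abs(x - y) for x, y in zip(h_a, h_b)]


# Hypothetical features: regions i and j at scale s, parent k at scale s + 1.
h_i, h_j, h_k = [0.2, 0.5], [0.1, 0.9], [0.2, 0.5]
mu_ij = pairwise_features(h_i, h_j)   # local pair, Eq. (7)
eta_ik = pairwise_features(h_i, h_k)  # hierarchical pair, Eq. (8)
```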

In the following, we give an example of how we build the description vector for each region mentioned above in the context of building facade interpretation. For each region $i$ at the highest resolution, say, at the scale with index 1, we compute a 75-dimensional description vector $\phi^1_i$ incorporating the region's area and perimeter, its compactness and its aspect ratio. For representing spectral information of the region, we use the same 12 color features as (Barnard et al., 2003): the mean and the standard deviation of the RGB and the Lab color spaces. We also include features derived from the gradient histograms as proposed by (Korč and Förstner, 2008). Additionally, we use texture features derived from the Walsh transform (Petrou and Bosdogianni, 1999; Lazaridis and Petrou, 2006). Other features are derived from a generalization of the region's border and represent parallelism or orthogonality of the border segments, or they are descriptors of the Fourier transform.
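As a rough illustration of the four geometric entries of the description vector, the sketch below computes area, perimeter, compactness and aspect ratio from a label map. The concrete definitions are assumptions made for this example, since the text does not state them: the perimeter counts exposed pixel edges, compactness is taken as $4\pi A / P^2$, and the aspect ratio is the width of the bounding box divided by its height. The region is assumed to occur in the label map.

```python
import math


def shape_features(label_map, region):
    """Area, perimeter, compactness and aspect ratio of one region.
    All four definitions are assumptions of this sketch."""
    rows, cols = len(label_map), len(label_map[0])
    pixels = [(r, c) for r in range(rows) for c in range(cols)
              if label_map[r][c] == region]
    area = len(pixels)
    perimeter = 0
    for r, c in pixels:
        for dr, dc in ((-1, 0), (1, 0), (0, -1), (0, 1)):
            rr, cc = r + dr, c + dc
            inside = 0 <= rr < rows and 0 <= cc < cols
            # A pixel edge is exposed if it faces the image border
            # or a pixel of another region.
            if not inside or label_map[rr][cc] != region:
                perimeter += 1
    height = max(r for r, _ in pixels) - min(r for r, _ in pixels) + 1
    width = max(c for _, c in pixels) - min(c for _, c in pixels) + 1
    return {
        "area": area,
        "perimeter": perimeter,
        "compactness": 4.0 * math.pi * area / perimeter ** 2,
        "aspect_ratio": width / height,
    }


# Hypothetical label map: region 1 is a 2 x 3 rectangle.
features = shape_features([[1, 1, 1, 2],
                           [1, 1, 1, 2]], region=1)
```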

We define this description vector to be the unary feature function $h^1_i$ at scale 1. For the higher scales $s$, we compute the description vector $\phi^s_i$ and the unary feature function $h^s_i$ using the corresponding regions at lower scales.

We have completed the multi-scale image segmentation and feature extraction on the eTRIMS database (see footnote 1). Based on the segmented regions, we have generated the RAG and RHG. We are currently working on learning and inference.

5 SUMMARY

In this paper, we have presented a novel approach called hierarchical conditional random field (HCRF). The proposed method explicitly models region adjacency information within each scale and region hierarchy information between the scales, using global image features as well as local ones for observations in the model. The model only exploits up to second-order cliques, which makes learning and inference much easier than in models with higher-order potentials. It combines different views on the data by layer-specific potentials and the hierarchical structure accounting for longer range dependencies.

1 http://www.ipb.uni-bonn.de/projects/etrims/

REFERENCES

Barnard, K., Duygulu, P., Freitas, N. D., Forsyth, D., Blei,

D., and Jordan, M. (2003). Matching Words and Pic-

tures. In JMLR, volume 3, pages 1107–1135.

Drauschke, M. (2009). An Irregular Pyramid for Multi-

scale Analysis of Objects and their Parts. In 7th IAPR-

TC-15 Workshop on Graph-based Representations in

Pattern Recognition, pages 293–303.

Drauschke, M., Schuster, H.-F., and Förstner, W. (2006). Detectability of Buildings in Aerial Images over Scale Space. In PCV’06, IAPRS 36 (3), pages 7–12.

Gould, S., Rodgers, J., Cohen, D., Elidan, G., and Koller,

D. (2008). Multi-Class Segmentation with Relative

Location Prior. IJCV, 80(3):300–316.

He, X., Zemel, R., and Carreira-Perpiñán, M. (2004). Multiscale Conditional Random Fields for Image Labeling. In CVPR, pages 695–702.

Kohli, P., Kumar, M. P., and Torr, P. (2007). P3 & Be-

yond: Solving Energies with Higher Order Cliques.

In CVPR, pages 1–8.

Kohli, P., Ladicky, L., and Torr, P. (2009). Robust Higher

Order Potentials for Enforcing Label Consistency.

IJCV, 82(3):302–324.

Korč, F. and Förstner, W. (2008). Interpreting Terrestrial Images of Urban Scenes using Discriminative Random Fields. In 21st ISPRS Congress, IAPRS 37 (B3a), pages 291–296.

Kumar, S. and Hebert, M. (2003). Discriminative Random

Fields: A Discriminative Framework for Contextual

Interaction in Classification. In ICCV, pages 1150–

1157.

Ladicky, L., Russell, C., and Kohli, P. (2009). Associative

Hierarchical CRFs for Object Class Image Segmenta-

tion. In ICCV, pages 1–8.

Lafferty, J., McCallum, A., and Pereira, F. (2001). Condi-

tional Random Fields: Probabilistic Models for Seg-

menting and Labeling Sequence Data. In ICML, pages

282–289.

Lazaridis, G. and Petrou, M. (2006). Image Registra-

tion using the Walsh Transform. Image Processing,

15(8):2343–2357.

McCallum, A., Rohanimanesh, K., and Sutton, C. (2003). Dynamic Conditional Random Fields for Jointly Labeling Multiple Sequences. In NIPS Workshop on Syntax, Semantics and Statistics.

Pearl, J. (1988). Probabilistic Reasoning in Intelligent Sys-

tems. Morgan Kaufmann.

Petrou, M. and Bosdogianni, P. (1999). Image Processing:

The Fundamentals. Wiley.

Plath, N., Toussaint, M., and Nakajima, S. (2009). Multi-

Class Image Segmentation using Conditional Random

Fields and Global Classification. In ICML, pages 817–

824.

Reynolds, J. and Murphy, K. (2007). Figure-ground seg-

mentation using a hierarchical conditional random

field. In 4th Canadian Conference on Computer and

Robot Vision, pages 175–182.

Schnitzspan, P., Fritz, M., Roth, S., and Schiele, B.

(2009). Discriminative Structure Learning of Hier-

archical Representations for Object Detection. In

CVPR, pages 2238–2245.

Schnitzspan, P., Fritz, M., and Schiele, B. (2008). Hier-

archical Support Vector Random Fields: Joint Train-

ing to Combine Local and Global Features. In ECCV,

pages 527–540.

Shotton, J., Winn, J., Rother, C., and Criminisi, A. (2006). TextonBoost: Joint Appearance, Shape and Context Modeling for Multi-Class Object Recognition and Segmentation. In ECCV, pages 1–15.

Sutton, C. and McCallum, A. (2005). Piecewise Training for Undirected Models. In 21st Ann. Conf. on Uncertainty in AI, pages 568–575.

Toyoda, T. and Hasegawa, O. (2008). Random Field Model

for Integration of Local Information and Global Infor-

mation. PAMI, 30(8):1483–1489.

Vishwanathan, S. V. N., Schraudolph, N. N., Schmidt,

M. W., and Murphy, K. P. (2006). Accelerated Train-

ing of Conditional Random Fields with Stochastic

Gradient Methods. In ICML, pages 969–976.

Yang, L., Meer, P., and Foran, D. J. (2007). Multiple Class

Segmentation using a Unified Framework over Mean-

Shift Patches. In CVPR, pages 1–8.
