ROBOT NAVIGATION MODALITIES

Ray Jarvis

Intelligent Robotics Research Centre, Monash University, Australia

Keywords: Navigation, Modalities.

Abstract: Whilst navigation (robotic or otherwise) consists simply of traversing from a starting point to a goal, there

are a plethora of conditions, states of knowledge and functional intentions which dictate how best to execute

this process in a manageable, reliable, safe and efficient way. This position paper addresses the broad issues

of how a continuum of choices from pure manual or teleoperation control through to fully autonomous

operation can be laid out and then selected from, taking into account the variety of factors listed above and

the richness of live sensory data available to describe the operational environment and the location of the

robot vehicle within it.

1 INTRODUCTION

The dominance of ‘Simultaneous Localisation and

Mapping’ (SLAM) (Leonard, 1991) in recent

publications on robot navigation can give the false

impression that this approach is always the best way

of carrying out this task, largely ignoring the fact

that there are very few situations where such an

approach is either necessary or even feasible, given

normal expectations of prior knowledge and

functional/safety requirements.

As a simple counter example, why would one

want to carry out complex SLAM style navigation in

a building for which exact plans are available?

Alternatively, if a rich database concerning the

geometry and appearance of a reasonably static

environment can be constructed off-line with

accuracy and convenience and this need only be

done once, why not just use this 3D colour rendered

map data for continuing robot operations on a day to

day basis ever more? In the other extreme, in highly

complex and dynamic environments with high risk

potentials, such as robotic bushfire fighting

operations, why not navigate a robotic vehicle under

human teleoperation control to allow the full

judgement of human reasoning to apply throughout

whilst the operator is in a safe and comfortable

place?

There are many other examples between the

extremes described above, each requiring its own

appropriate navigation modality. In what follows the

essentials of robot navigation will be described,

various navigational modalities outlined and a

number of case studies presented for illustration

purposes. Discussion and conclusions then follow.

2 ROBOT NAVIGATION

ESSENTIALS

Six sub-system requirements govern the task of

robot navigation:

(a) Localisation (Jarvis, 1993) concerns the

fixing of the position and pose of the robot vehicle

within its working environment, whether by

following the pre-laid lines on the floor, detecting

beacons or interpreting natural landmarks (or

general environmental metrics and/or appearances).

The less preparation required the better but not at the

expense of overall efficiency, accuracy and safety.

The recent tendency is to try and use on-board

acquired sensory data of the operational

environment with minimal purposeful marking up of

it by way of specific signs.

(b) Environmental mapping concerns the

provision or acquisition of data specifying the

occupancy, geometry, topology or essential nature of

the physical operational environment and sometimes

also the identification of relevant objects within it.

Such a map may assist localization but must also

provide the basis for obstacle avoidance and path

planning.

(c) Path planning (Jarvis, 1994) concerns the

determination of efficient collision-free and safe

295

Jarvis R. (2008).

ROBOT NAVIGATION MODALITIES.

In Proceedings of the Fifth International Conference on Informatics in Control, Automation and Robotics - RA, pages 295-300

DOI: 10.5220/0001505202950300

Copyright

c

SciTePress

paths from start to goal locations or, in some cases, a

coverage pattern of the accessible environment. In

many cases paths can only be constructed

incrementally as environment mapping data is

acquired from on-board sensors (possibly indicating

the location of previously unknown obstacles), if not

provided beforehand.

(d) Motion Control involves the mechanistic

operation of wheels, legs, propellers etc. to drive the

robot along the planned path.

(e) Communication amongst sensors, operator,

computational resources and mechanism

components is also essential. The distribution (and

redundancy) of these provisions on-board and off

(where there might be a remote base station) are

critical to efficiency, timeliness, safety and

reliability.

(f) Function refers to the intended operation,

whether it be directing water at a fire, picking up

suspicious baggage or apprehending a terrorist, or

some other requirement. This aspect is often

neglected or regarded as a “do last” task in the

system design process but should actually be

considered first, not only because the type of

vehicle, its sensory capabilities and its reliability are

dependent on its function but also because the

navigation modality may be less critical than the

manipulation (or some other task required) when the

goal is reached. For example, if the task requires the

close supervision by a remote operator (e.g. in

defusing a bomb) then a sophisticated autonomous

navigation strategy may not be justifiable, even if

possible.

Just how the above six aspects are sensibly

integrated is critically dependent on the functional

requirements, the available prior knowledge of the

environment, the dynamics of the situation and, not

least, on human risk related considerations.

3 NAVIGATION MODALITIES

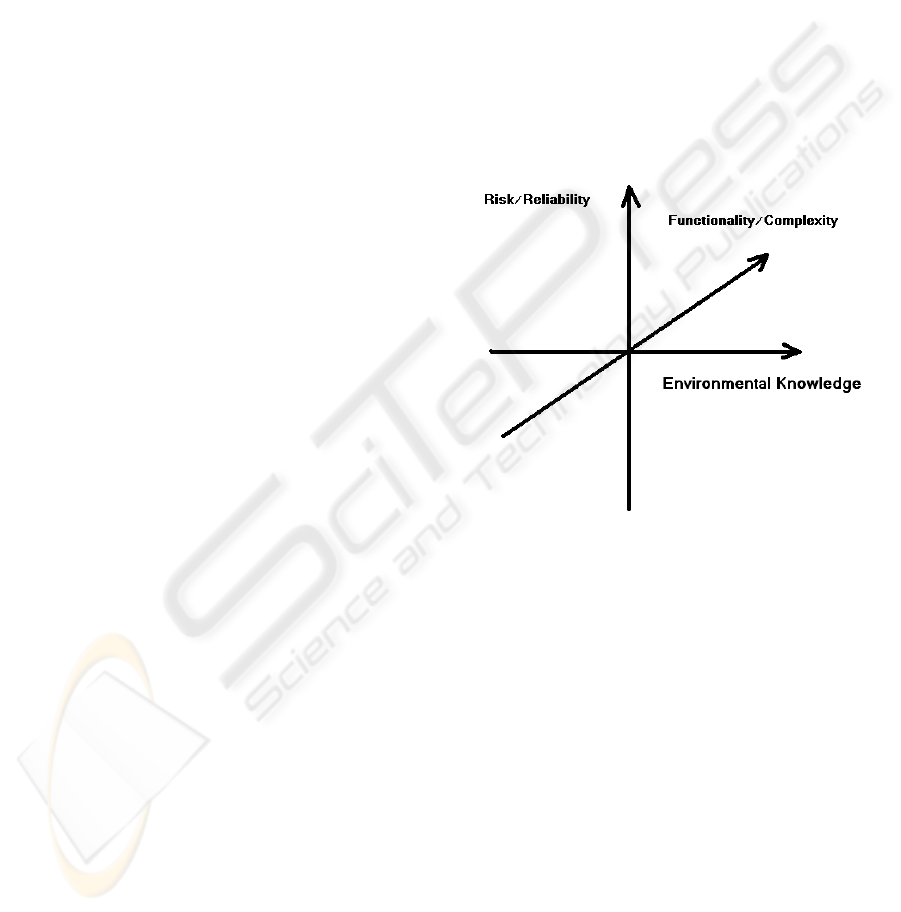

For the sake of structure, three dimensions of the

robot navigation modality choice process can be

identified (See Figure 1):

The first is that of degree of availability of prior

knowledge (e.g. maps, views, 3D geometry) or the

ease with which this can be acquired off-line (e.g.

via laser scanners, stereo views, appearance

mapping, etc.). When environmental knowledge

suitable for supporting robot navigation

(localisation, obstacle avoidance/path planning and

function) is readily available, it makes good sense to

use it as it is likely that such an approach would lead

to better accuracy, reliability and efficiency than

learning such knowledge using on-board sensors

alone.

The second dimension is that of the complexity of

the defined function and whether human agencies

would be required to handle them, whether or not

the pure navigational aspects could be automated to

some degree. For example, if the complex operation

of defusing a bomb via delicate teleoperated

manipulation with rich sensory feedback needs the

application of expert human skill, the necessary

attendance of the expert suggests that the navigation

may as well be by teleoperation also, unless this part

of the overall task is particularly tedious or time

consuming.

Figure 1: Robot Navigation Modality Choice Factors.

The third dimension is that of risk and reliability

requirement factors. For example, having a robot

clean a carpet or mow a lawn fully autonomously to

obviate human tedium makes good sense, since

degrees of unreliability and inefficiency can be

tolerated and very little human risk is involved. On

the other hand, using the bomb defusing example

again, the remoteness of the operator for risk

minimisation is the essential factor and the question

of modality of navigation may be considered

relatively irrelevant, so a flexible mixture of

automation and direct teleoperation may be suitable

for this application. Guiding a fire tanker to a fire

fighting location too hazardous for humans to attend

should perhaps be handled entirely by rich sensor

feedback supported teleoperation, since the safety of

other personnel operating in the vicinity may be

more severely jeopardised if a fully autonomous

system were used, especially as the situation is likely

ICINCO 2008 - International Conference on Informatics in Control, Automation and Robotics

296

to be subject to severe dynamic variation with a

moving fire front, changing wind conditions and the

extent of other fire fighting vehicle and personnel

deployment.

4 FLEXIBLE APPROACH TO

ROBOT NAVIGATION

Rather than accepting one rigidly defined robot

navigation modality along the spectrum from pure

teleoperation to fully autonomous operation, it

makes sense to devise ways in which these extremes

can be moved between gracefully with smooth

variation of the degree of human intervention

applied in a hybrid strategy where levels of

autonomy can be adjusted for particular tasks and

adapt to changing conditions over time. A good

example of this approach is where a disabled person

is using a wheelchair in complex environments with

the aid of robotically inspired sensory and control

mechanisms (Jarvis, 2001). The disabled occupant

may be permitted a user-adaptive degree of control

of the wheelchair within an envelope of safety

provided by the robotic instrumentation which

adjusts the degree of intervention to the capability of

the user to handle the situation over variations of

physical reflex, poor vision, degrees of fatigue etc.

Using a three level control strategy (see Figure 2)

nicely complements the notion of flexible navigation

modality selection. The lowest level can be purely

reaction based collision avoidance through stopping

or minor trajectory adjustments using close range

obstacle sensing as a trigger. The second level can

be thought of as “local guidance” which indicates a

safe passage over a limited range of movement,

generally in the intended direction. The top level is

global and includes complete path planning and

control transition strategies. In the robotic

wheelchair example, the human occupant provides

the top level strategy, the second level provides the

user with steering advice and the lowest level simply

avoids collisions.

In the more general robot navigation situation, the

top level could drift between fully human control via

teleoperation and fully autonomous operation, with

the lower two levels playing their roles in supporting

the global strategy. For example, a teleoperator, like

the wheelchair user, can direct the activities of the

robot using the advice of the second level and

accepting the collision avoidance reaction level as a

safety precaution should his attention stray.

Figure 2: Multi-level Control Heirarchy.

5 CASE STUDIES

The user adaptive robotic wheelchair (Jarvis, 2001)

described above is shown in Figure 3. The user can

indicate navigation intention using human gaze

detection but near collisions impose increasing

degrees of instrument driven navigation

intervention, with control being handed back to the

user gradually as near collision statistics improve.

The main environment sensor is a Erwin Sick laser

range finder. GPS is also provided for guidance as a

non-essential convenience.

Figure 3: User-Adaptive Robotic Wheel Chair.

ROBOT NAVIGATION MODALITIES

297

Figure 4 shows a fully autonomous rough terrain

tracked vehicle (Jarvis, 997) which uses GPS

localisation, laser range finder obstacle mapping,

and Distance Transform (Jarvis, 1994) path

planning. Only the goal location is indicated on an

environmental map which is populated with

obstacles as they are discovered by on-board

sensors. Collision-free optimal paths to the goal are

recomputed on a fairly continuous basis.

Figure 4: Autonomous Rough Terrain Tracked Robotic

Vehicle.

Figure 5 shows an indoor fully autonomous robot

(Jarvis, 1997) which can map its obstacle strewn

environment and continuously replan its paths to a

nominated goal. Localisation is achieved using a

Denning laser bar code reading localiser with bar

code beacons placed at known locations in the floor

plan.

Figure 5: Autonomous Indoor Beacon Localised Robot.

Figure 6(a) shows a teleoperated boom

lift(Jarvis,2006) and Figure 6(b) a teleoperated fire

tanker(Jarvis,2008). Teleoperation is supported by

video cameras, GPS, laser range finders and

pitch/tilt sensors. Figure 7(a and b) shows some of

the types of environmental mapping data available to

the teleoperator.

Figure 6(a): Teleoperated Boom Truck.

Figure 6(b): Teleoperated Fire Tanker.

Figure 8 illustrates a very recent experiment where

detailed off-line environmental mapping (Jarvis,

2007) was carried out using a Riegl LMS-Z420i

laser scanner provided with registered colour

imaging capabilities. Navigation tasks in the

“cyberspace” created by this environmental data

could be replicated in the real physical space from

which the model data was acquired using a physical

robot. The robot could localise itself using

panoramic images which were matched against

images extracted from the pre-scanned “cyberspace”

data. This approach does rely on the prior collection

of detailed environmental data but this process need

only be done once. The generality of this approach

ICINCO 2008 - International Conference on Informatics in Control, Automation and Robotics

298

and the ease of extension into 3D highly

recommends it for situations where prior data

collection can be justified e.g. in public spaces,

malls, air terminals etc.

Figure 7(a): Colour Rendered 3D Environmental Data.

Figure 7(b): Plan View of 3D Laser Range Scan.

Figure 8: Dense Laser/ Colour Vision Environmental Data

Collected Off-Line.

6 DISCUSSION AND

CONCLUSIONS

The idea that robot navigation solutions should be

flexible to span pure teleoperation to fully

autonomous operations with a three level control

strategy and smooth variations of human

intervention is a very practical one, since it can be

adapted to individual situations and changes of

circumstances at will. Also, as new methods,

improved sensor instrumentation and increased

affordable computation come to hand various

aspects of this approach can be tuned so that the

balance of control may shift but the continuum

maintained.

As the quality of SLAM solutions improve,

human/machine interfaces evolve, swarms replace

individual robots on distributed tasks, questions of

risk and responsibilities resolved and co-operative

interplays with human agencies developed,

maintaining the type of flexibility promoted by this

paper becomes even more reasonable and practical,

particularly as the inclusion of this kind of flexibility

does not impose any great additional cost and

provides a graceful degradation path.

In conclusion, this paper has advocated a flexible

approach to the selection of robot navigation

modalities to suit particular circumstances relating to

knowledge, risk, complexity, efficiency and

reliability factors so that working solutions to

important robot application domains can be applied

now and improved in the future without the

stagnation which may result from a more rigid

approach.

REFERENCES

Leonard, J. J., and Durrant-Whyte, H. F.

(1991)Simultaneous map building and localization for

an autonomous mobile robot. In IROS-91 (Osaka,

Japan), pp. 1442-1447.

Jarvis, R.A. (1993) A Selective Survey of Localisation

Methodology for Autonomous Mobile Robot

Navigation, accepted for presentation at the Robots for

Competitive Industry Conference, July 14-16,

Brisbane Australia, pp. 310-317.

Jarvis, R.A.(1994) On Distance Transform Based

Collision-Free Path Planning for Robot Navigation in

Known, Unknown and Time-Varying Environments,

invited chapter for a book entitled 'Advanced Mobile

Robots' edited by Professor Yuan F. Zang World

Scientific Publishing Co. Pty. Ltd. pp. 3-31.

ROBOT NAVIGATION MODALITIES

299

Jarvis, R.A.(2001) A Vision Assisted Semi-Autonomous

user-Adaptive Rough Terrain Wheelchair, Proc. 4th

Asian Conference on Robotics and its Applications, 6

- 8th June, Singapore, pp.45-50.

Jarvis, R.A.(1997)An Autonomous Heavy Duty Outdoor

Robotic Tracked Vehicle, Proc. International

Conference on Intelligent Robots and Systems,

Grenoble, France, Sept. 8-12, pp.352-359.

Jarvis, R.A. (1997) Etherbot - An Autonomous Mobile

Robot on a Local Area Network Radio Tether, Proc.

Fifth International Symposium on Experimental

Robotics, Barcelona, Catalonia, June 15-18, pp.151-163.

Jarvis, R,.(2006) Four Wheel Drive Boom Lift Robot for

Bush Fire Fighting, 10

th

International Symposium on

Experimental Robotics (ISER 2006), July 6-10, Rio de

Janeiro, Brazil.

Jarvis,R.A.,(2008) Sensor Rich Teleoperation Mode

Robotic Bush Fire Fighting, International Advanced

Robotics Program/EURON WS

RISE’2008,International Workshop on Robotics in

Risky Interventions and Environmental

Surveillance,7

th

to 8

th

Jan. Benicassim, Spain.

Jarvis,R.A., Ho,Ngia and Byrne, J.B, (2007 )Autonomous

Robot navigation in Cyber and Real Worlds, Accepted

for presentation, CyberWorlds 2007, Hanover,

Germany, Oct. 24

th

to 27

th

.

ICINCO 2008 - International Conference on Informatics in Control, Automation and Robotics

300