A NEW METHOD FOR VIDEO SOCCER SHOT CLASSIFICATION

Youness Tabii, Mohamed Ould Djibril, Youssef Hadi and Rachid Oulad Haj Thami ∗

Laboratoire SI2M, Equipe WiM, ENSIAS B.P 713, Université Mohamed V - Souissi, RABAT - Maroc

Keywords:

Video soccer, shot classification, binary image, golden section.

Abstract:

A shot is often used as the basic unit for both video analysis and indexing. In this paper we present a new

method for soccer shot classification on the basis of playfield segmentation. First, we detect the dominant

color component, by supposing that playfield pixels are green (dominant color). Second, the segmentation

process begins by dividing frames into a 3:5:3 format and then classifying them. The experimental results of

our method are very promising, and improve the performance of shot detection.

1 INTRODUCTION

Since football is the most popular game in the world, the analysis of football videos has become an important research field that attracts a great number of researchers. A video document carries both audio and visual information, which makes it possible to analyze this type of document and to extract the semantics of the video by means of several algorithms.

The objectives of football video analysis are (1) to extract events or objects in the scene and (2) to produce general summaries, or summaries of the most important moments in which TV viewers may be interested. Playfield segmentation and the detection of events and objects play an important role in achieving these aims. The analysis of football video is also very useful to professionals of the game, because it enables them to see which team is better in terms of ball possession or to determine which strategy is useful for each team at a specific moment.

A number of related works that deal with the extraction of semantics from soccer videos are available in the literature. In (D. Yow and Liu, 1995), object colour and texture features are employed to generate highlights and to parse TV soccer programs (Y. Gong and Sakauchi, 1995). In (J. Assfalg and Nunziati, 2003), the authors use playfield zone classification, camera motion analysis and players' positions to extract highlights. In (S.C. Chen and Chen, 2003), a framework for the detection of soccer goal shots using combined audio/visual features is presented; it employs soccer domain knowledge and the PRISM approach to extract soccer video data. (L.Y. Duan and Xu, 2003) propose a mid-level framework that can be used to detect events, to extract highlights, and to summarize and personalize sports video; the information it employs includes low-level features, mid-level representations and high-level events. In (Y. Qixiang and Shuqiang, 2005), the authors present a framework based on mid-level descriptors computed after segmenting the playfield with GMMs (Gaussian Mixture Models). In (J. Assfalg and Pala, 2002), camera motion and some object-based features are employed to detect certain events in soccer video. Other works extract replays, highlights, goals and the positions of the players and the referee.

∗ This work has been supported by Maroc-Telecom.

Tabii Y., Ould Djibril M., Hadi Y. and Oulad Haj Thami R. (2007). A NEW METHOD FOR VIDEO SOCCER SHOT CLASSIFICATION. In Proceedings of the Second International Conference on Computer Vision Theory and Applications - IFP/IA, pages 221-224. Copyright © SciTePress

In the present work, the standard RGB colour representation is not convenient for analysis. The RGB values of the decoded frames are therefore transformed into the corresponding coefficients of the HSV colour space before analysis. HSV has three parameters: hue, saturation and brightness. Hue determines the dominant wavelength of the colour, with values ranging from 0 to 360 degrees. Brightness indicates the level of white light (0 - 100), while saturation describes the proportion of chromatic element in a colour, with values ranging from 0 to 100; low values indicate that the colour has much "greyness" and will appear faded. As humans are much more sensitive to hue than to saturation and brightness, hue becomes far more important than the other two parameters, and the HSV representation is therefore well suited to colour analysis.
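The conversion described above can be illustrated with a minimal sketch. It assumes 8-bit RGB input and uses Python's standard `colorsys` module; the helper name `rgb_to_hsv_units` is hypothetical and only rescales the result to the ranges quoted in the text (hue in degrees, saturation and brightness in 0 - 100).

```python
import colorsys


def rgb_to_hsv_units(r, g, b):
    """Convert 8-bit RGB to (hue in degrees, saturation 0-100, brightness 0-100).

    Hypothetical helper: colorsys works on floats in [0, 1], so the inputs
    are normalized first and the outputs rescaled afterwards.
    """
    h, s, v = colorsys.rgb_to_hsv(r / 255.0, g / 255.0, b / 255.0)
    return h * 360.0, s * 100.0, v * 100.0


# A saturated green and a faded, greyish green (illustrative values only).
print(rgb_to_hsv_units(0, 255, 0))
print(rgb_to_hsv_units(110, 140, 105))
```

Both example pixels have a hue close to green, but the second one has a much lower saturation, which is the "greyness" mentioned above.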

In this paper, we present our algorithm for shot classification. It exploits the spatial features of the binarized frame as well as the frame partition (W. Kongwah and Changsheng, 2003) (A. Ekin and Mehrotra, 2003). The algorithm distinguishes long, medium and close-up shots with high precision.

The remainder of this paper is organized as fol-

lows. Section 2 introduces the algorithm for play-

field segmentation. Section 3 describes the new pro-

posed algorithm for the classification of shots in soc-

cer video. Section 4 presents the experimental results.

Finally, Section 5 gives a brief conclusion.

2 PLAYFIELD SEGMENTATION

In this section, we present the statistical computa-

tion of the dominant colour and binarization (playfield

segmentation).

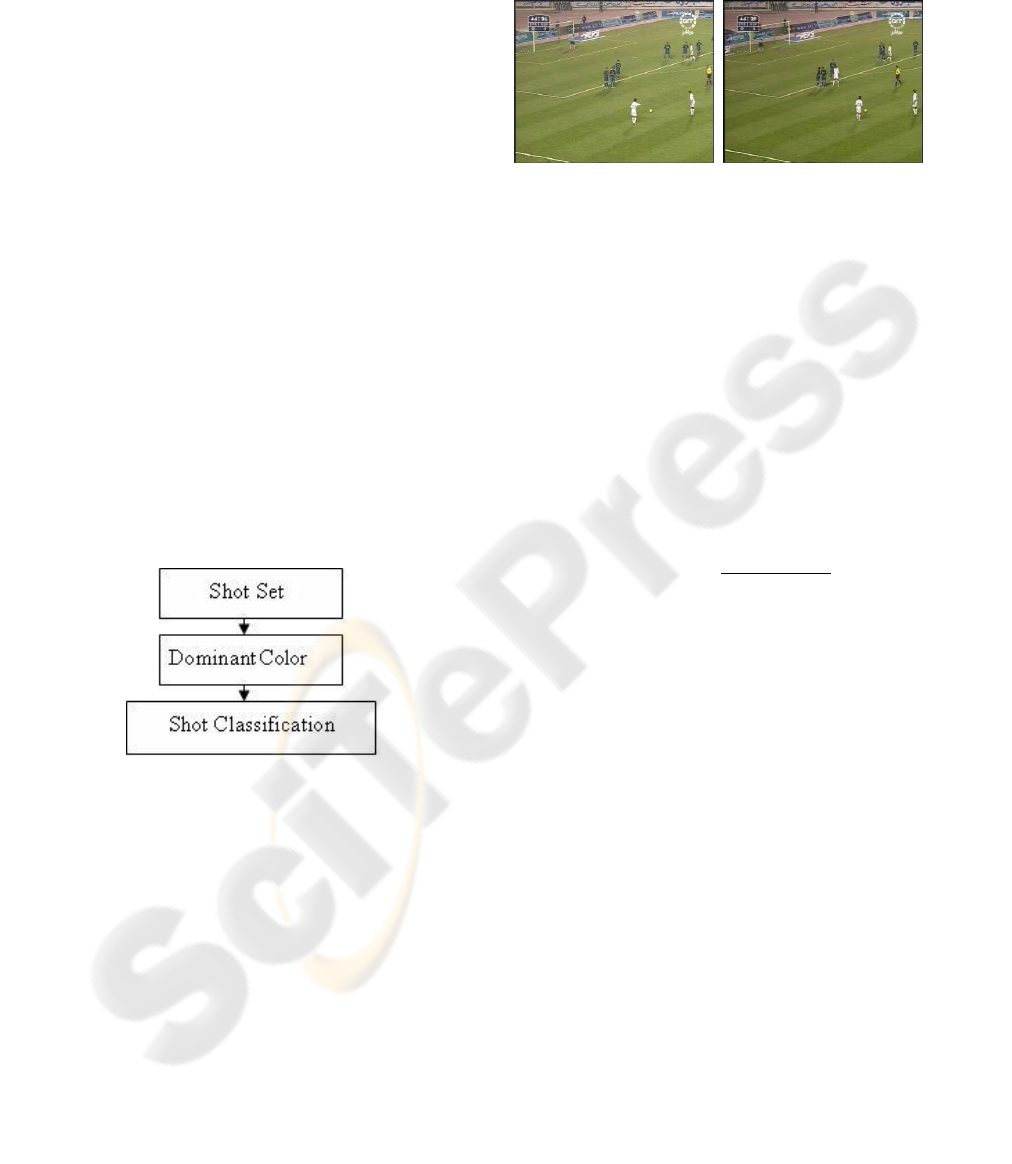

Figure 1: stages for playfield segmentation.

Figure 1 shows the stages of our shot classification procedure. The algorithm consists of three steps: 1) we manually extract the shots that are to be classified; 2) once the shots are extracted, we compute the dominant colour, which allows us to characterize the shots better; 3) we use the binarized frames to distinguish the different shot types.

2.1 Dominant Color Extraction

The playfield usually has a distinct tone of green that

may vary from stadium to stadium. But in the same

stadium, this green colour may also change due to

weather and lighting conditions (see Figure 2). There-

fore, we do not assume any specific value for the dom-

inant colour of the field.

Figure 2: Weather and lighting conditions, panels (a) and (b).

We compute the statistics of the dominant field colour in the HSV space by taking the mean value of each colour component around its respective histogram peak, $i_{peak}$. An interval $[i_{min}, i_{max}]$ is defined around each $i_{peak}$. The same method is adopted in (W. Kongwah and Changsheng, 2003):

$$\sum_{i=i_{min}}^{i_{peak}} H[i] \le 2H[i_{peak}] \quad \text{and} \quad \sum_{i=i_{min}-1}^{i_{peak}} H[i] > 2H[i_{peak}] \tag{1}$$

$$\sum_{i=i_{peak}}^{i_{max}} H[i] \le 2H[i_{peak}] \quad \text{and} \quad \sum_{i=i_{peak}}^{i_{max}+1} H[i] > 2H[i_{peak}] \tag{2}$$

$$colormean = \frac{\sum_{i=i_{min}}^{i_{max}} H[i] \cdot i}{\sum_{i=i_{min}}^{i_{max}} H[i]} \tag{3}$$
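Eqs. (1)-(3) can be sketched as follows for a single colour component. This is an illustrative reading, not the authors' implementation: the function names `peak_interval` and `color_mean` are hypothetical, the histogram is assumed to be a list of non-negative bin counts with at least one non-zero bin, and the interval is simply clipped at the histogram borders.

```python
def peak_interval(hist):
    """Return (i_min, i_peak, i_max) in the sense of Eqs. (1)-(2).

    The interval grows outwards from the peak for as long as the sum of
    the bins between the interval border and the peak stays <= 2*H[i_peak].
    """
    i_peak = max(range(len(hist)), key=lambda i: hist[i])
    limit = 2 * hist[i_peak]

    # Left side, Eq. (1): stop when including bin i_min - 1 would exceed the limit.
    i_min, total = i_peak, hist[i_peak]
    while i_min > 0 and total + hist[i_min - 1] <= limit:
        i_min -= 1
        total += hist[i_min]

    # Right side, Eq. (2): stop when including bin i_max + 1 would exceed the limit.
    i_max, total = i_peak, hist[i_peak]
    while i_max < len(hist) - 1 and total + hist[i_max + 1] <= limit:
        i_max += 1
        total += hist[i_max]

    return i_min, i_peak, i_max


def color_mean(hist):
    """Histogram-weighted mean bin index over [i_min, i_max], Eq. (3)."""
    i_min, _, i_max = peak_interval(hist)
    bins = hist[i_min:i_max + 1]
    return sum(count * i for i, count in enumerate(bins, start=i_min)) / sum(bins)


# Toy 8-bin histogram (made-up counts, not data from the paper).
toy_hist = [5, 8, 9, 20, 15, 10, 4, 1]
print(peak_interval(toy_hist), color_mean(toy_hist))
```

In the toy histogram the peak is bin 3; the interval stops at bin 1 on the left and bin 4 on the right, because adding one more bin on either side would push the partial sum above twice the peak count.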

With a quantization of 64 hue levels, 64 saturation levels and 128 intensity levels, H is the histogram of each colour component (H, S, V). Finally, the colour mean is converted into the RGB space, $(R_{mean}, G_{mean}, B_{mean})$, so as to determine the playfield surface:

$$G(x, y) = \left\{ \begin{array}{ll} 1 & \text{if } \left\{ \begin{array}{l} I_G(x, y) > I_R(x, y) + K(G_{peak} - R_{peak}) \\ I_G(x, y) > I_B(x, y) + K(G_{peak} - B_{peak}) \\ |I_R - R_{peak}| < R_t \\ |I_G - G_{peak}| < G_t \\ |I_B - B_{peak}| < B_t \\ I_G > G_{th} \end{array} \right. \\ 0 & \text{otherwise} \end{array} \right. \tag{4}$$

$G(x, y)$ is the image frame binarized according to the field colour. In our system, the thresholds retained after a number of tests are $R_t = 12$, $G_t = 18$, $B_t = 10$, $K = 0.9$ and $G_{th} = 85$. Eqs. (1)-(3) are computed for every I/P frame.
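The binarization rule of Eq. (4) can be sketched per pixel as below, using the threshold values quoted above. Several points are assumptions: the names `is_playfield` and `binarize` are hypothetical, a frame is represented as a nested list of 8-bit (R, G, B) tuples, the peak colour is supplied by the caller, and the blue-channel test is taken to use $B_t$ (the printed equation shows $R_t$ in that position).

```python
# Thresholds quoted in the text.
R_T, G_T, B_T = 12, 18, 10
K = 0.9
G_TH = 85


def is_playfield(pixel, peak):
    """Apply the six conditions of Eq. (4) to one (R, G, B) pixel."""
    r, g, b = pixel
    r_peak, g_peak, b_peak = peak
    return (
        g > r + K * (g_peak - r_peak)      # green dominates red
        and g > b + K * (g_peak - b_peak)  # green dominates blue
        and abs(r - r_peak) < R_T          # close to the peak colour in R
        and abs(g - g_peak) < G_T          # close to the peak colour in G
        and abs(b - b_peak) < B_T          # close to the peak colour in B (assumed B_t)
        and g > G_TH                       # green bright enough
    )


def binarize(frame, peak):
    """Return the binary mask G(x, y): 1 for playfield pixels, 0 otherwise."""
    return [[1 if is_playfield(p, peak) else 0 for p in row] for row in frame]


# Made-up peak colour and a tiny 1x3 frame: grass-like, grey, darker green.
peak_rgb = (60, 140, 50)
frame = [[(62, 145, 48), (200, 200, 200), (65, 130, 45)]]
print(binarize(frame, peak_rgb))
```

Only the first pixel passes all six tests; the grey pixel fails the green-dominance tests, and the third pixel is rejected because its green value is not far enough above its red value.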

VISAPP 2007 - International Conference on Computer Vision Theory and Applications


4 EXPERIMENTAL RESULTS

The video sequences used to evaluate the shot classification algorithm are MPEG-compressed, which allows us to test the algorithm in the presence of MPEG compression artifacts. The sequences also contain object and camera motion. About 6 hours of video from various soccer matches in different champions leagues, transcoded into MPEG at 352x288 and 1150 kbps, are used.

These video files are first segmented into shots manually. The two visual features (i.e. binarization and golden section) are then computed and normalized for each video shot. In total, we have 435 long shots, 162 medium shots and 220 close-up shots.
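As an illustration of the golden-section feature, the sketch below splits a binarized frame into bands with proportions 3:5:3 and measures the fraction of playfield pixels in each region. It is only a sketch under stated assumptions: applying the 3:5:3 split to both rows and columns, rounding the cut positions, and the names `split_3_5_3` and `region_ratios` are all hypothetical and are not taken from the paper.

```python
def split_3_5_3(length):
    """Return the two cut positions that divide `length` in proportions 3:5:3."""
    return round(length * 3 / 11), round(length * 8 / 11)


def region_ratios(mask):
    """Fraction of playfield pixels (value 1) in each of the 3x3 regions.

    `mask` is a binarized frame given as a nested list of 0/1 values and is
    assumed to be large enough for every region to be non-empty.
    """
    rows, cols = len(mask), len(mask[0])
    r1, r2 = split_3_5_3(rows)
    c1, c2 = split_3_5_3(cols)
    row_bands = [(0, r1), (r1, r2), (r2, rows)]
    col_bands = [(0, c1), (c1, c2), (c2, cols)]
    ratios = []
    for top, bottom in row_bands:
        line = []
        for left, right in col_bands:
            cells = [mask[y][x] for y in range(top, bottom) for x in range(left, right)]
            line.append(sum(cells) / len(cells))
        ratios.append(line)
    return ratios


# Toy 11x11 mask whose three bottom rows are playfield.
toy_mask = [[1 if y >= 8 else 0 for _ in range(11)] for y in range(11)]
print(split_3_5_3(352), split_3_5_3(288))
print(region_ratios(toy_mask))
```

For a 352x288 frame the column cuts fall at 96 and 256 pixels, so the central band is the widest, as the 3:5:3 proportion requires.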

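Table 1: Results of the shot classification algorithm.

| Semantic | True shots | False shots | Precision (%) |
|----------|------------|-------------|---------------|
| LS       | 423        | 12          | 97.2          |
| MS       | 152        | 10          | 93.8          |
| CpS      | 207        | 13          | 94.0          |
| Total    | 782        | 35          | 95.0          |

Where LS is Long Shot, MS is Medium Shot and CpS is Close-up Shot. The Total precision (95.0%) is the mean of the three per-class precisions.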

Table 1 shows the results we obtained for shot classification. The classification rate is high for LS (97.2%) but somewhat lower for MS (93.8%) and CpS (94.0%). This may be due to the pre-fixed thresholds of Linemean and Columnmean; in other words, the features are less discriminant for those types of shots. Nevertheless, the algorithm works satisfactorily.
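The precision figures can be checked with a few lines of arithmetic. The sketch below assumes that precision is the number of correctly classified shots divided by the number of shots of that class, which is consistent with the LS row (423 of 435); the per-class totals are those given in the text and the false counts those of Table 1. The variable names are illustrative.

```python
# (total shots of the class, misclassified shots), taken from the text and Table 1.
counts = {"LS": (435, 12), "MS": (162, 10), "CpS": (220, 13)}


def precision(total, false):
    """Percentage of correctly classified shots."""
    return 100.0 * (total - false) / total


per_class = {name: precision(t, f) for name, (t, f) in counts.items()}

# Two ways of aggregating: mean of the class precisions, or pooling all shots.
mean_precision = sum(per_class.values()) / len(per_class)
total_shots = sum(t for t, _ in counts.values())
total_false = sum(f for _, f in counts.values())
pooled_precision = precision(total_shots, total_false)

for name, value in per_class.items():
    print(f"{name}: {value:.1f}%")
print(f"mean of classes: {mean_precision:.1f}%, pooled: {pooled_precision:.1f}%")
```

The two aggregates differ slightly because the classes have different sizes: the mean of the class precisions weights the three shot types equally, whereas the pooled value is dominated by the more numerous long shots.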

5 CONCLUSION

In this paper we presented a new method for the classification of video soccer shots on the basis of spatial analysis. The main contribution of the presented work is an algorithm for shot classification.

The advantage of our algorithm lies in its simplicity and in its effectiveness in classifying shots for the majority of football matches. Moreover, the analysis of soccer video on the basis of playfield segmentation is very promising.

REFERENCES

A. Ekin, A. T. and Mehrotra, R. (2003). Automatic soccer video analysis and summarization. IEEE, Symp.

D. Yow, B.L. Yeo, M. Y. and Liu, B. (1995). Analysis and presentation of soccer highlights from digital video. ACCV.

J. Assfalg, M. Bertini, A. B. W. N. and Pala, P. (2002). Soccer highlights detection and recognition using HMMs. IEEE.

J. Assfalg, M. Bertini, C. C. A. B. and Nunziati, W. (2003). Semantic annotation of soccer videos: automatic highlights identification. Computer Vision and Image Understanding.

L.Y. Duan, M. Xu, T. C. Q. T. and Xu, C. (2003). A mid-level representation framework for semantic sports video analysis. ACM.

S.C. Chen, M.L. Shyu, C. L. L. and Chen, M. (2003). Detection of soccer goal shots using joint multimedia features and classification rules. Proceedings of the Fourth International Workshop on Multimedia Data Mining, ACM.

W. Kongwah, Y. Xin, Y. X. and Changsheng, X. (2003). Real-time goal-mouth detection in MPEG soccer video. ACM, Berkeley, California, USA.

Y. Gong, L.T. Sin, C. S. H. Z. and Sakauchi, M. (1995). Automatic parsing of soccer programs. IEEE, Syst.

Y. Qixiang, H. Qingming, G. W. and Shuqiang, J. (2005). Exciting event detection in broadcast soccer video with mid-level description and incremental learning. Technical report, MM05, Singapore, ACM.
