EXPLOITING MOBILE DEVICES TO SUPPORT MUSEUM

VISITS THROUGH MULTI-MODAL INTERFACES

AND MULTI-DEVICE GAMES

Fabio Paternò and Carmen Santoro

ISTI-CNR, Via Moruzzi 1, Pisa, Italy

Keywords: Museum Mobile Guides, Multi-Modal Interfaces, Multi-Device Games.

Abstract: Mobile devices are enabling innovative ways to enjoy museum settings. In particular, their multimodal

interfaces can provide unobtrusive support while users are freely moving and new applications, such as

games, can benefit from the combined availability of mobile and stationary devices. In this paper we report

on our new solutions and experiences in the area of mobile support for museum visits.

1 INTRODUCTION

Seamless interoperability of intelligent computing

environments and mobile devices is becoming more

and more popular in various application domains. A

potential application area is the so-called “intelligent

guides”. Several location-aware, context-aware and

multi-modal prototypes have been developed since

the beginning of ubiquitous computing in the early

1990s; see for example (Oppermann and Specht,

2000).

In this paper, we present our recent solutions in

this area, by extending a previous museum guide

(Ciavarella and Paternò, 2004), which already

supported graphical and vocal modalities and

location detection through infrared beacons.

To this end, we have designed an interaction

technique based on two important concepts:

i) it should not be intrusive on the user

experience, by leaving the visual channel open

to enjoy the artwork;

ii) it should be able to somehow directly interact

with the available physical objects.

The first concept is motivated by previous

analysis of museum visitors and how they perceive

the support of computer-based devices. The results

clearly indicated that the user would not be

interested in spending much time understanding how

the electronic guide works, especially because they

will probably not visit the museum again. On the

other hand, the information usually provided by

museums regarding artworks is rather limited (e.g.:

mainly short textual labels), which raises the need

for additional support to be dynamically activated

when something interesting is found during the visit.

For this purpose, it would be useful for visitors to

have the possibility of pointing at the artwork of

interest and controlling audio information with small

hand gestures while still looking at the artwork.

In addition, a museum visit is often an individual

experience: even electronic guides and interactive

kiosks are usually not designed to promote social

interaction to improve the user experience. In this

context, games for mobile guides can provide an

interesting and amusing way to enrich users’

interaction and promote their collaboration. The use

of shared large displays can enable further

functionality, such as presenting the visitors’

position and different ways to represent individual

and cooperative games exploiting the large screen.

The paper is structured in the following way: we

first introduce related work in the area of mobile

guides, then we describe the original Cicero guide

and its main features. Next we discuss how we have

extended it in order to support innovative interaction

techniques through RFIDs and accelerometers.

Then, we describe another extension, which

provides the possibility of several games that can be

played by multiple players using both mobile and

fixed devices. Lastly, we draw some conclusions and

provide indications for future work.

Paternò F. and Santoro C. (2007).

EXPLOITING MOBILE DEVICES TO SUPPORT MUSEUM VISITS THROUGH MULTI-MODAL INTERFACES AND MULTI-DEVICE GAMES.

In Proceedings of the Third International Conference on Web Information Systems and Technologies, pages 459-465

DOI: 10.5220/0001292704590465

Copyright © SciTePress

2 RELATED WORK

The museum domain has raised an increasing

interest regarding the support that can be provided to

visitors through mobile devices. One of the first

works in this area was the Hippie system

(Oppermann and Specht, 2000), which located users

via an IR system with beacons installed at the

entrance of each section and emitters installed on the

artworks. The GUIDE project (Cheverst et al., 2000)

addresses visitors in outdoor environments

supported through several WLANs. In our work, we

focus on indoor visitors, which requires considering

different solutions for supporting them. While such

works provided a useful contribution in the area, we

noticed that in the museum domain innovative

interaction techniques were not yet investigated.

They can be useful to improve user experience by

making the interaction more natural.

Research on gesture interaction for mobile

devices includes various types of interactions:

measure and tilt, discrete gesture interaction and

continuous gesture interaction.

Our study differs from related work since we

extend the tilt interaction by associating tilt events

with a few easy-to-use interaction commands for the

museum guide, and combine such tilt interaction

with other modalities, following a consistent

interaction logic at different levels of the

application.

Physical browsing (Valkkynen et al., 2003)

allows users to select information through physical

objects and can be implemented through a variety of

tag-based techniques. Even mobile phones that can

support it through RFIDs have started to appear on

the market. However, we think that it needs to be

augmented with other techniques in order to make

museum visitors’ interaction more complete and

natural. To this end, we have selected the use of

accelerometers able to detect tilt events, allowing

users to easily select specific information regarding

the artwork identified through physical pointing with

small hand movements, so that the user’s visual

channel can be mainly dedicated to looking at the

artworks.

The area of mobile guide support has received a

good deal of attention, see for example the Sotto

Voce project (Grinter et al., 2002). In this paper we

want to also present a novel solution able to exploit

environments with stationary and mobile devices,

equipped with large and small screens. There are

various types of applications that exploit such

environments (Paek et al., 2004):

“jukebox” applications, which use a shared screen as

a limited resource shared among multiple

users (see the Pick-and-drop interaction

paradigm);

collaborative applications, which allow multiple

users to contribute to the achievement of a

common goal;

communicative applications, which simplify

communication among individuals, see

Pebbles (Myers et al., 1998);

“arena” applications, which support competitive

interactions among users.

CoCicero (Laurillau and Paternò, 2004) allows

collaborative games and interaction among visitors:

one feature is to individually solve games, thereby

enabling parts of a shared game, and supporting a

common goal. This new environment aims to

support multi-user interaction and cooperation in the

context of games for improving the learning of

museum visitors.

3 CICERO

Our interaction technique for museum visitors has

been applied to a previously existing application for

mobile devices: Cicero (Ciavarella and Paternò,

2004). This is an application developed for the

Marble Museum located in Carrara, Italy and

provides visitors with a rich variety of multimedia

(graphical, video, audio, …) information regarding

the available artworks and related items (see Figure

1).

Figure 1: User in the Marble Museum with CICERO.

This application is also location-aware. This is

implemented through a number of infrared beacons

located on the entrance of each museum room. Each

of them is composed of several infrared emitters and

generates an identifier that can be automatically

detected by the application, which thus knows what

room the user is entering and immediately activates

WEBIST 2007 - International Conference on Web Information Systems and Technologies

the corresponding map and vocal comments. This

level of granularity regarding the location (the

current room) was considered more flexible and

useful than a finer granularity (artwork), which may

raise some issues if it is used to drive the automatic

generation of the guide comments.

In addition to information regarding artworks,

sections and the museum, the application is able to

support some services such as showing the itinerary

to get to a specific artwork from the current location.

Most information is provided mainly vocally in

order to allow visitors to freely look around and the

visual interface is mainly used to show related

videos, maps at different levels (museum, sections,

rooms), and specific pieces of information.

4 THE SCAN AND TILT INTERACTION PARADIGM

Digital metadata on artwork facilitates electronic

guides for museums (e.g. it is easier to arrange

temporary exhibitions when metadata can be made

available to electronic guides). Also, our concept of

guide interaction potentially benefits from the fact

that artwork metadata can be structured in a nested,

tree-type structure.

The scan modality operates on a higher level (i.e.

it can be used to choose the main element of

interest), while the gesture modality enables

operations between elements in the metadata

structure (i.e. horizontal tilt allows navigating

among pieces of information at the same level).

These aspects make the interaction more immediate

and potentially easier for visitors to orient

themselves within the information presented by the

device.

When a visitor enters a space, this is detected

through the infrared signals, and a map of the room

is provided automatically.

A visitor then scans an RFID tag associated with

an object by physical selection, and the object is

highlighted graphically on the room map. That is,

information on a mobile device is associated with an

object in the physical environment. In the detailed

data-view, navigation among different pieces of

information can be done by tilting horizontally.

Alternatively, when users enter a room and get

information regarding it, they can use the tilt to

identify/select different artworks in the room

through simple horizontal tilts. Whenever a new

artwork is selected, then the corresponding icon in

the room map is highlighted and its name is vocally

rendered. In order to access the corresponding

information, a vertical tilt must then be performed.

Note that the current interpretation of the tilt event

can also be enabled/disabled through a PDA button.

Figure 2: Example of scan and tilt interaction.

In general, the tilt interface (see Figure 2)

follows a simple to learn pattern: horizontal tilts are

used to navigate through different pieces of

information at the same level or to start/stop some

activity, vertical tilt down events are used to go

down in the information hierarchy and access more

detailed information, whereas vertical tilt up events

are used to get up in the information hierarchy.

Since there are different levels of information

supported (the museum, the thematic sections, the

artworks, and the information associated with specific

artworks and its rendering), when a specific

artwork is accessed, it is still possible to navigate by

horizontal tilting to access voice control (to

decrease/increase the volume), control the associated

video (start/stop), and access information regarding

the author.
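
The tilt pattern described above can be sketched as a cursor moving over a tree of information nodes. The fragment below is only an illustration under stated assumptions: the `Node` and `Navigator` names, the sample content, and the wrap-around at the end of a level are hypothetical and are not taken from the Cicero implementation.

```python
from dataclasses import dataclass, field
from typing import List, Optional


@dataclass(eq=False)  # identity comparison avoids recursing through parent links
class Node:
    """One item in the nested information structure (hypothetical)."""
    name: str
    children: List["Node"] = field(default_factory=list)
    parent: Optional["Node"] = None

    def add(self, child: "Node") -> "Node":
        child.parent = self
        self.children.append(child)
        return child


class Navigator:
    """Moves a cursor over the tree in response to tilt events."""

    def __init__(self, start: Node) -> None:
        self.current = start

    def _siblings(self) -> List[Node]:
        parent = self.current.parent
        return parent.children if parent else [self.current]

    def on_tilt(self, event: str) -> str:
        siblings = self._siblings()
        index = siblings.index(self.current)
        if event == "right":      # next item at the same level
            self.current = siblings[(index + 1) % len(siblings)]
        elif event == "left":     # previous item at the same level
            self.current = siblings[(index - 1) % len(siblings)]
        elif event == "down" and self.current.children:
            self.current = self.current.children[0]   # more detail
        elif event == "up" and self.current.parent:
            self.current = self.current.parent        # back up one level
        return self.current.name


# Usage: a room with two artworks, one of which has two detail items.
room = Node("Room")
statue = room.add(Node("Statue"))
room.add(Node("Relief"))
statue.add(Node("Description"))
statue.add(Node("Author"))
nav = Navigator(statue)
```

In this sketch a horizontal tilt never changes level and a vertical tilt never changes position within a level, which mirrors the consistency of the interaction logic across levels.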

The scan modality is used to orient users in a

physical environment and to select data on a higher

level. After summary information is provided on the

mobile device, gesture modality is used to navigate

between various views/levels of detail by tilting.

[Figure 2 labels: Tilt RIGHT: next object; Tilt LEFT: previous object; Tilt DOWN: select object; RFID reader (SCAN action); Accelerometer (TILT action)]

When the RFID manager receives scan data, it

passes them to the museum application; when the

device is tilted, the tilt manager feeds the tilt data

to the application accordingly.

The gesture modality in our approach utilizes 2D

acceleration sensor hardware from Ecertech. The

sensor hardware is attached to an iPAQ PDA with

Pocket PC operating system and can be used also in

other Pocket PC PDAs and SmartPhones. The sensor

produces signals that are interpreted as events

(TiltLeft, TiltRight, TiltBackward, TiltForward) by

the tilt manager-data processing module of the

mobile device. User-generated tilt-based events can

then be used to execute interactions (selection,

navigation or activation) according to the user

interface at hand.

The scan modality employs RFID technology by

Socket Communications. The RFID reader (ISO

15693) is connected to the Compact Flash socket of

a PDA. Artworks in the museum environment are

equipped with RFID-tags containing identifiers of

the given artwork. A user can obtain information

related to a work of art by placing the device near

the tag and having the data associated with the tag

code passed to the guide application through the

RFID reader hardware.

The first release of the software prototype uses a

simple tilt monitoring algorithm based on static

angle thresholds and taking into account the initial

tilt angle of the device when the application starts.

The tilt of both horizontal and vertical axes is

measured every 1/10 second. These values are then

compared to the original tilt measurement performed

at application start-up time, and if a 15 degree

threshold is exceeded for over 500ms in one of the

axes, this is interpreted as the appropriate tilt gesture

for that axis: 'forward', 'backward', 'left' or 'right'.

Although the algorithm might be deemed as a simple

solution, its usage has allowed us to obtain valuable

preliminary user feedback (see “Evaluation”

section).
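
The threshold-based monitoring described above can be sketched as follows. This is a minimal illustration, not the prototype's code: it assumes angles in degrees, takes the first sample as the start-up reference, and the sign conventions (positive horizontal offset means 'right', positive vertical offset means 'forward') and the tie-break between axes are assumptions.

```python
from typing import Iterable, List, Optional, Tuple

SAMPLE_PERIOD_MS = 100   # tilt is measured every 1/10 second
THRESHOLD_DEG = 15.0     # static angle threshold
HOLD_MS = 500            # threshold must be exceeded for over 500 ms


def classify(dx: float, dy: float) -> Optional[str]:
    """Map offsets from the start-up angles to a gesture name, if any."""
    if abs(dx) > THRESHOLD_DEG and abs(dx) >= abs(dy):
        return "right" if dx > 0 else "left"
    if abs(dy) > THRESHOLD_DEG:
        return "forward" if dy > 0 else "backward"
    return None


def detect_tilts(samples: Iterable[Tuple[float, float]]) -> List[str]:
    """Return the gestures found in a stream of (horizontal, vertical) angles."""
    it = iter(samples)
    try:
        base_x, base_y = next(it)    # reference measured at start-up
    except StopIteration:
        return []
    gestures: List[str] = []
    candidate: Optional[str] = None
    held_ms = 0
    fired = False
    for x, y in it:
        gesture = classify(x - base_x, y - base_y)
        if gesture != candidate:
            # A new candidate (or a return to rest) restarts the timer.
            candidate, held_ms, fired = gesture, 0, False
        else:
            held_ms += SAMPLE_PERIOD_MS
        if candidate is not None and held_ms > HOLD_MS and not fired:
            gestures.append(candidate)   # report each held tilt only once
            fired = True
    return gestures
```

With these constants a tilt is reported on the seventh consecutive sample beyond the threshold, that is, once it has been held for 600 ms; a device already inclined at start-up produces no event until it moves a further 15 degrees.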

5 MULTI-USER INTERACTION THROUGH GAMES

As introduced above, another extension of the

Cicero museum guide supports

collaborative games and interaction among museum

visitors. In CoCicero the objective is to individually

solve games, thereby enabling parts of a shared

game, and supporting a common goal. This

environment aims to support multi-user interaction

and cooperation in the context of games for

improving the learning of museum visitors.

The environment supports both individual and

cooperative games. Users are organised in groups.

Each user is associated with a name and a colour.

The environment supports five types of individual

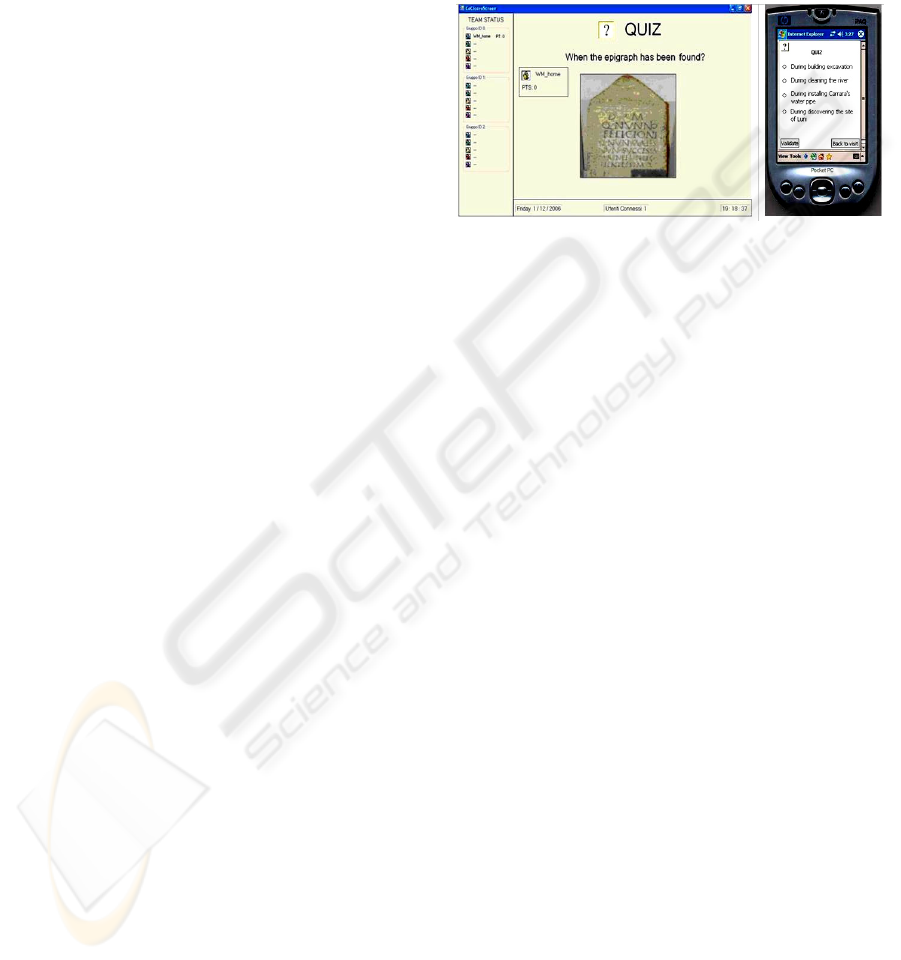

games (see Figure 3):

The quiz is a question with some possible

answers. For each right answer the user gets

two points and one piece is removed from the

shared enigma.

In the associations game, users must

associate images with words, e.g. the author of

an artwork, or the material of an artwork.

In the details game an enlargement of a small

detail of an image is shown. The player must

guess which of the artwork images the detail

belongs to.

The chronology game requires the user to

order chronologically the images of the

artworks shown according to the creation date.

In the hidden word game, the users have to

guess a word: the number of characters

composing the word is shown to help the user.

In all types of exercises, when the user solves the

problem then points are increased and a piece is

removed from the puzzle associated with the shared

enigma. The social games such as the shared enigma

are an important stimulus to cooperation.

Figure 3: Five types of games supported.

The enigma is composed of a series of questions

on a topic associated with the image hidden by the

puzzle. At any time a player can solve the shared

enigma: for each correct answer, one piece is

removed from the puzzle, and the group earns seven

points. This favours larger groups (the maximum

is five members) because they can earn more points,

and it is a stimulus to cooperate. When a member of

the group answers one of the questions, that

question is no longer available to the other

players in the team. This stimulates an interaction

among visitors so that they can first discuss the

answer and agree on it. The PDA interface of the

shared enigma has two parts: the first one shows the

current player’s scores and the hidden puzzle image,

the second shows the questions (with possible

answers).
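
The scoring rules stated above (two points and one puzzle piece for a right quiz answer; seven points and one piece for a right enigma answer; an answered enigma question is locked for the whole team) can be summarised in a short sketch. The `Group` class and its fields are hypothetical, and crediting the seven points to a single group total is an assumption about how they are counted.

```python
from dataclasses import dataclass, field
from typing import Dict, Set

QUIZ_POINTS = 2      # points for a right quiz answer
ENIGMA_POINTS = 7    # points for a right shared-enigma answer


@dataclass
class Group:
    puzzle_pieces: int                                  # pieces still hiding the image
    scores: Dict[str, int] = field(default_factory=dict)
    group_score: int = 0
    answered: Set[int] = field(default_factory=set)     # enigma questions already used

    def _remove_piece(self) -> None:
        self.puzzle_pieces = max(0, self.puzzle_pieces - 1)

    def quiz_answer(self, player: str, correct: bool) -> None:
        """A right quiz answer gives the player points and uncovers a piece."""
        if correct:
            self.scores[player] = self.scores.get(player, 0) + QUIZ_POINTS
            self._remove_piece()

    def enigma_answer(self, question: int, correct: bool) -> bool:
        """Return False if the question was already taken by a team-mate."""
        if question in self.answered:
            return False
        self.answered.add(question)      # locked for the whole team
        if correct:
            self.group_score += ENIGMA_POINTS
            self._remove_piece()
        return True
```

Because a question is locked as soon as any member answers it, right or wrong, the sketch makes explicit why team members have an incentive to agree on an answer first.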

One important feature of our solution is to

support game applications exploiting both mobile

and stationary devices. The typical scenario is users

freely moving and interacting through the mobile

device, who can also exploit a larger, shared screen

of a stationary device when they are nearby. This

improves user experience, otherwise limited to an

individual mobile interaction, and also stimulates

social interaction and communication with other

players, although they may not know each other.

A larger shared screen extends the functionality

of a mobile application enabling the possibility to

present individual games differently, to share social

games representations, and show the position of the

other players in the group. Since a shared display

has to go through several states, the structure of its

layout and some parts of the interface (e.g.: date,

time, number of connected players and list of users

with associated scores) remain unchanged so as to

avoid user disorientation. A game can be shown in

two different modes, selectable through the PDA

interface: normal and distributed. In the normal

mode the PDA interface does not change while on

the large screen a higher resolution image of the

game is shown along with the player state. This

representation is used to focus the attention of

multiple users on a given game exploiting the screen

size. In the distributed mode, the possible answers

are shown only on the PDA, while the question and

a higher resolution image are on the larger screen.

The result of the user answer is shown only on

the PDA interface. In case of social games (e.g. the

shared enigma) if only the PDA is used, the shared

enigma interface visualises sequentially two

presentations (the hidden image and the associated

questions) on the PDA; if the shared screen is

available, the hidden image is shown on it, while the

questions are on the PDA (see Figure 4).
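
The distribution rules just described can be written as a small decision function. This is only a sketch: the function name, the string labels for interface parts, and the behaviour of individual games when no shared screen is nearby are assumptions made for illustration.

```python
from typing import Dict, List


def distribute(game: str, mode: str, shared_screen: bool) -> Dict[str, List[str]]:
    """Return which interface parts go to the PDA and to the large screen."""
    if game == "shared_enigma":
        if shared_screen:
            return {"pda": ["questions"], "large_screen": ["hidden_image"]}
        # Only the PDA: the two presentations are shown one after the other.
        return {"pda": ["hidden_image", "questions"], "large_screen": []}

    full_pda = ["question", "answers", "image", "result"]
    if not shared_screen:
        return {"pda": full_pda, "large_screen": []}
    if mode == "normal":
        # The PDA interface is unchanged; the large screen mirrors the game.
        return {"pda": full_pda,
                "large_screen": ["high_res_game_image", "player_state"]}
    if mode == "distributed":
        # Answers and the result stay private on the PDA.
        return {"pda": ["answers", "result"],
                "large_screen": ["question", "high_res_image"]}
    raise ValueError(f"unknown mode: {mode}")
```

In every branch the result of the answer appears only on the PDA, so the large screen never reveals whether an individual player answered correctly.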

Providing an effective representation of players’

position on the PDA is almost impossible, especially

when they are in different rooms. Thus, the large

shared screen is divided into sections, one for each

player. Each part shows the name and the room

where the player is located and a coloured circle

shows the last work accessed through his/her PDA.

Figure 4: Example of game interface distribution.

5.1 Software Architecture

The main elements of the software architecture are

the modules installed in the mobile devices, in the

stationary device and the communication protocol

designed for the environment. The PDA module is

composed of four different layers, each providing

the other layers with services according to specific

interfaces. From the bottom they are:

Core, which provides the basic mechanisms;

Communication, which implements the network

services for sending/receiving messages;

Visit, which supports interactive access to museum

info;

Games, which supports the interactive games.

In particular, the core implements data structures

useful for the upper layers, e.g. support for

configuration and help, and the XML parsers. It also

contains the concurrency manager of IRDA signals

(infrared signals used to detect when the user enters

a room). The communication layer provides

functionality used to update the information

regarding the state of the players, to connect to

shared stationary displays and to exchange

information among palmtops, and therefore

implements algorithms for managing sockets,

messages, and group organisation. This layer is

exploited by the visit and game layers. The visit

layer supports the presentation of the current room

map and a set of interactive elements. Each artwork

is associated with an icon identifying its type

(sculpture, painting, picture, …), and positioned in

the map according to its physical location. By

selecting this icon, users can receive detailed

information on the corresponding artwork. In

addition, this part of the application allows users to

receive help, access videos, change audio

parameters, and obtain other info.

The games layer has been designed to extend the

museum visit application. The artworks that have an

associated game show an additional white icon with

a “?” symbol, through which it is possible to access

the associated game. If the game is solved correctly

the icon turns green; otherwise it becomes red. In

addition, a further menu item enabling access to

social games, such as the shared enigma, is available

to the user, and the scores are shown in the top left

corner. The games are defined through XML-based

representations, so as to allow easy modifications

and additions. The game layer exploits the parser

implemented at the core level and the services

provided by the communication layer to inform all

players of score updates.
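
To illustrate how an XML-based game definition might be loaded, the sketch below parses a quiz with Python's standard `xml.etree.ElementTree` module. The XML vocabulary shown (the `game`, `question` and `answer` elements and their attributes) is invented for this example; the actual format used by the guide is not specified here.

```python
import xml.etree.ElementTree as ET

# Hypothetical game definition; element and attribute names are invented.
GAME_XML = """
<game type="quiz" artwork="a12">
  <question>Which material is this sculpture made of?</question>
  <answer correct="true">Marble</answer>
  <answer>Bronze</answer>
  <answer>Wood</answer>
</game>
"""


def load_game(xml_text: str) -> dict:
    """Parse one game definition into a plain dictionary."""
    root = ET.fromstring(xml_text.strip())
    answer_nodes = root.findall("answer")
    return {
        "type": root.get("type"),
        "artwork": root.get("artwork"),
        "question": root.findtext("question"),
        "answers": [a.text for a in answer_nodes],
        "correct": [a.text for a in answer_nodes if a.get("correct") == "true"],
    }


game = load_game(GAME_XML)
```

Keeping the definitions in data rather than code is what allows games to be modified or added without touching the application.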

The module associated with the stationary

devices is structured into layers as a server:

The core provides basic data structures, a

monitor to synchronize threads, and a parser;

The communication manager receives and

sends messages to the mobile devices, and

monitors messages in order to update the players’

scores;

The UI manager updates the information

presented according to the messages received.

The system uses a peer-to-peer protocol, which

has been designed for the target environment. The

players and the associated PDAs can organise

themselves in groups without the support of

centralised entities through a distributed algorithm.

All the devices (both mobile and stationary)

monitor a multicast group without the need to know

the IP addresses of the other devices. When

messages are received, the PDAs check whether

they are the target devices: if so, they send the

corresponding answer, otherwise the message is

discarded. The connection with the fixed devices is

performed through TCP by a dedicated socket in

uni-directional manner (from the mobile devices to

the stationary one). The responses from the fixed

device are confirmation or failure messages, relevant

for all players and are thus sent through the multicast

group.
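
The target check performed by each device on the multicast group can be sketched without any real networking. In the fragment below the `Message` fields, the message kind and the device identifiers are hypothetical; the multicast group is simulated by a plain list, whereas the real system uses network sockets.

```python
from dataclasses import dataclass
from typing import List, Optional


@dataclass(frozen=True)
class Message:
    sender: str
    target: str      # identifier of the device that should react
    kind: str        # e.g. "join_request" (hypothetical message type)


def handle(device_id: str, msg: Message) -> Optional[Message]:
    """Reply if this device is the target; otherwise discard the message."""
    if msg.target != device_id:
        return None                      # not for us: discard
    return Message(sender=device_id, target=msg.sender, kind=msg.kind + "_reply")


def multicast(group: List[str], msg: Message) -> List[Message]:
    """Deliver msg to every device in the group and collect the replies."""
    replies = []
    for device_id in group:
        reply = handle(device_id, msg)
        if reply is not None:
            replies.append(reply)
    return replies


group = ["pda1", "pda2", "pda3"]
replies = multicast(group, Message("pda1", "pda3", "join_request"))
```

Every device sees every message, but only the addressed one answers, so no device needs to know the others' IP addresses in advance.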

6 EVALUATION

We performed first evaluations of the extensions of

the prototype we described in the previous sections.

The tests involved more than 10 people recruited in

the institute community.

Before starting the exercise, users were

instructed to read a short text about the application.

Then, a short description about the task that they

were expected to carry out was provided. After

carrying out the exercise, users were asked to fill in

a questionnaire, which was divided into two parts. In

the first part some general information was

requested from the user (age, education level, level of

expertise on using desktop/PDA systems, etc.). The

second part was devoted to questions more

specifically related to the exercise.

6.1 The Scan and Tilt

As far as the scan and tilt paradigm is concerned,

users were asked to scroll through a number of artworks

belonging to a specific section (main window); then,

they were expected to select one artwork (secondary

window) and navigate through the different pieces of

information available (e.g.: author, description,

image, ...), to finally get back to the initial window

in order to finish the exercise.

People involved in the tests reported to be, on

average, quite expert in using desktop systems, but

not particularly expert in using PDAs. Roughly half

of them had already used a PDA before the exercise

(7/12); only a few reported having ever heard of

scan and tilt interaction. On average they judged

scan and tilt useful, with interesting potentialities.

The majority of them (8/12) reported some

difficulties in performing the exercise. Only 2

reported no difficulty, while another 2 reported many

difficulties. People who experienced difficulties

generally attributed them to the novelty of the technique

and their complete lack of experience with such an

interaction technique.

As for the kind of difficulties encountered, there

were aspects connected to the initial difficulty in

using the technique and understanding the tilt

thresholds expected for activating the tilt event, but

most of these problems diminished after the initial

interaction phases. Vertical tilt was found to be the

most difficult interaction, while horizontal tilt was

found the easiest one for the majority of users.

Almost all the users judged the application user

interface to be clear (on a 1-5 scale, only one

reported a value of 2, whereas the others reported 4

or 5). Users judged scan and tilt interaction fairly

easy to use (the mean value was 3 on a 1-5 scale)

and in fact several of them pointed out that

it is just a matter of time to get used to it. They

judged that scan and tilt makes interaction slightly

easier with respect to traditional graphical interfaces,

even if they conceded that it would be quite difficult

to use it without looking at the PDA. All in all the

feedback was positive, even if we are aware that

more empirical testing is needed in order to draw

definite conclusions.

6.2 Collaborative Games

As far as the collaborative games are concerned,

users were asked first to access (and possibly solve)

at least one example of each different type of game,

then try to solve the shared enigma exploiting the

large screen emulated on the screen of a desktop PC.

People involved in the tests reported having, on

average, a medium level of experience (3/5) in using PDAs.

Although they judged the application especially

suitable for schoolchildren, the general feedback

about the application was very good: on a 1-5 scale,

the games were judged amusing (4), intuitive (3.9),

helpful for the learning process (4.2), and successful in

pushing people to collaborate and socialise. The UI of

individual games was rated good (4.3/5), as was

the way in which the functionality was split between

the PDA and the large screen (4.7/5); the UI

supporting such splitting was also rated very good

(4.8/5). Testers were also asked to report the games

they liked most and those they appreciated least: the

details game collected the highest number of positive

responses (unsurprisingly, as it was the game

which enabled the user to select the answer from a

very limited set (3) of possible answers, thus with a

good probability of success even with limited

knowledge about the artworks). Also not

surprisingly, the hidden word was the game users

liked least; they explained that

this game is more difficult because it requires more

knowledge, since it is a quite open question (only the

length of the answer is disclosed to the user), and it

also requires the user to enter a word (and text

editing is not very easy on a PDA, especially for

users with little dexterity with such devices). All

agreed that the large screen facilitates collaboration.

7 CONCLUSIONS

We have reported on recent experiences in

exploiting mobile technologies for supporting

museum visitors. They provide useful indications

about important aspects to consider: multimodal

interfaces, including use of RFID technologies and

games in multi-device environments able to exploit

both mobile and stationary devices.

Future work will be dedicated to identifying

new ways for promoting socialization and

cooperation between visitors. Further work is also

planned for improving the algorithm that manages

the scan-and-tilt interaction paradigm, in order to

enable a more natural interaction with the device.

REFERENCES

Ailisto, H., Plomp, J., Pohjanheimo, L., Strömmer E.: A

Physical Selection Paradigm for Ubiquitous

Computing. Proceedings EUSAI 2003: 372-383.

Cheverst K, Davies N, Mitchell K, Friday A, Efstratiou C

(2000) Developing a context-aware electronic tourist

guide: some issues and experiences. In: Proceedings of

CHI 2000, ACM Press, The Hague, The Netherlands,

pp 17–24.

Ciavarella, C., Paternò, F., The design of a handheld,

location-aware guide for indoor environments,

Personal and Ubiquitous Computing (2004) 8: 82–91.

Grinter, R.E. Aoki, P.M. Hurst, A. Szymanski, M.H.

Thornton J.D. and Woodruff A., Sotto Voce:

Exploring the Interplay of Conversation and Mobile

Audio Spaces. Proc. of CHI, Minneapolis (2002).

Laurillau, Y., Paternò, F., Supporting Museum Co-visits

Using Mobile Devices, Proceedings Mobile HCI 2004,

Glasgow, September 2004, Lecture Notes Computer

Science 3160, pp. 451-455, Springer Verlag.

Mäntyjärvi, J., Kallio, S., Korpipää, P., Kela, J., Plomp, J.,

Gesture interaction for small handheld devices to

support multimedia applications, In Journal of Mobile

Multimedia, Rinton Press, Vol.1(2), pp. 92 – 112,

2005.

Myers B. A., Stiel H., Gargiulo R. - Collaboration Using

Multiple PDAs Connected to PC, in Proceedings of

CSCW, Seattle, USA (1998).

Oppermann R, Specht M (2000) A context-sensitive

nomadic exhibition guide. In: The proceedings of

symposium on handheld and ubiquitous computing,

LNCS 1927, Springer, pp 127–142.

Paek T., Agrawala M., Basu S., Drucker S., Kristjansson

T., Logan R., Toyama K., Wilson A. - Toward Universal

Mobile Interaction for Shared Displays. Proc. of

CSCW’04, Chicago, Illinois, USA (2004)

Rekimoto J., Tilting operations for small screen interfaces,

ACM UIST 1996, pp. 167-168.

Valkkynen P., Korhonen I., Plomp J., Tuomisto T.,

Cluitmans L., Ailisto H., Seppa H., A User Interaction

Paradigm for Physical Browsing and Near-object

Control Based on Tags, Physical Interaction ’03 –

Workshop on Real World User Interfaces, in

conjunction with Mobile HCI’03.
